A video segmentation method and system for translation

A video segmentation technology, applied in the field of video segmentation for video translation, that addresses problems such as wasted translation time and failure to meet translation needs.

Embodiment Construction

[0032] To segment the content of the video text, the prior art offers various segmentation algorithms. However, most of these methods rely on attributes of the video itself, such as picture recognition, scene recognition, or person recognition, and their results mostly split out the continuous pictures of a given scene, regardless of whether the scene formed by those pictures contains a sound stream. Such segmentation is not suitable for translation: within a scene made up of a continuous run of pictures, some parts may contain dialogue and others none, and during the parts without dialogue the translators can only wait.

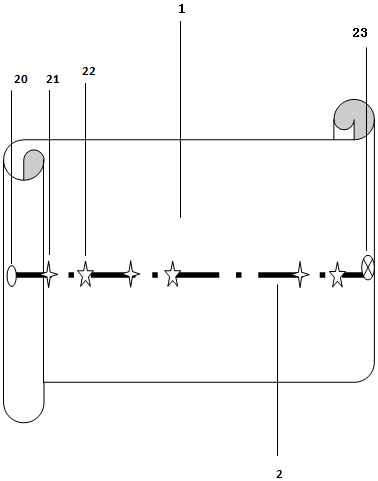

[0033] The method shown in Figure 1 avoids this problem.

[0034] In Figure 1, for the text video portion (1), the sound stream (2) within it is identified, and detection begins for the initial start point (20), middle pause point (21), middle start ...
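The point detection described in [0034] might be sketched as follows. This is only an illustrative sketch, not the patented procedure: the source does not say how the sound stream is analyzed, so the per-frame loudness input, the `threshold` and `min_gap` parameters, the function name `detect_sound_points`, and the label strings are all assumptions introduced here.

```python
from typing import List, Tuple


def detect_sound_points(
    energies: List[float],
    threshold: float = 0.1,  # assumed: loudness at or above this counts as sound
    min_gap: int = 3,        # assumed: silent frames needed before a pause counts
) -> List[Tuple[str, int]]:
    """Return (label, frame_index) pairs marking where sound starts and pauses.

    `energies` holds one loudness value per audio frame (hypothetical input).
    """
    points: List[Tuple[str, int]] = []
    in_sound = False
    silent_run = 0
    for i, energy in enumerate(energies):
        if energy >= threshold:
            if not in_sound:
                # The first start found is the initial one; later ones are middle starts.
                label = "initial_start" if not points else "middle_start"
                points.append((label, i))
                in_sound = True
            silent_run = 0
        elif in_sound:
            silent_run += 1
            if silent_run >= min_gap:
                # Report the pause at the first silent frame of the run.
                points.append(("middle_pause", i - min_gap + 1))
                in_sound = False
                silent_run = 0
    return points


if __name__ == "__main__":
    frames = [0.0, 0.0, 0.5, 0.6, 0.0, 0.0, 0.0, 0.0, 0.7, 0.8, 0.0]
    print(detect_sound_points(frames))
```

Requiring `min_gap` consecutive silent frames keeps brief gaps between words from being reported as pauses. The frames between a start point and the following pause point would then form one candidate segment to hand to a translator.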
