Video call special effect control method, terminal and computer readable storage medium

A video call special effect control technology, applied in the field of communication, that addresses problems such as cumbersome operation of smart devices and low user satisfaction.

Examples

Example 1

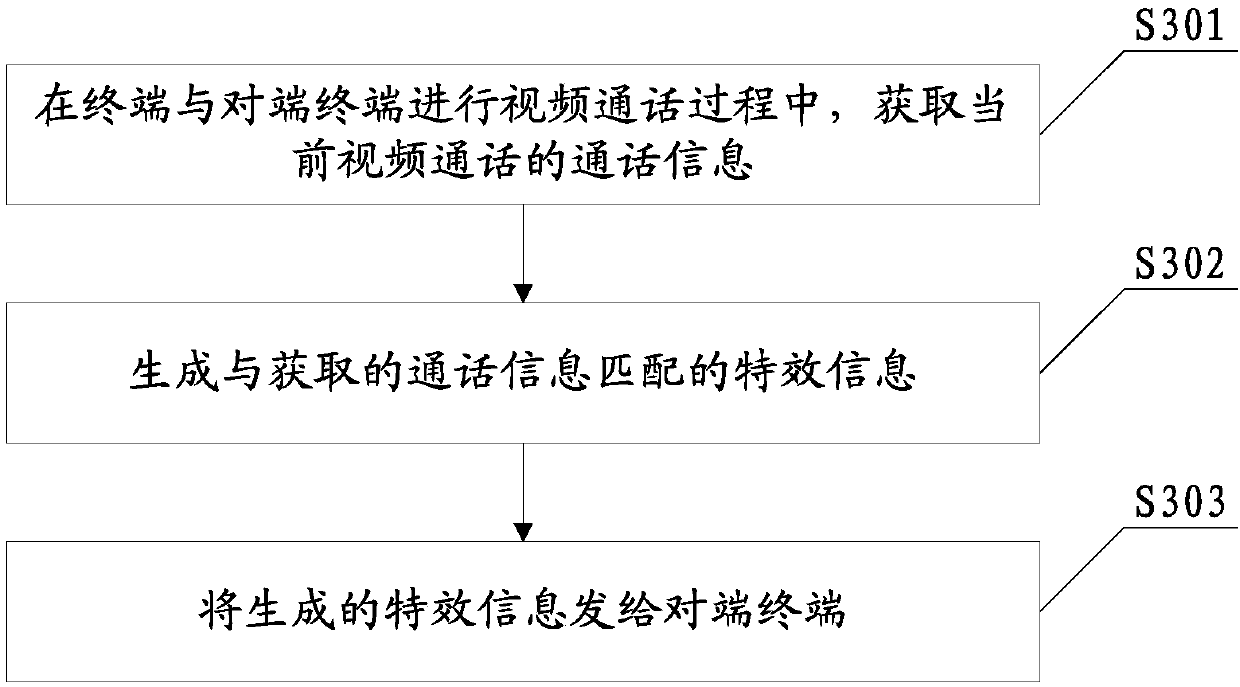

[0077] The video call special effect control method provided in this embodiment is applicable to various electronic devices capable of video calls, including but not limited to smart phones, computers, tablets, and readers. As shown in Figure 3, the video call special effect control method in this embodiment includes:

[0078] S301: Acquiring call information of the current video call during the video call between the terminal and the peer terminal.

[0079] In this embodiment, once the video call between the terminal and the peer terminal is detected, the call information may be collected immediately, or collection may be deferred until a special effect activation condition is triggered. For example, at least one of the following conditions may be used as the special effect activation condition:

[0080] Condition 1: A special effect activation command is received, and the special effect activation command can be issu...
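The two collection timings described in [0079] can be illustrated with a minimal sketch. It is not the patent's implementation: the class `CallInfoCollector`, its methods, and the `fetch_call_info` callback are hypothetical names introduced here, and only Condition 1 (receipt of a special effect activation command) is modeled, since the remaining conditions are not visible in this excerpt.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class CallInfoCollector:
    """Hypothetical helper: decides when call information is collected."""

    # Assumed callback that reads call information from the ongoing call.
    fetch_call_info: Callable[[], Dict[str, str]]
    # True: collect as soon as the call is detected.
    # False: wait until an activation condition is triggered.
    collect_immediately: bool = True
    call_info: Optional[Dict[str, str]] = None

    def on_call_established(self) -> None:
        """Called when the video call with the peer terminal is detected."""
        if self.collect_immediately:
            self.call_info = self.fetch_call_info()

    def on_activation_command(self) -> None:
        """Condition 1: a special effect activation command was received."""
        if self.call_info is None:
            self.call_info = self.fetch_call_info()


# Usage: deferred collection, triggered by an activation command.
collector = CallInfoCollector(
    fetch_call_info=lambda: {"time": "07:30"},
    collect_immediately=False,
)
collector.on_call_established()    # nothing collected yet
collector.on_activation_command()  # call information collected now
```

Keeping the timing decision in a single flag means both behaviors share the same collection path; how the activation command is actually delivered is left open here.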

Example 2

[0098] To facilitate understanding of the present invention, this embodiment further illustrates it using several specific kinds of call information as examples.

[0099] In one example, the call information obtained from the current video call includes time information of the current video call. In this case, generating special effect information that matches the call information includes:

[0100] According to the acquired time information, a time characteristic special effect is matched from a preset correspondence table between time information and time characteristic special effects. In this embodiment, the correspondence table can be set in advance; an example correspondence table is shown in Table 1:

[0101] Table 1

[0102]

| Period | Time characteristic effects |
| --- | --- |
| 6:00 to 8:00 | good morning special effects / good morning spec... |
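The table lookup described in [0100] can be sketched as follows. This is an illustrative sketch only: the function `match_time_effect` and the constant `TIME_EFFECT_TABLE` are hypothetical names, only the 6:00 to 8:00 row is taken from Table 1, the second row is an invented placeholder, and treating the end of each period as exclusive is an assumption the source does not state.

```python
from datetime import time
from typing import List, Optional, Tuple

# Rows are (period start, period end, effect name).
TIME_EFFECT_TABLE: List[Tuple[time, time, str]] = [
    # From Table 1 in the source.
    (time(6, 0), time(8, 0), "good morning special effect"),
    # Hypothetical row, added only to show a table with several periods.
    (time(22, 0), time(23, 59), "good night special effect"),
]


def match_time_effect(call_time: time) -> Optional[str]:
    """Return the effect whose period contains call_time, or None.

    Assumption: a period includes its start and excludes its end.
    """
    for start, end, effect in TIME_EFFECT_TABLE:
        if start <= call_time < end:
            return effect
    return None


# Usage: a call at 7:30 falls inside the 6:00 to 8:00 period.
effect = match_time_effect(time(7, 30))
```

A call time that falls outside every configured period yields `None`, which a caller could treat as "no time characteristic special effect".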

Example 3

[0124] This embodiment provides a terminal, which may be any of various electronic terminals capable of controlling an intelligent terminal. As shown in Figure 7, the terminal in this embodiment may include a processor 701, a memory 702, and a communication bus 703.

[0125] The communication bus 703 is used to realize the communication connection between the processor 701 and the memory 702;

[0126] The processor 701 is configured to execute one or more programs stored in the memory 702, so as to implement the steps of the method for controlling special effects of a video call as exemplified in the above embodiments.

[0127] This embodiment also provides a computer-readable storage medium, which can be used in various electronic terminals and stores one or more programs. The one or more programs can be executed by one or more processors to realize the steps of the method for controlling special effects of a video call as exemplified in the above...
