Method for extracting the boundary of missing areas from airborne LiDAR point cloud data

A technology for point cloud data processing and boundary extraction, applied in image data processing, image analysis, and image enhancement, that addresses problems such as missing-data areas in point clouds.

Embodiment 1

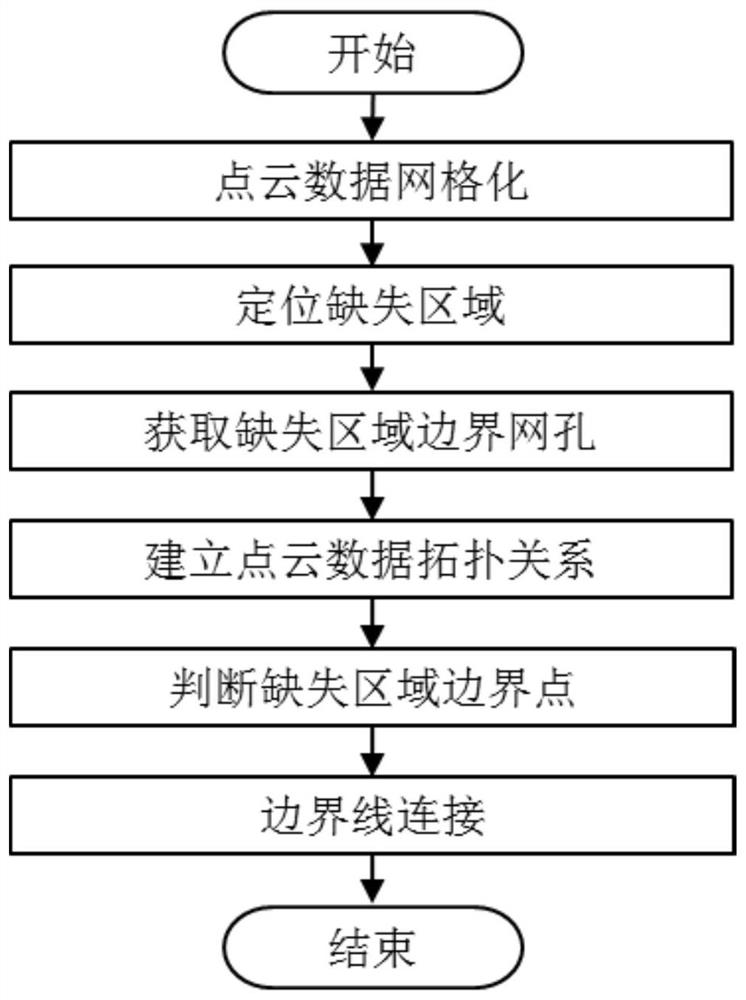

[0031] A method for extracting the boundary of a missing area from airborne LiDAR point cloud data (see Figure 1) includes the following steps:

[0032] 101: Grid the original airborne LiDAR point cloud data to obtain a gridded matrix that contains missing areas, and define this matrix as the grid; traverse the grid with the seed method to obtain the individual, distinguishable missing regions;
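The traversal in step 101 can be illustrated with a short sketch. It assumes that the "seed method" is a seed fill (flood fill) over empty grid cells and that cells are 4-connected; neither detail is stated in the text, and the function name `label_missing_regions` is hypothetical.

```python
from collections import deque


def label_missing_regions(occupied):
    """Group empty cells of a boolean occupancy grid into connected regions.

    occupied[r][c] is True when the cell holds at least one point.
    Returns a list of regions, each a list of (row, col) cells.
    Assumption: 4-connectivity (the source does not say which is used).
    """
    rows, cols = len(occupied), len(occupied[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if occupied[r][c] or seen[r][c]:
                continue
            # Start a new region from this empty seed cell.
            queue = deque([(r, c)])
            seen[r][c] = True
            region = []
            while queue:
                cr, cc = queue.popleft()
                region.append((cr, cc))
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    inside = 0 <= nr < rows and 0 <= nc < cols
                    if inside and not occupied[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            regions.append(region)
    return regions
```

Each returned region corresponds to one distinguishable missing area. Empty cells that touch the edge of the grid may simply lie outside the surveyed extent, so a real pipeline would probably need to treat them separately.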

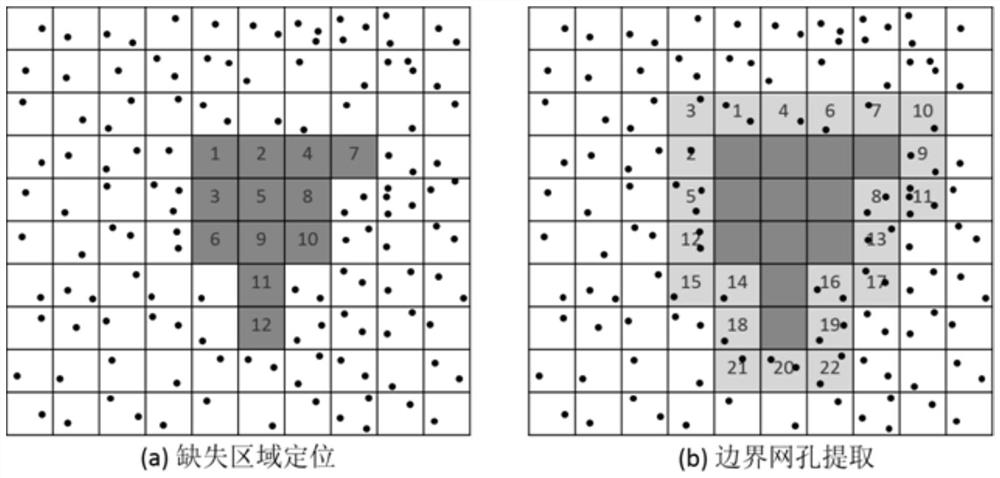

[0033] 102: Expand each missing area to obtain the set of grid cells surrounding it, collect the point cloud data in those cells, and use these points as the initial boundary feature point set of the missing area;
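As a minimal sketch of step 102, the function below grows a missing region by one ring of cells and gathers the point indices stored in that ring. The one-cell expansion width, the 8-connected neighbourhood, and the `cell_points` dictionary layout are assumptions made for illustration only.

```python
def initial_boundary_points(region, cell_points, rows, cols):
    """Collect candidate boundary points from cells surrounding a missing region.

    region: iterable of (row, col) empty cells forming one missing area.
    cell_points: dict mapping (row, col) -> list of point indices in that cell.
    Assumption: the expansion is one cell wide and uses 8-connectivity.
    Returns (ring, candidates): the surrounding cells and the point indices in them.
    """
    region_set = set(region)
    ring = set()
    for r, c in region_set:
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nb = (r + dr, c + dc)
                if nb in region_set:
                    continue  # still inside the missing area
                if 0 <= nb[0] < rows and 0 <= nb[1] < cols:
                    ring.add(nb)
    candidates = []
    for cell in sorted(ring):
        candidates.extend(cell_points.get(cell, []))
    return ring, candidates
```

Restricting the later neighbourhood test to these candidates keeps the expensive per-point check away from the bulk of the point cloud.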

[0034] 103: Use the Kd-tree method to establish the topological relationship among the airborne LiDAR points and obtain the k-neighborhood of each point to be judged;
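The k-neighborhood query of step 103 can be sketched with a small pure-Python kd-tree. This is an illustrative implementation rather than the one used in the patent; the names `build_kdtree` and `k_nearest` are hypothetical, and a production system would more likely rely on an existing spatial index library.

```python
import heapq


def build_kdtree(points, indices=None, depth=0):
    """Build a kd-tree as nested tuples (point_index, axis, left, right)."""
    if indices is None:
        indices = list(range(len(points)))
    if not indices:
        return None
    axis = depth % len(points[0])
    indices = sorted(indices, key=lambda i: points[i][axis])
    mid = len(indices) // 2
    return (
        indices[mid],
        axis,
        build_kdtree(points, indices[:mid], depth + 1),
        build_kdtree(points, indices[mid + 1:], depth + 1),
    )


def k_nearest(tree, points, query, k):
    """Return the indices of the k points nearest to query, closest first."""
    heap = []  # max-heap on squared distance, stored as (-d2, index)

    def visit(node):
        if node is None:
            return
        idx, axis, left, right = node
        d2 = sum((a - b) ** 2 for a, b in zip(points[idx], query))
        if len(heap) < k:
            heapq.heappush(heap, (-d2, idx))
        elif d2 < -heap[0][0]:
            heapq.heapreplace(heap, (-d2, idx))
        diff = query[axis] - points[idx][axis]
        near, far = (left, right) if diff < 0 else (right, left)
        visit(near)
        # Visit the far side only if the splitting plane is closer
        # than the current k-th best distance.
        if len(heap) < k or diff * diff < -heap[0][0]:
            visit(far)

    visit(tree)
    return [idx for _, idx in sorted(heap, reverse=True)]
```

When the query is itself a point in the cloud, it is returned as its own nearest neighbour, so one would ask for k + 1 results and drop the first.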

[0035] 104: Determine whether each point in the initial boundary feature point set is a boundary feature point from the distribution uniformity of the point and its k neighboring points; use the nearest point...
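The excerpt does not give the exact uniformity measure used in step 104. One commonly used stand-in is the largest angular gap between the directions from a point to its neighbours in the projection plane: an interior point is surrounded on all sides, while a boundary point has a wide empty sector. The sketch below uses that criterion as an assumption; the function names and the default threshold of π/2 are hypothetical and are not taken from the patent.

```python
import math


def max_angular_gap(point, neighbors):
    """Largest angle (radians) between consecutive neighbor directions in the XY plane."""
    angles = sorted(
        math.atan2(q[1] - point[1], q[0] - point[0]) for q in neighbors
    )
    if len(angles) < 2:
        return 2 * math.pi
    gaps = [b - a for a, b in zip(angles, angles[1:])]
    # Gap that wraps around from the last direction back to the first.
    gaps.append(2 * math.pi - (angles[-1] - angles[0]))
    return max(gaps)


def is_boundary_point(point, neighbors, gap_threshold=math.pi / 2):
    """Flag a point whose neighbors leave an empty sector wider than gap_threshold.

    gap_threshold is an illustrative value, not one given in the source.
    """
    return max_angular_gap(point, neighbors) > gap_threshold
```

The threshold trades missed boundary points against false positives in sparsely sampled interior areas, so it would need tuning against the actual point density.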

Embodiment 2

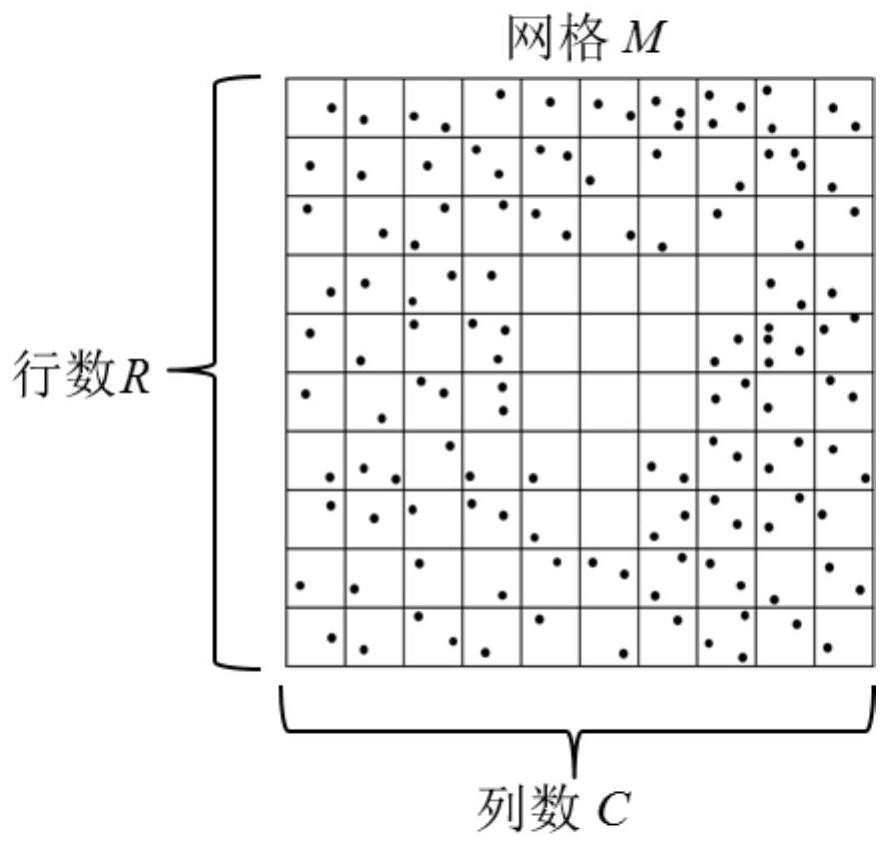

[0046] The scheme of Embodiment 1 is described in further detail below, with reference to the specific calculation formulas and Figures 2 and 3:

[0047] 201: Airborne LiDAR point cloud data gridding;

[0048] The main purpose of gridding the point cloud data is to quickly locate the missing areas and obtain the point cloud data surrounding them. The gridding process can be divided into three steps:

[0049] 1) First, project the point cloud data onto the plane;

[0050] 2) Determine the grid division scale (the number of rows R and the number of columns C) according to certain conditions;

[0051] 3) Assign each point, according to its position in the plane projection, to the corresponding cell of the grid M. A schematic diagram of the point cloud gridding is shown in Figure 2.
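The three gridding steps above can be sketched as follows. The sketch assumes the projection plane is the XY plane and that R and C are already chosen; how they are chosen is not covered here. The function name `grid_points` and the returned data layout are hypothetical.

```python
def grid_points(points, rows, cols):
    """Assign each point to a cell of a rows x cols grid over the XY bounding box.

    points: sequence of (x, y, z); projecting to the plane drops z.
    Returns (occupied, cell_points): a rows x cols boolean matrix and a dict
    mapping (row, col) -> list of point indices that fall in that cell.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_min, y_min = min(xs), min(ys)
    # Fall back to a unit cell size if all points share one coordinate.
    dx = (max(xs) - x_min) / cols or 1.0
    dy = (max(ys) - y_min) / rows or 1.0
    occupied = [[False] * cols for _ in range(rows)]
    cell_points = {}
    for i, (x, y, _z) in enumerate(points):
        # Clamp so points on the maximum edge land in the last row or column.
        c = min(int((x - x_min) / dx), cols - 1)
        r = min(int((y - y_min) / dy), rows - 1)
        occupied[r][c] = True
        cell_points.setdefault((r, c), []).append(i)
    return occupied, cell_points
```

Cells that remain `False` in `occupied` are the candidates for missing areas, and `cell_points` gives direct access to the points surrounding them.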

[0052] The most important step in the gridding process is to determine the division scale of the grid. When determining the grid division scale, the si...
