Multiscale weighted matching and sensor fusion for dynamic vision sensor tracking

A visual sensor and dynamic technology, applied in the direction of instruments, character and pattern recognition, image data processing, etc., can solve problems such as difficult to cross-check camera motion or posture, difficult image matching, lack of key features, etc.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

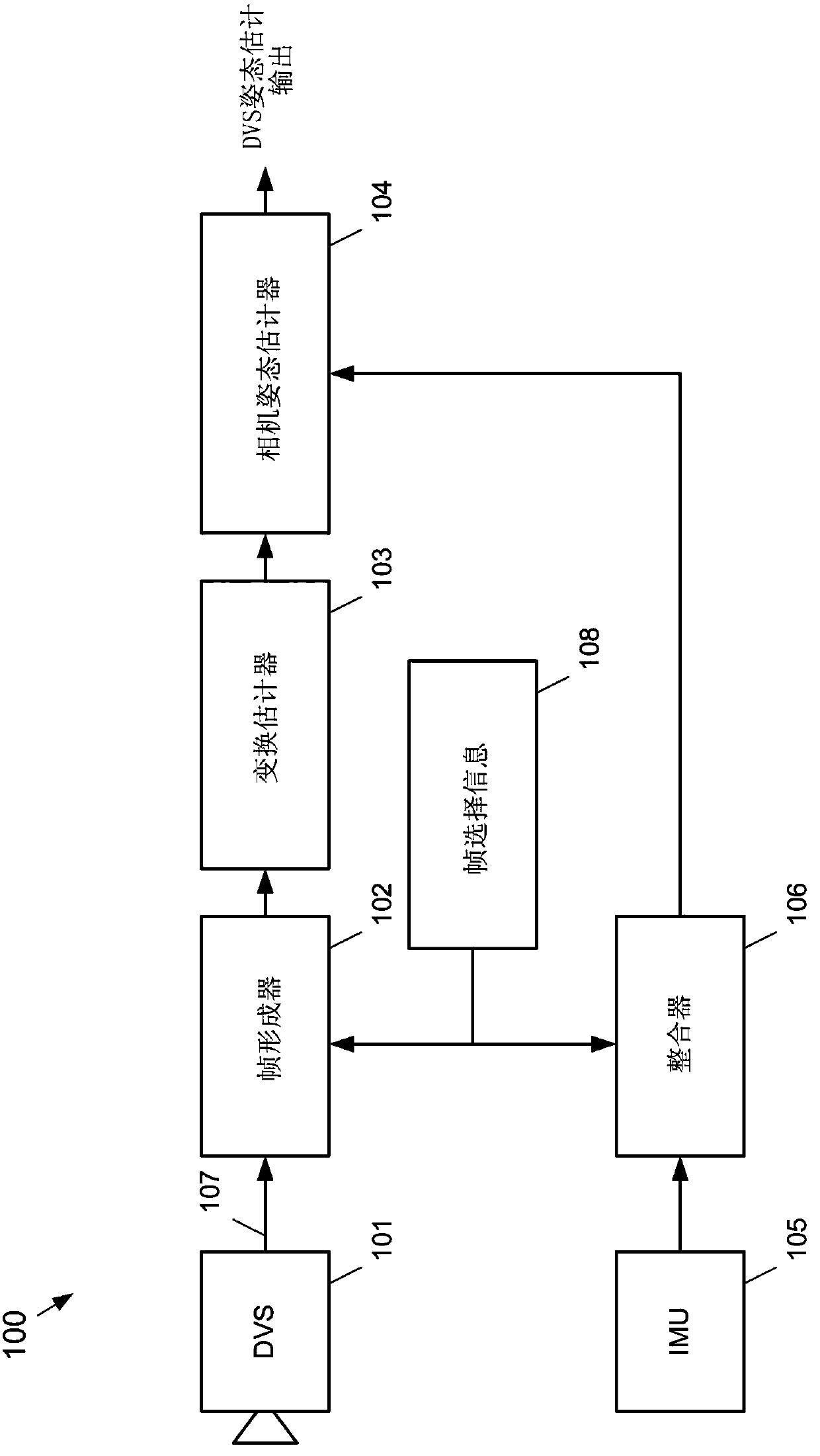

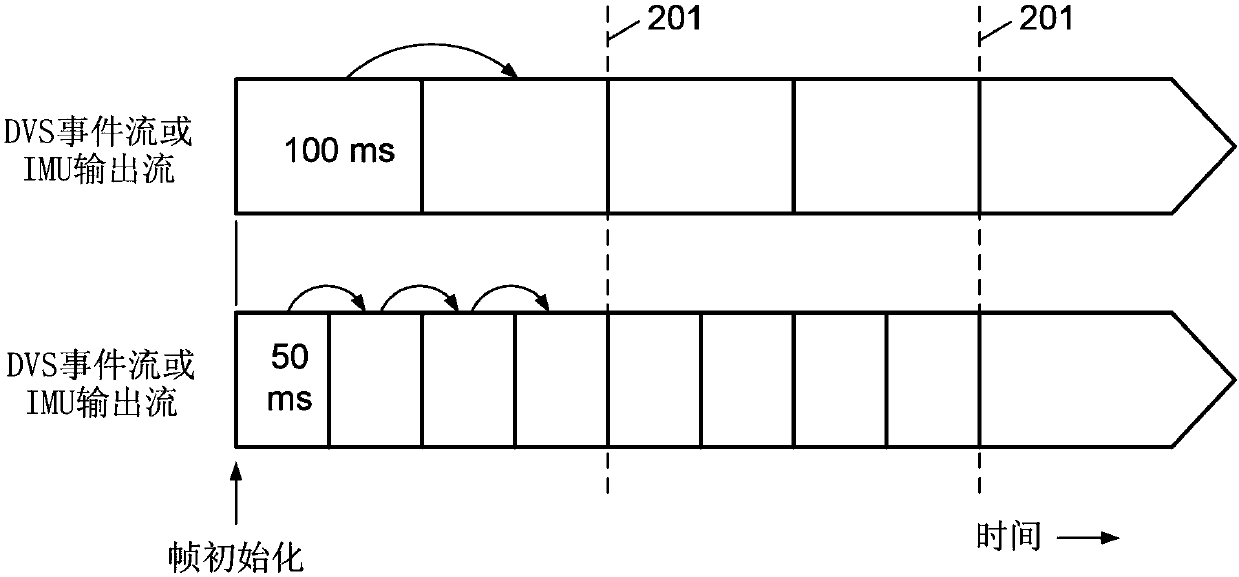

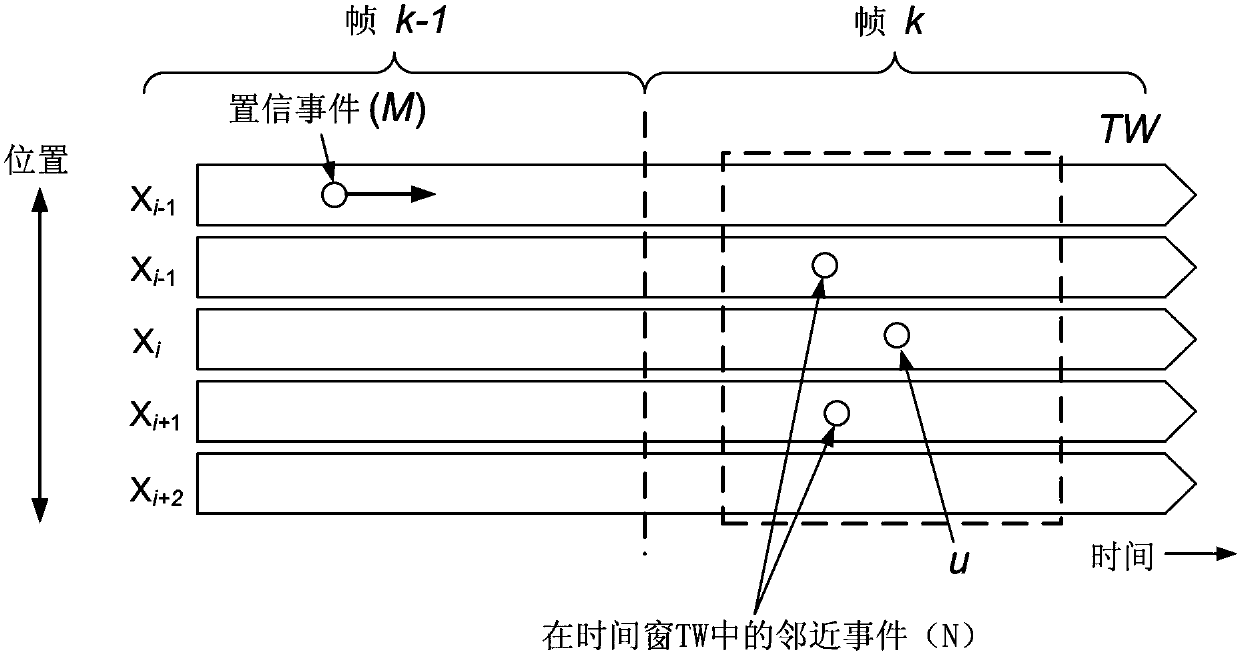

Method used

Image

Examples

Embodiment Construction

[0018] In the following detailed description, numerous specific details are set forth in order to provide a thorough understanding of the present disclosure. However, it will be understood by those skilled in the art that the disclosed aspects may be practiced without these specific details. In other instances, well-known methods, procedures, components, and circuits have not been described in detail so as not to obscure the subject matter disclosed herein.

[0019] Throughout this specification, reference to "one embodiment" or "an embodiment" means that a particular feature, structure, or characteristic described in connection with the embodiment can be included in at least one embodiment disclosed herein. Thus, appearances of the phrases "in one embodiment" or "in an embodiment" or "according to an embodiment" (or other phrases of similar meaning) in various places throughout this specification are not necessarily all referring to the same implementation. example. Further...

PUM

Login to view more

Login to view more Abstract

Description

Claims

Application Information

Login to view more

Login to view more - R&D Engineer

- R&D Manager

- IP Professional

- Industry Leading Data Capabilities

- Powerful AI technology

- Patent DNA Extraction

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic.

© 2024 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap