Tracking and positioning method and device for three-dimensional display

A tracking, positioning, and stereoscopic display technology applied in stereoscopic systems, image communication, electrical components, and related fields. It addresses problems such as inaccurate tracking and positioning and crosstalk, with the effect of reducing crosstalk and optimizing the stereoscopic display effect.



Examples

Embodiment approach 1

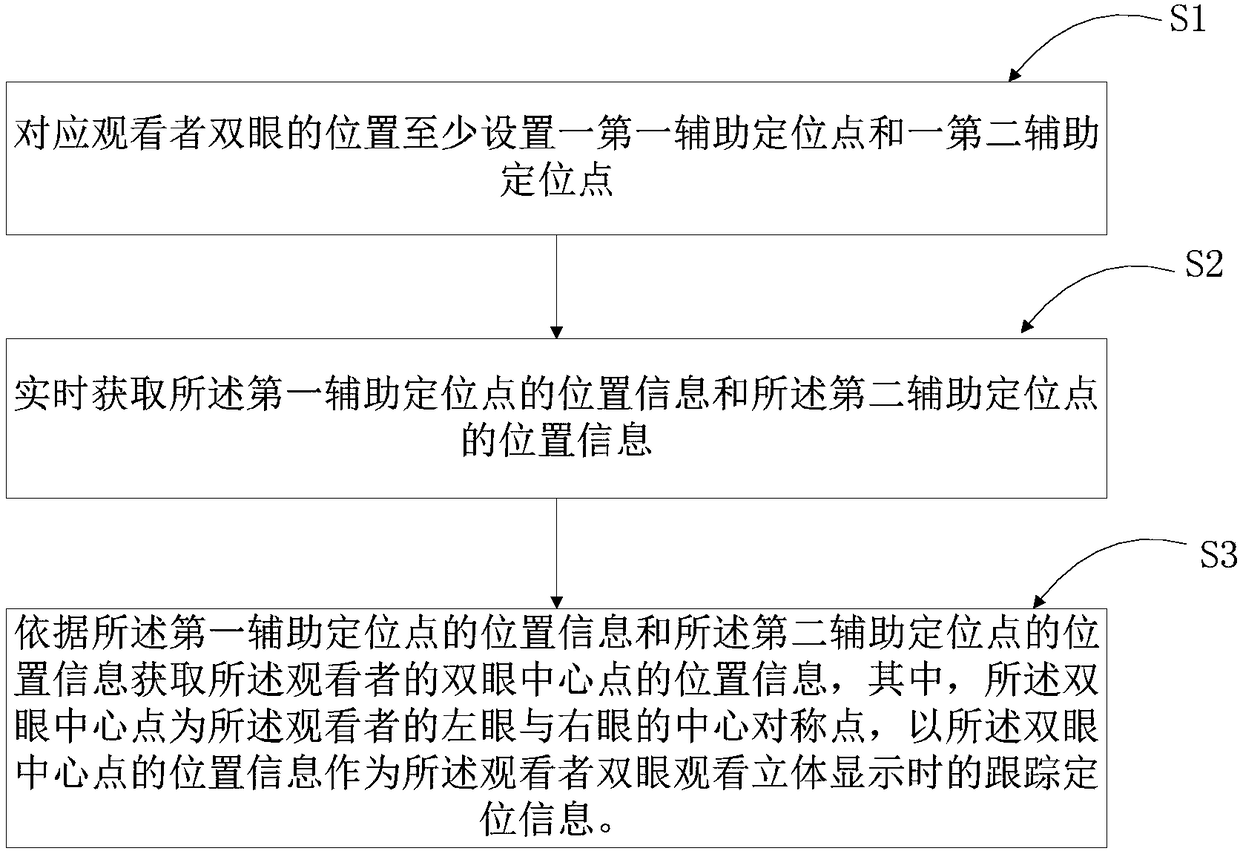

[0024] See Figure 1, which shows a schematic flowchart of the tracking and positioning method for stereoscopic display according to Embodiment 1 of the present invention. The method includes the following steps:

[0025] S1: Set at least a first auxiliary positioning point and a second auxiliary positioning point corresponding to the position of the viewer's eyes;

[0026] S2: Acquire the position information of the first auxiliary positioning point and of the second auxiliary positioning point in real time;

[0027] S3: Obtain the position information of the center point of the viewer's eyes according to the position information of the first auxiliary positioning point and that of the second auxiliary positioning point, wherein the c...
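Steps S1 to S3 can be sketched as a per-frame loop. This is a minimal illustration under assumptions, not the patent's implementation: S2 is modelled as an iterable of point pairs (with `None` for a point that was not detected), and because the excerpt truncates the S3 relation, the eye-center mapping is a pluggable function whose default, a plain midpoint, is only a placeholder. `track_eye_center`, `midpoint`, and `Sample` are hypothetical names.

```python
from typing import Callable, Iterable, Iterator, Optional, Tuple

Vec3 = Tuple[float, float, float]
# One real-time sample from S2 (hypothetical format):
# (first auxiliary point, second auxiliary point); None means "not detected".
Sample = Tuple[Optional[Vec3], Optional[Vec3]]


def midpoint(p1: Vec3, p2: Vec3) -> Vec3:
    """Placeholder S3 model (assumption): eye center = midpoint of the two points."""
    return ((p1[0] + p2[0]) / 2.0, (p1[1] + p2[1]) / 2.0, (p1[2] + p2[2]) / 2.0)


def track_eye_center(
    samples: Iterable[Sample],
    to_center: Callable[[Vec3, Vec3], Vec3] = midpoint,
) -> Iterator[Optional[Vec3]]:
    """Run S2 -> S3 for each frame; yield None when either point is missing."""
    for p1, p2 in samples:
        if p1 is None or p2 is None:
            # S3 needs both auxiliary points, so this frame has no estimate.
            yield None
        else:
            yield to_center(p1, p2)
```

For example, `list(track_eye_center([((-40.0, 0.0, 600.0), (40.0, 0.0, 600.0)), (None, (40.0, 0.0, 600.0))]))` gives `[(0.0, 0.0, 600.0), None]`. Yielding `None` instead of raising leaves it to the caller to decide, for instance, whether to reuse the last valid position when tracking drops out for a frame.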

Embodiment 1

[0030] When the first auxiliary positioning point and the second auxiliary positioning point are infrared signal emitting units arranged on the head of the viewer, the step S2 specifically includes:

[0031] S21: Receive, in real time, the first infrared signal and the second infrared signal emitted simultaneously by the first auxiliary positioning point and the second auxiliary positioning point, respectively;

[0032] S22: Obtain the spatial coordinate information of the first auxiliary positioning point and of the second auxiliary positioning point according to the first infrared signal and the second infrared signal.
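The excerpt does not say how S22 turns the infrared signals into spatial coordinates. One common option, used here purely as an assumption, is to observe the emitters with a rectified pair of infrared cameras and triangulate each spot from its horizontal disparity. `StereoRig` and `triangulate` are hypothetical names, and the camera parameters are illustrative.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class StereoRig:
    """Hypothetical rectified stereo pair of infrared cameras.

    Both cameras share the same focal length and image rows; the right
    camera is offset from the left one by baseline_mm along +x.
    """
    focal_px: float     # focal length in pixels
    baseline_mm: float  # distance between the two camera centers
    cx: float           # principal point, x (pixels)
    cy: float           # principal point, y (pixels)


def triangulate(rig: StereoRig,
                left_px: Tuple[float, float],
                right_px: Tuple[float, float]) -> Tuple[float, float, float]:
    """Return (X, Y, Z) in mm, in the left camera's frame, for one infrared spot."""
    (ul, vl), (ur, _vr) = left_px, right_px
    disparity = ul - ur
    if disparity <= 0:
        raise ValueError("spot must have positive disparity")
    z = rig.focal_px * rig.baseline_mm / disparity  # depth from disparity
    x = (ul - rig.cx) * z / rig.focal_px            # back-project the pixel
    y = (vl - rig.cy) * z / rig.focal_px
    return (x, y, z)
```

With `rig = StereoRig(800.0, 100.0, 320.0, 240.0)`, a spot seen at (400, 240) in the left image and (240, 240) in the right image triangulates to `(50.0, 0.0, 500.0)` mm. Calling `triangulate` once per emitter yields the two sets of coordinates that S22 requires.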

[0033] If the position of the center point of the eyes is used as the input to the mapping algorithm, the calculated tracking coordinates must be converted into the coordinates of the center of the eyes (the nasion point).
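The conversion in [0033] can be illustrated with a small geometric sketch. It assumes, beyond what the excerpt states, that the two tracked points are rigidly fixed to the head and that the nasion sits at a fixed offset from their midpoint, measured once in a head-local frame built from the point-to-point axis and an assumed world "up" direction. `to_nasion` and `local_offset` are hypothetical names.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a: Vec3) -> Vec3:
    n = math.sqrt(_dot(a, a))
    if n == 0.0:
        raise ValueError("degenerate direction")
    return (a[0] / n, a[1] / n, a[2] / n)


def to_nasion(p1: Vec3, p2: Vec3, local_offset: Vec3,
              up: Vec3 = (0.0, 1.0, 0.0)) -> Vec3:
    """Convert two tracked head-mounted points into the nasion (eye-center) point.

    local_offset is the nasion's offset from the midpoint of p1 and p2 in a
    head frame: x points from p1 to p2, y towards 'up', and z = x cross y.
    """
    x = _unit(_sub(p2, p1))
    # Gram-Schmidt: remove the component of 'up' along x to get an orthogonal y.
    d = _dot(up, x)
    y = _unit((up[0] - d * x[0], up[1] - d * x[1], up[2] - d * x[2]))
    z = _cross(x, y)
    ox, oy, oz = local_offset
    return tuple((p1[i] + p2[i]) / 2.0 + ox * x[i] + oy * y[i] + oz * z[i]
                 for i in range(3))
```

Two points alone cannot resolve head roll about their own axis, which is why the sketch relies on the assumed `up` vector; it raises `ValueError` if the two points happen to be aligned with that direction.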

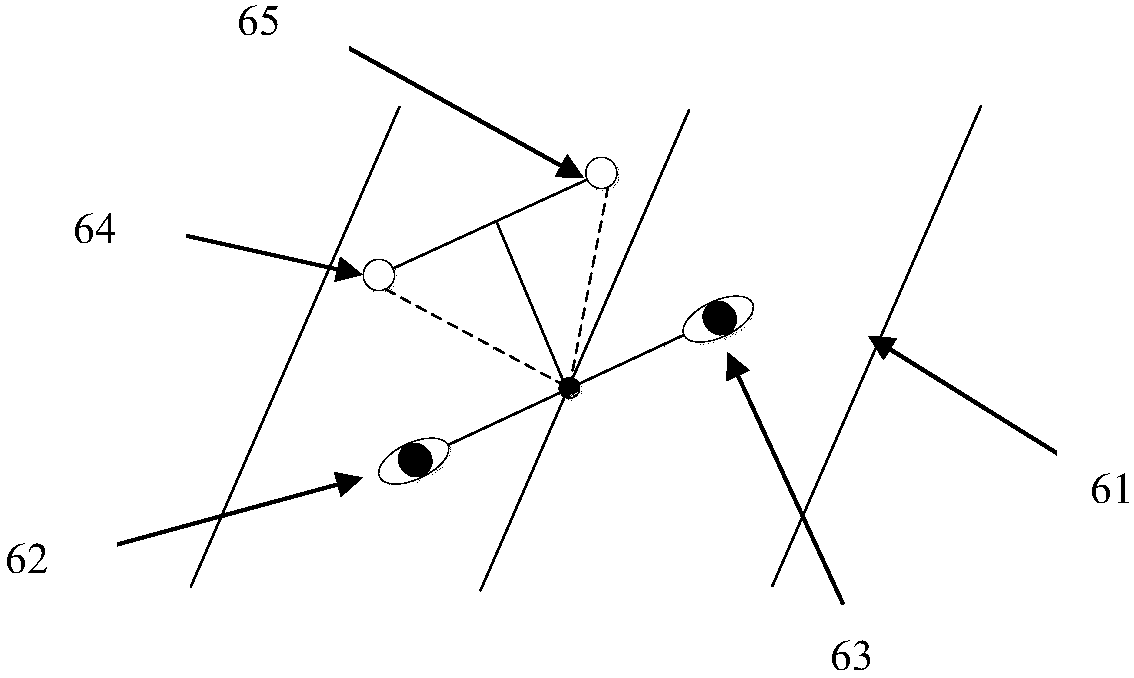

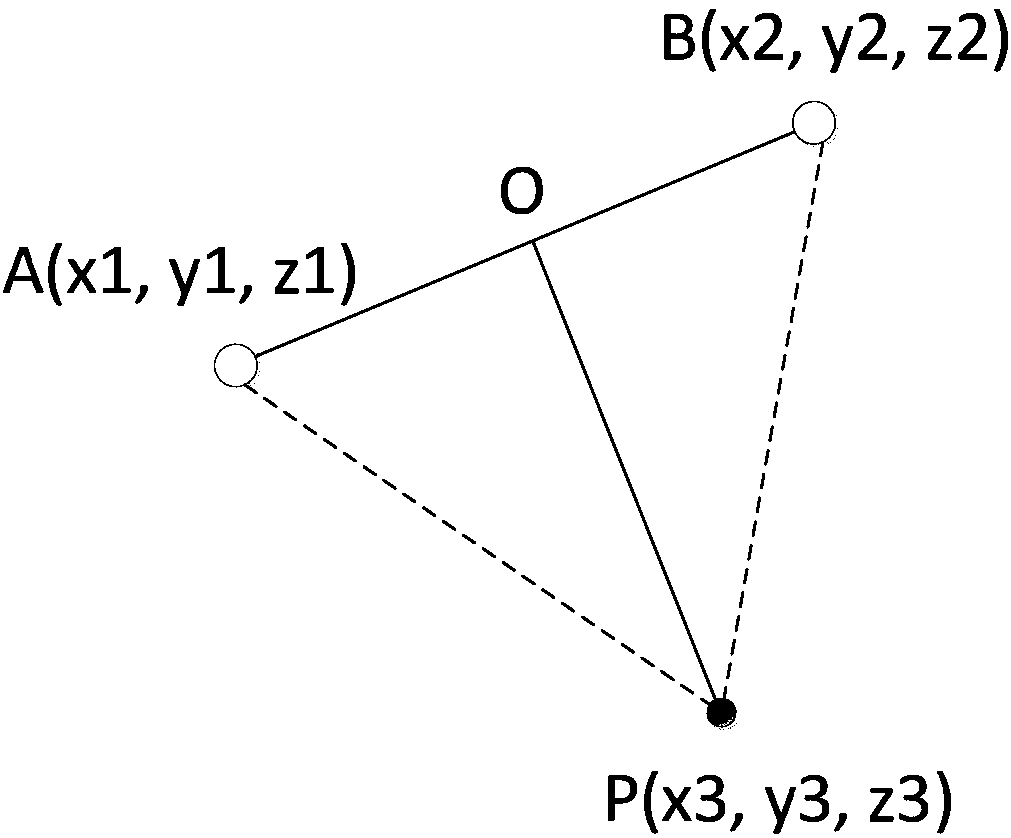

[0034] See Figure 2 and Figure 3. Figure 2 is a schematic diagram showing the relationship between the ...

Embodiment 2

[0039] When the first auxiliary positioning point and the second auxiliary positioning point are feature points of the viewer's face, the step S2 specifically includes:

[0040] S210: Recognize the viewer's face in real time, extract at least two feature points of the viewer's face, obtain the positional relationship between each feature point and the viewer's eyes, and select any two feature points as the first auxiliary positioning point and the second auxiliary positioning point;

[0041] S220: Locate the feature point serving as the first auxiliary positioning point and the feature point serving as the second auxiliary positioning point, respectively, and obtain the spatial coordinate information of each.
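Steps S210 and S220 can be sketched as a calibration step followed by a per-frame estimate. The sketch assumes that the "positional relationship" is stored as a 3D offset from each feature point to the eye center, captured in a calibration frame, and that the head only translates afterwards; handling rotation would require expressing the offsets in a head frame instead. The function names and landmark labels such as `nose_tip` are hypothetical and do not come from any particular face recognition library.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def calibrate_offsets(landmarks: Dict[str, Vec3],
                      eye_center: Vec3) -> Dict[str, Vec3]:
    """S210 (sketch): store each feature point's offset to the eye center."""
    return {name: (eye_center[0] - p[0], eye_center[1] - p[1], eye_center[2] - p[2])
            for name, p in landmarks.items()}


def estimate_eye_center(landmarks: Dict[str, Vec3], offsets: Dict[str, Vec3],
                        first: str, second: str) -> Vec3:
    """S220 (sketch): locate the two chosen feature points and estimate the eye center.

    Each chosen point predicts the eye center as position + stored offset;
    averaging the two predictions damps jitter in either landmark.
    """
    preds = []
    for name in (first, second):
        p, o = landmarks[name], offsets[name]
        preds.append((p[0] + o[0], p[1] + o[1], p[2] + o[2]))
    a, b = preds
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0, (a[2] + b[2]) / 2.0)
```

Any two calibrated feature points can serve as the auxiliary points, which mirrors the "select any two feature points" wording of S210. If one landmark is occluded in a given frame, another calibrated landmark can be substituted without recalibrating.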

[0042] Specifically, the feature points extracted by general face recognition algorithms are...


© 2025 PatSnap. All rights reserved.