Deep neural network model compression method based on asymmetric ternary weight quantization

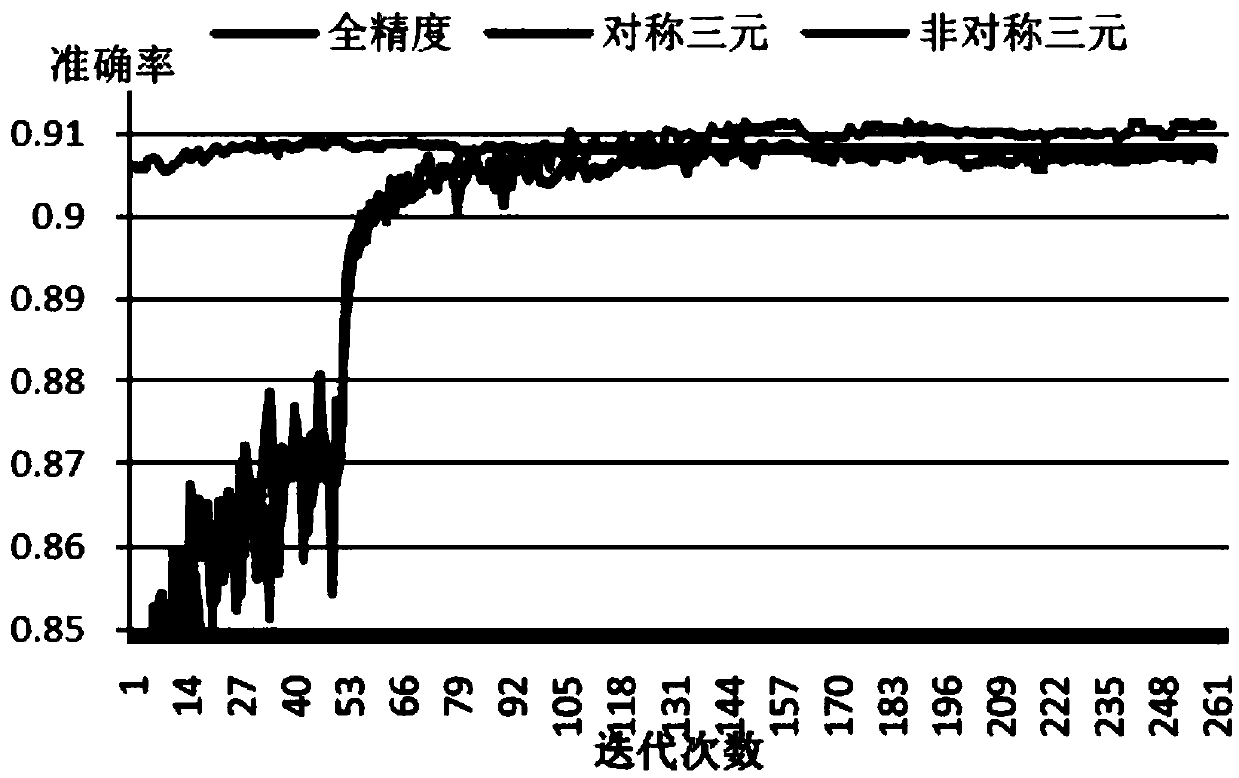

A deep neural network model compression method based on asymmetric ternary weight quantization. It addresses problems such as the limited expressive ability of ternary weight networks, with the effect of improving recognition accuracy, reducing loss, and improving expressiveness.

Embodiment

[0059] Deep neural networks usually contain millions of parameters, which makes them difficult to deploy on devices with limited resources. Most of these parameters are usually redundant, so the main purpose of this invention is to remove redundant parameters and thereby compress the model. The technique is implemented in three main steps:

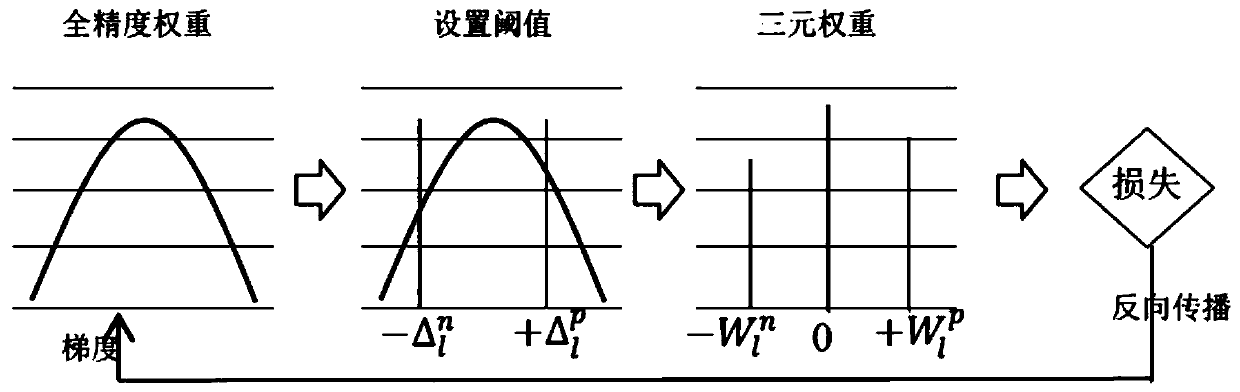

[0060] (1): Asymmetric ternary weight quantization process:

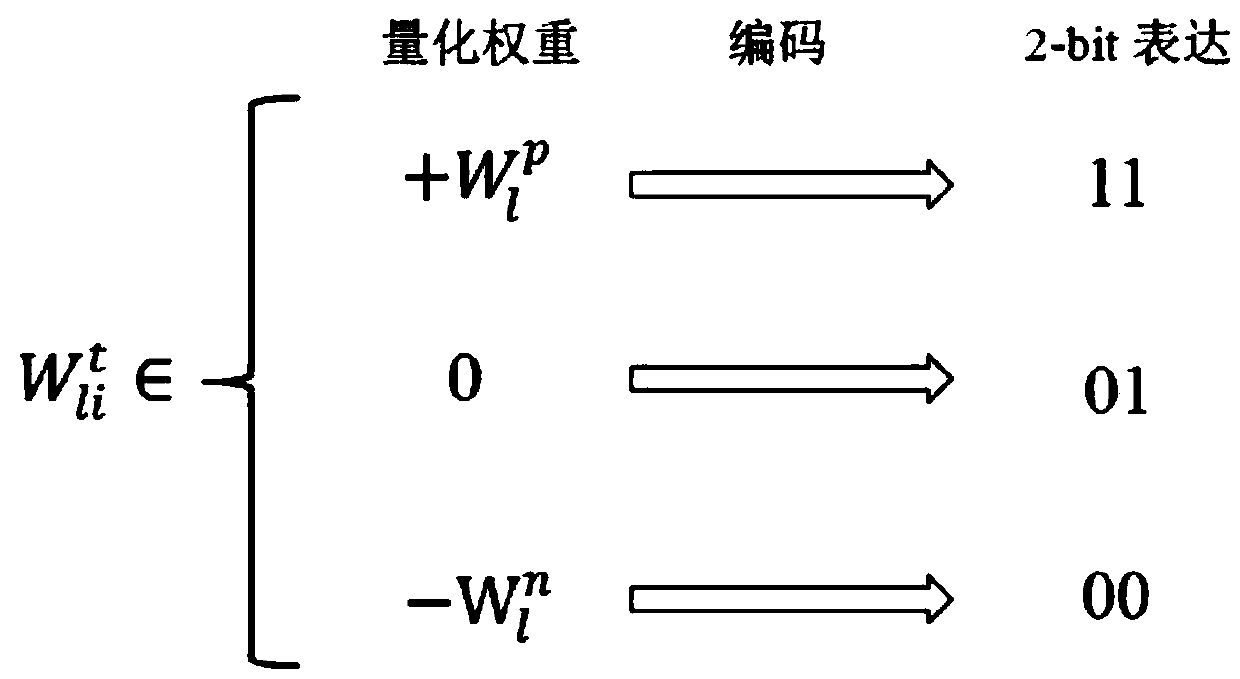

[0061] During network training, the asymmetric ternary weight quantization method quantizes the conventional floating-point network weights to ternary values. The quantization uses threshold setting, and the formula is as follows:

[0062]

[0063] In the formula, the thresholds are those used in the quantization process, and any floating-point number can be assigned to one of the ternary values according to the range it falls in. The corresponding scaling factors are used to reduce the loss caused by the quantization pr...
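The threshold-based assignment described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the patented formula, which is not reproduced in this text. The names `delta_p`, `delta_n`, `alpha_p`, and `alpha_n` are hypothetical, the thresholds are passed in by the caller, and each scaling factor is assumed to be the mean magnitude of the weights in its region (for fixed thresholds, that choice minimizes the squared error within the region).

```python
from typing import List, Tuple


def scaling_factors(weights: List[float], delta_p: float, delta_n: float) -> Tuple[float, float]:
    """Return (alpha_p, alpha_n): mean magnitudes of the weights lying
    above +delta_p and below -delta_n (assumed rule, not from the source)."""
    pos = [w for w in weights if w > delta_p]
    neg = [-w for w in weights if w < -delta_n]
    alpha_p = sum(pos) / len(pos) if pos else 0.0
    alpha_n = sum(neg) / len(neg) if neg else 0.0
    return alpha_p, alpha_n


def ternarize(weights: List[float], delta_p: float, delta_n: float) -> List[float]:
    """Map each floating-point weight to one of {-alpha_n, 0, +alpha_p}."""
    alpha_p, alpha_n = scaling_factors(weights, delta_p, delta_n)
    quantized = []
    for w in weights:
        if w > delta_p:
            quantized.append(alpha_p)    # large positive weight
        elif w < -delta_n:
            quantized.append(-alpha_n)   # large negative weight
        else:
            quantized.append(0.0)        # weight between the two thresholds
    return quantized


# Hypothetical weights and thresholds, chosen only for illustration.
example_weights = [0.5, 1.0, -0.25, 0.0, -2.0, -1.0]
example_quantized = ternarize(example_weights, delta_p=0.25, delta_n=0.5)
```

Because the positive and negative sides have separate thresholds and separate scaling factors, the two nonzero levels need not be mirror images of each other, which is what distinguishes this scheme from a symmetric ternary quantizer.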
