Video scene classification method, device, equipment and storage medium

A video scene classification technique, applied in the field of computer vision, that addresses the difficulty of classifying scenes in video and is described as improving classification accuracy, speeding up recognition, and meeting personalized viewing needs.

Embodiment 1

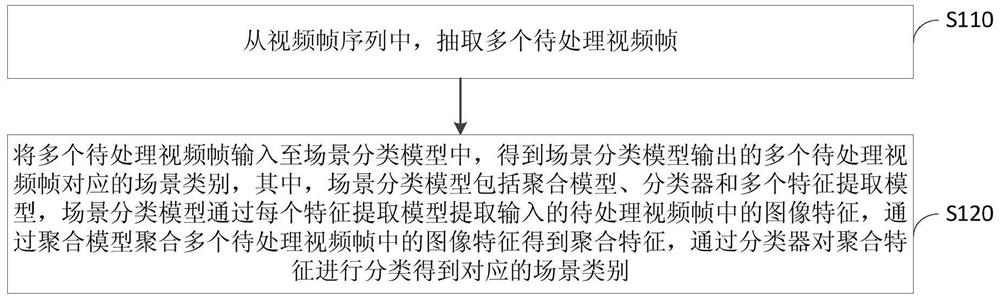

[0025] Figure 1 is a flowchart of a video scene classification method provided in Embodiment 1 of the present disclosure. This embodiment applies to scene classification of a video frame sequence in a video stream. The method can be performed by a video scene classification device, which can be implemented in hardware and/or software and integrated into electronic equipment. The method specifically includes the following steps:

[0026] S110. Extract a plurality of video frames to be processed from the video frame sequence.

[0027] The video frame sequence refers to the consecutive video frames within a period of time in the video stream, for example within a period of 5 seconds or 8 seconds. The video frame sequence includes a plurality of video frames.

[0028] Optionally, when extracting a plurality of video frames to be processed, the extraction may be performed continuously or discontinuously in the video frame sequenc...
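The choice between continuous and discontinuous extraction can be illustrated with a minimal sketch. The function name `sample_frame_indices`, the frame rate of 25 fps, and the even-spacing rule for the discontinuous case are all assumptions made for illustration; the disclosure only states that frames may be extracted continuously or discontinuously from a sequence lasting, for example, 5 or 8 seconds.

```python
def sample_frame_indices(fps, duration_s, num_frames, continuous=False, start=0):
    """Pick indices of the frames to process inside one video frame sequence.

    Hypothetical helper: fps, the even-spacing rule, and the parameter names
    are illustrative assumptions, not taken from the disclosure.
    """
    total = int(fps * duration_s)  # number of frames in the sequence
    if total <= 0 or num_frames <= 0:
        raise ValueError("sequence and sample size must be positive")
    if not 0 <= start < total:
        raise ValueError("start must fall inside the sequence")

    if continuous:
        # Continuous extraction: consecutive frames beginning at `start`,
        # clipped at the end of the sequence.
        return list(range(start, min(start + num_frames, total)))

    # Discontinuous extraction: frames spread evenly over the whole sequence.
    count = min(num_frames, total)
    step = total / count
    return [int(i * step) for i in range(count)]


if __name__ == "__main__":
    # A 5-second sequence at an assumed 25 fps holds 125 frames.
    print(sample_frame_indices(25, 5, 5))
    print(sample_frame_indices(25, 5, 3, continuous=True, start=10))
```

Discontinuous sampling covers more of the sequence with the same number of frames, while continuous sampling preserves short-term motion between adjacent frames; which is preferable depends on the downstream model.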

Embodiment 2

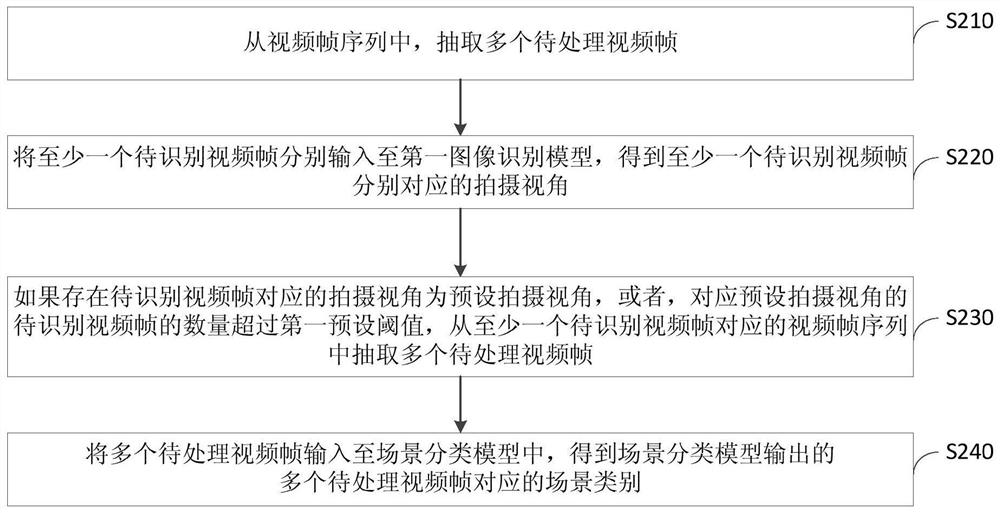

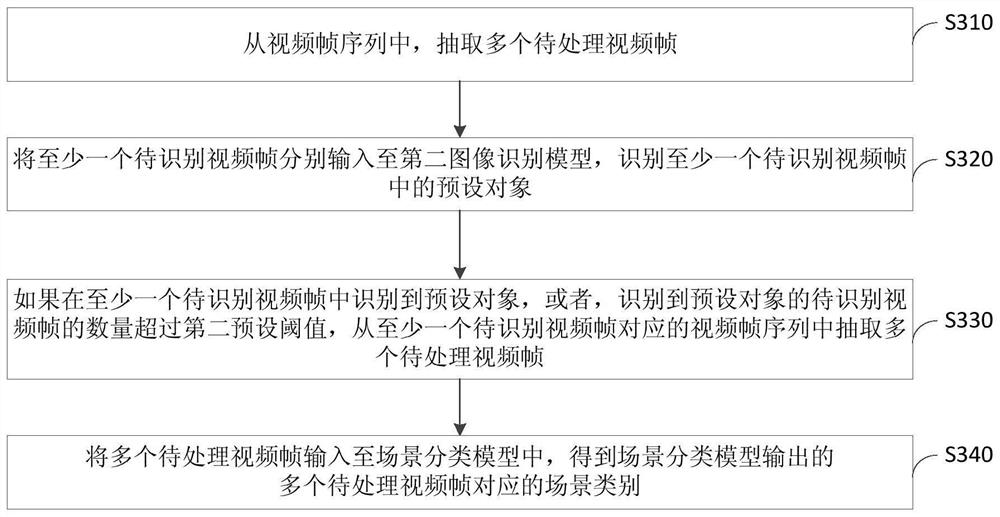

[0051] In each optional implementation of the foregoing embodiment, the video frames to be processed may be extracted from any video frame sequence of the video stream and then classified by scene. However, a video stream contains complex content, and there is no guarantee that the to-be-processed video frames in every video frame sequence belong to one of the preset scene categories. For this reason, this embodiment first locks onto a video frame sequence according to its shooting angle of view, and then performs scene classification on the video frames in that sequence.
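The two-stage idea in the paragraph above (select a sequence by shooting angle of view first, classify its scene second) can be sketched as follows. This is only an illustration under stated assumptions: `recognize_view` and `classify_scene` are hypothetical callables standing in for the image recognition models, the majority vote over frames is an assumed aggregation rule, and `target_view` is a parameter because the visible text does not say which angle of view is selected.

```python
from collections import Counter


def classify_sequences(sequences, recognize_view, classify_scene, target_view):
    """Classify only those sequences whose dominant shooting view matches.

    sequences:      dict mapping a sequence id to a list of frames
    recognize_view: hypothetical callable, frame -> view label
    classify_scene: hypothetical callable, frame -> scene label
    target_view:    view label used to lock a sequence (assumed parameter)
    """
    results = {}
    for seq_id, frames in sequences.items():
        if not frames:
            continue
        # Stage 1: decide the shooting view of the sequence (assumed: majority vote).
        views = Counter(recognize_view(frame) for frame in frames)
        dominant_view = views.most_common(1)[0][0]
        if dominant_view != target_view:
            continue  # sequence is not locked, so it is never scene-classified

        # Stage 2: scene classification restricted to the locked sequence.
        scenes = Counter(classify_scene(frame) for frame in frames)
        results[seq_id] = scenes.most_common(1)[0][0]
    return results


if __name__ == "__main__":
    # Toy frames encoded as "view:scene" strings, for demonstration only.
    demo = {
        "seq_a": ["close:goal", "close:goal", "long:crowd"],
        "seq_b": ["long:crowd", "long:pitch"],
    }
    print(classify_sequences(
        demo,
        recognize_view=lambda f: f.split(":")[0],
        classify_scene=lambda f: f.split(":")[1],
        target_view="close",
    ))
```

Filtering by view before classification keeps the scene classifier from being asked about sequences that are unlikely to belong to any preset scene category.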

[0052] Figure 2 is a flowchart of a video scene classification method provided in Embodiment 2 of the present disclosure. This embodiment can be combined with any of the optional solutions in one or more of the foregoing embodiments, and specifically includes the following steps:

[0053] S210. Extract at least one to-be-identified vide...

Implementation approaches

[0061] First implementation: input at least one to-be-recognized video frame into a first image recognition model, respectively, to obtain the shooting angle of view of each to-be-recognized video frame as output by the first image recognition model.

[0062] In this implementation, the first image recognition model directly recognizes the shooting angle of view of the to-be-recognized video frame. Accordingly, the first image recognition model is trained on two kinds of labeled data: video frame samples shot from a long-distance perspective paired with long-distance perspective labels, and video frame samples shot from a short-distance perspective paired with short-distance perspective labels.
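The training data described above amounts to pairs of a frame sample and a perspective label. A minimal sketch of assembling such pairs is shown below; the function name `build_training_set`, the integer label encoding, and the shuffling step are assumptions for illustration, and the model architecture and training procedure are deliberately left out because the text does not specify them.

```python
import random

# Assumed label encoding for the two shooting perspectives.
LABELS = {"long_distance": 0, "short_distance": 1}


def build_training_set(long_shot_frames, short_shot_frames, seed=0):
    """Pair each frame sample with its perspective label and shuffle the result.

    Hypothetical helper: frames can be any objects (file paths, arrays, ...).
    """
    pairs = [(frame, LABELS["long_distance"]) for frame in long_shot_frames]
    pairs += [(frame, LABELS["short_distance"]) for frame in short_shot_frames]
    # Shuffle with a fixed seed so both classes are mixed reproducibly.
    random.Random(seed).shuffle(pairs)
    return pairs


if __name__ == "__main__":
    samples = build_training_set(["far_01.png", "far_02.png"], ["near_01.png"])
    for frame, label in samples:
        print(frame, label)
```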

[0063] Second implementation: input at least one to-be-recognized video frame into the first image recognition model, respectively, to obtain the display area of the target object in each to-be-recognized video frame as output by the first image recognition model....

