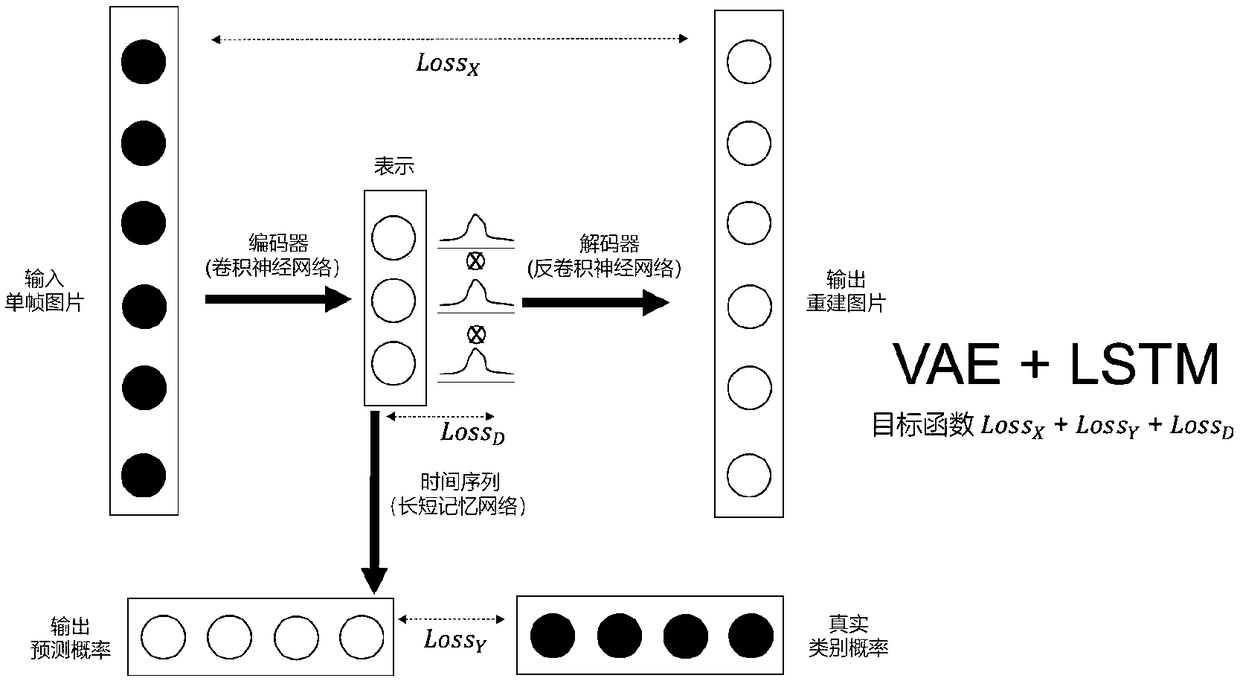

Emotion recognition method and system based on deep learning model and long-short memory network

An emotion recognition technology based on deep learning, applied in signal pattern recognition, character and pattern recognition, instruments, and related fields. It addresses two problems of existing approaches: they cannot generate visual signals, and they cannot learn EEG signals well. The effects are improved accuracy and a reduced influence of subjective factors.


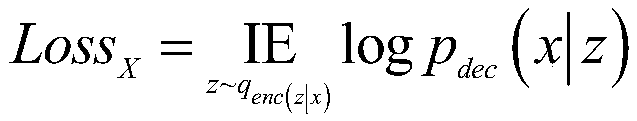


Embodiment

[0076] First: EEG signal data preprocessing and division of data sets

[0077] DEAP is selected as the dataset for training the model. Note that this method is not limited to a specific EEG dataset, nor by the number of EEG channels, the number of emotion categories, or the division method. DEAP is a public multimodal dataset (EEG, video, etc.). EEG signals were recorded from 32 channels for each of 32 participants while they watched 40 videos of 63 s each. The EEG data were pre-processed: down-sampled to 128 Hz and restricted to the 4-45 Hz frequency band. Following the same transformation concept, a Fast Fourier Transform (FFT) is applied to each 1-second EEG segment, which is then converted into an image. In this experiment, alpha (8-13 Hz), beta (13-30 Hz) and gamma (30-45 Hz) were taken as the frequency bands representing relevant activity in the brain. The next step is a transformation using the Azimuthal Equidistant Projection (AEP) and the Clough-Tocher scheme, resulting in three 32x32 pixel images correspondin...
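The first two stages described in [0077] can be illustrated with a minimal sketch using only the Python standard library. It is not the patent's implementation: the function names `band_powers` and `azimuthal_equidistant` are hypothetical, summarising each band by the mean squared DFT magnitude is an assumption (the text does not say how a band is reduced to one value), and electrode positions are assumed to be Cartesian coordinates with the z axis pointing toward the top of the head. A 1-second window at 128 Hz contains 128 samples, so DFT bin k corresponds to k Hz.

```python
import cmath
import math

FS = 128  # sampling rate in Hz after down-sampling, as stated in the text
BANDS = {"alpha": (8, 13), "beta": (13, 30), "gamma": (30, 45)}  # Hz


def band_powers(window, fs=FS):
    """Return one value per band for a single-channel window.

    Assumption: a band is summarised by the mean squared DFT magnitude
    of the bins whose frequency f satisfies low <= f < high.
    """
    n = len(window)
    # Naive DFT over the non-negative frequencies; bin k is k * fs / n Hz.
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(window)
        )
        spectrum.append(abs(coeff) ** 2)
    powers = {}
    for name, (low, high) in BANDS.items():
        bins = [p for k, p in enumerate(spectrum) if low <= k * fs / n < high]
        powers[name] = sum(bins) / len(bins)
    return powers


def azimuthal_equidistant(x, y, z):
    """Project a 3-D electrode position onto a plane, centred on the +z axis.

    The angular distance from the centre becomes the 2-D radius and the
    azimuth is preserved, which is the defining property of the AEP.
    """
    r = math.sqrt(x * x + y * y + z * z)
    polar = math.acos(z / r)    # angle away from the +z axis
    azimuth = math.atan2(y, x)  # direction around the +z axis
    return polar * math.cos(azimuth), polar * math.sin(azimuth)


# Synthetic 1-second window: a pure 10 Hz sine, which lies in the alpha band.
window = [math.sin(2 * math.pi * 10 * t / FS) for t in range(FS)]
powers = band_powers(window)
flat_xy = azimuthal_equidistant(1.0, 0.0, 0.0)
```

For the remaining step, the projected 2-D electrode positions and the per-band values would be passed to a Clough-Tocher interpolator evaluated on a 32x32 grid, once per band; SciPy offers `scipy.interpolate.CloughTocher2DInterpolator` for this. In practice the naive DFT loop would likely be replaced by a library FFT; it is written out here only to keep the sketch dependency-free.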
