A method for visual tracking through spatio-temporal context

A visual tracking technique based on spatio-temporal context, applied in the visual tracking field of computer vision. It addresses problems such as degraded tracking-model quality, tracking-target drift, and erroneous background information, and it alleviates the noisy-sample problem and avoids tracking drift.


Embodiment Construction

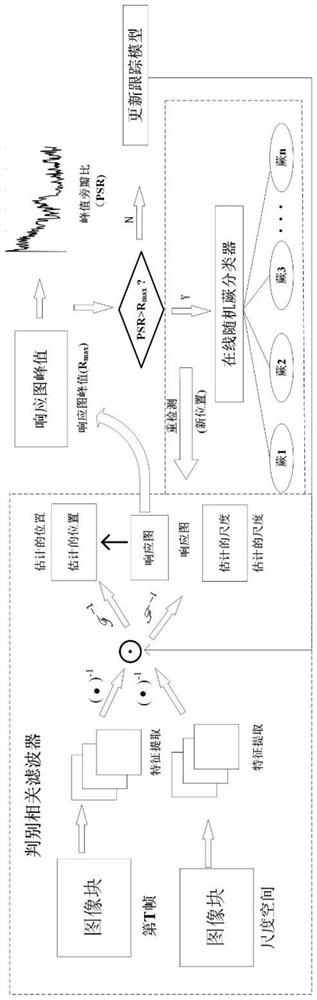

[0050] As shown in Figure 1, the overall steps of the present invention are:

[0051] Step 1: Initialize parameters;

[0052] Step 2: Train a context-aware filter to obtain a position model;

[0053] Step 3: Train a scale correlation filter and take its maximum scale response value to obtain a scale model; steps 2 and 3 may be performed in either order;

[0054] Step 4: The classifier outputs the response map; the discriminative correlation filter generates the peak-to-sidelobe ratio corresponding to the peak of the response map;

[0055] Step 5: Compare the peak value of the response map with the peak-to-sidelobe ratio. If the peak value is greater than the peak-to-sidelobe ratio, introduce an online random fern classifier for re-detection; if the peak value is less than the peak-to-sidelobe ratio, update the position model of step 2 and the scale model of step 3; if the peak value of the response map is equal to t...
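
Steps 4 and 5 can be illustrated with a minimal Python sketch. The text does not define how the peak-to-sidelobe ratio is computed, so the sketch assumes the definition commonly used with correlation filters: the peak minus the mean of the sidelobe region, divided by the sidelobe standard deviation, where the sidelobe is everything outside a small window around the peak. The function names `peak_to_sidelobe_ratio` and `step5_decision` and the `exclude` parameter are hypothetical, chosen only for illustration. The branch for equal values is truncated in the text, so the sketch reports it as unspecified instead of guessing.

```python
import numpy as np


def peak_to_sidelobe_ratio(response, exclude=5):
    """Return (peak, psr) for a 2-D response map.

    Assumed definition (not given in the source text):
    psr = (peak - mean(sidelobe)) / std(sidelobe), where the sidelobe is
    every cell outside a (2*exclude+1)-wide window centred on the peak.
    """
    response = np.asarray(response, dtype=float)
    row, col = np.unravel_index(np.argmax(response), response.shape)
    peak = response[row, col]

    # Mask out the window around the peak; what remains is the sidelobe.
    mask = np.ones(response.shape, dtype=bool)
    r0, r1 = max(row - exclude, 0), min(row + exclude + 1, response.shape[0])
    c0, c1 = max(col - exclude, 0), min(col + exclude + 1, response.shape[1])
    mask[r0:r1, c0:c1] = False

    sidelobe = response[mask]
    if sidelobe.size == 0:
        raise ValueError("exclusion window covers the whole response map")

    # Small epsilon guards against a perfectly flat sidelobe.
    psr = (peak - sidelobe.mean()) / (sidelobe.std() + 1e-12)
    return peak, psr


def step5_decision(peak, psr):
    """Apply the comparison of step 5 exactly as the text states it."""
    if peak > psr:
        return "redetect"     # hand over to the online random fern classifier
    if peak < psr:
        return "update"       # update the position and scale models
    return "unspecified"      # the equal case is truncated in the source


if __name__ == "__main__":
    # Synthetic response map: low-amplitude checkerboard with one sharp peak.
    rows, cols = np.indices((21, 21))
    demo = np.where((rows + cols) % 2 == 1, 0.2, 0.0)
    demo[10, 10] = 1.0
    peak, psr = peak_to_sidelobe_ratio(demo)
    print(peak, psr, step5_decision(peak, psr))
```

In a real tracker the response map would come from the position filter of step 2, and the `"redetect"` and `"update"` outcomes would trigger the re-detection and model-update routines described in step 5.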
