Fall detection method based on video joint points and a hybrid classifier

A detection method using a hybrid classifier, applied to fall detection based on video joint points. It addresses problems such as models that are too simple or too complex and detection time that is difficult to reduce, with the aim of reducing detection time while achieving high accuracy.


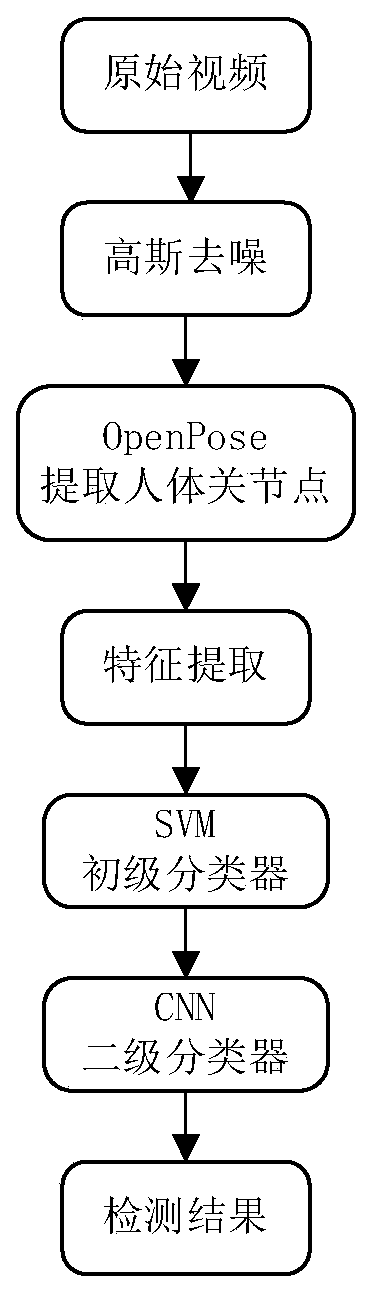


Examples

Embodiment 2

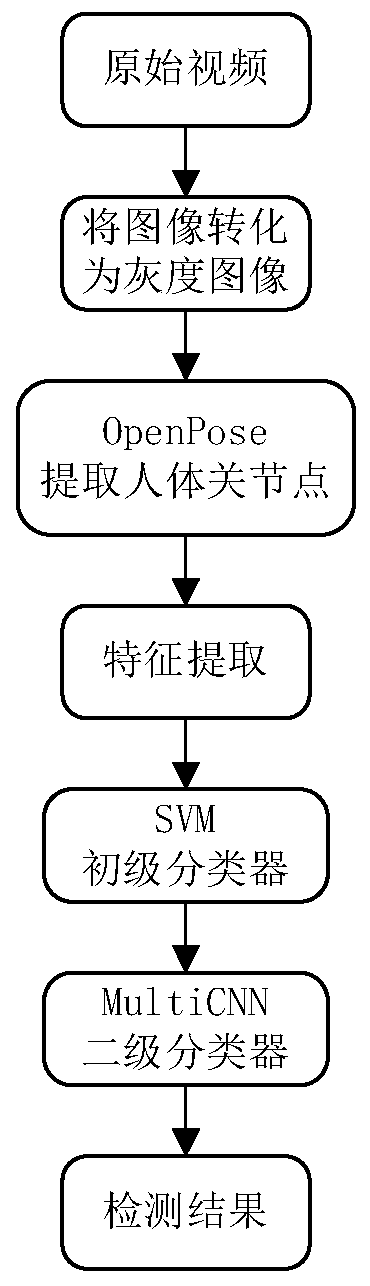

[0087] As shown in Figure 3, this embodiment is a fall detection method based on video joint points and a hybrid classifier. It differs from Embodiment 1 as follows:

[0088] In step 2, each frame of the video segment under detection is converted to grayscale instead of being Gaussian-denoised.
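The grayscale step can be illustrated with a minimal sketch. The source does not say how the conversion is done, so the ITU-R BT.601 luma weights, the RGB channel order, and the function names `to_grayscale` and `preprocess_segment` are all assumptions made for illustration.

```python
import numpy as np


def to_grayscale(frame_rgb):
    """Convert one H x W x 3 RGB frame to an H x W uint8 grayscale image.

    Assumption: BT.601 luma weights and RGB channel order. The patent text
    only states that grayscale processing replaces Gaussian denoising.
    """
    frame = np.asarray(frame_rgb, dtype=np.float64)
    weights = np.array([0.299, 0.587, 0.114])
    gray = frame @ weights  # weighted sum over the channel axis
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


def preprocess_segment(frames):
    """Apply grayscale conversion to every frame of a video segment."""
    return [to_grayscale(frame) for frame in frames]
```

Frames decoded with OpenCV arrive in BGR order, so under this sketch they would need their channels reversed before calling `to_grayscale`.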

[0089] In step 6, the secondary classifier differs from that of Embodiment 1. The secondary classifier in this embodiment is a multi-scale convolutional neural network (MultiCNN) comprising a convolutional layer, a pooling layer, and three fully connected layers, connected in sequence through the ReLU activation function. The padding parameter of the convolution is set to 'valid'. The convolutional layer has four convolution kernels of sizes 3×3, 5×5, 7×7, and 9×9; the pooling layer has four pooling operators of sizes 8×1, 6×1, 4×1, and 2×1; the 3×3, 5×5, 7×7, 9×9 convolution kernels and...
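To make the effect of 'valid' padding concrete, the following sketch computes the feature-map size each kernel scale produces, and the size each pooling operator produces. The 32×32 input, the 24×24 feature map, the stride of 1 for convolution, and the non-overlapping pooling stride are hypothetical choices; the source does not state them, and the sketch does not assume any particular pairing of kernels with pooling operators.

```python
KERNEL_SIZES = (3, 5, 7, 9)   # square kernel scales named in the text
POOL_HEIGHTS = (8, 6, 4, 2)   # pooling windows are (height x 1) in the text


def valid_conv_size(height, width, kernel, stride=1):
    """Output (height, width) of a square-kernel convolution with 'valid'
    padding, i.e. no zero padding around the input."""
    if kernel > height or kernel > width:
        raise ValueError("kernel larger than input")
    return ((height - kernel) // stride + 1, (width - kernel) // stride + 1)


def pool_size(height, width, pool_h, pool_w=1):
    """Output size of pooling with a pool_h x pool_w window.

    Assumption: the stride equals the window size (non-overlapping pooling).
    """
    return (height // pool_h, width // pool_w)


# Hypothetical 32 x 32 input: larger kernels shrink the map more.
conv_sizes = {k: valid_conv_size(32, 32, k) for k in KERNEL_SIZES}

# Hypothetical 24 x 24 feature map: p x 1 windows only shrink the height.
pool_sizes = {p: pool_size(24, 24, p) for p in POOL_HEIGHTS}
```

Because 'valid' padding shrinks the output by `kernel - 1` in each dimension, the four branches yield feature maps of different sizes, which is one plausible reason for using a different pooling window per scale.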
