Action prediction method based on multi-task random forest

The method applies a random forest to action prediction in the field of computer vision and addresses problems such as long processing time and low accuracy.

Embodiment Construction

[0053] The present invention will be further explained below in conjunction with specific embodiments, but the present invention is not limited thereto.

[0054] The present invention provides an action prediction method based on a multi-task random forest, comprising the following steps:

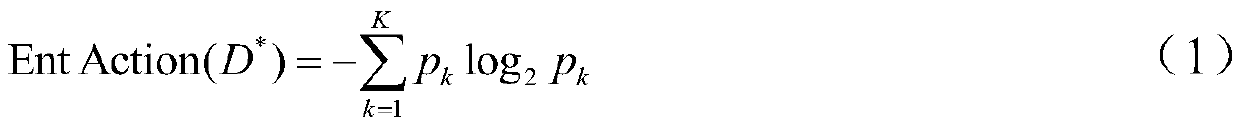

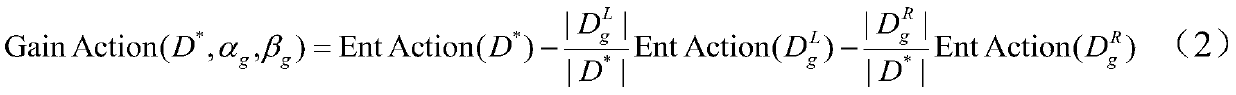

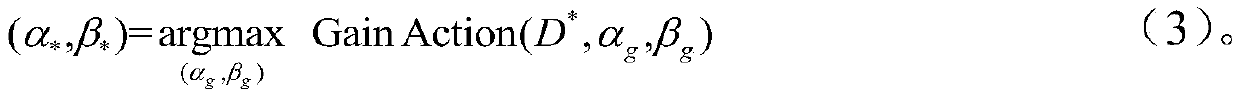

[0055] S1: Use training data to build an action prediction model based on a multi-task random forest, where the multi-task random forest is an ensemble learning model containing N multi-task decision trees.
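As an illustration of the ensemble structure described in S1, the following is a minimal sketch of a forest that holds N multi-task trees and combines their outputs. It assumes that each tree returns an action category together with an observation rate, and that the forest aggregates by majority vote and by averaging; the names `MultiTaskForest` and `stub_tree` and the aggregation rule are hypothetical and are not taken from the patent text.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Assumed per-tree output: (action category, observation rate).
TreePrediction = Tuple[int, float]
MultiTaskTree = Callable[[Sequence[float]], TreePrediction]


@dataclass
class MultiTaskForest:
    """Hypothetical container for N multi-task decision trees."""
    trees: List[MultiTaskTree]

    def predict(self, x: Sequence[float]) -> TreePrediction:
        if not self.trees:
            raise ValueError("forest has no trees")
        outputs = [tree(x) for tree in self.trees]
        # Assumed aggregation: majority vote over categories,
        # arithmetic mean over observation rates.
        votes = Counter(label for label, _ in outputs)
        action = votes.most_common(1)[0][0]
        rate = sum(r for _, r in outputs) / len(outputs)
        return action, rate


def stub_tree(threshold: float, low: int, high: int, rate: float) -> MultiTaskTree:
    """Stand-in for a trained tree: one fixed split on the first feature."""
    def tree(x: Sequence[float]) -> TreePrediction:
        return (high if x[0] > threshold else low, rate)
    return tree


# N = 3 stand-in trees; real trees would be learned from the training set.
forest = MultiTaskForest([
    stub_tree(0.2, 0, 1, 0.3),
    stub_tree(0.5, 0, 1, 0.5),
    stub_tree(0.9, 0, 2, 0.4),
])
action, rate = forest.predict([0.7, 0.1])
```

With the stand-in trees above, two of the three trees vote for category 1, so the forest returns that category along with the mean of the three observation rates.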

[0056] The steps to construct the multi-task random forest are as follows:

[0057] S11: Collect a training set $D$ containing $M$ incomplete videos. Each sample in the training set $D$ consists of the feature vector $x_m \in \mathbb{R}^{F \times 1}$ of an incomplete video, its action category label, and its observation rate label, where $F$ represents the number of features in the feature vector and $K$ represents the number of action categories; specifically, first collect a set of complete videos with action category l...
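The layout of the training set in S11 can be sketched as a small data structure. This is only an illustration under stated assumptions: action categories are indexed 0 to K-1, observation rates lie in (0, 1], and the names `Sample` and `check_training_set` are hypothetical. It does not reproduce how the patent derives incomplete videos from complete ones, since that part of the source text is cut off.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Sample:
    x: Sequence[float]  # feature vector of one incomplete video, length F
    action: int         # action category label (assumed range 0..K-1)
    obs_rate: float     # observation rate label (assumed range (0, 1])


def check_training_set(D: List[Sample], F: int, K: int) -> int:
    """Validate every sample and return M, the number of samples."""
    for m, s in enumerate(D):
        if len(s.x) != F:
            raise ValueError(f"sample {m}: expected {F} features, got {len(s.x)}")
        if not 0 <= s.action < K:
            raise ValueError(f"sample {m}: action label {s.action} outside 0..{K - 1}")
        if not 0.0 < s.obs_rate <= 1.0:
            raise ValueError(f"sample {m}: observation rate {s.obs_rate} outside (0, 1]")
    return len(D)


# Toy training set with F = 3 features and K = 3 action categories.
D = [
    Sample(x=(0.1, 0.4, 0.0), action=0, obs_rate=0.2),
    Sample(x=(0.3, 0.9, 0.5), action=2, obs_rate=0.6),
    Sample(x=(0.7, 0.2, 0.8), action=1, obs_rate=1.0),
]
M = check_training_set(D, F=3, K=3)
```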
