A Lane Line Extraction Method for Event Camera Based on Deep Learning

The method applies deep learning to lane line extraction in the field of image processing. It addresses the problems of poor imaging quality and difficult lane line extraction, and achieves low latency, high dynamic range, and fast, accurate lane line curve fitting.


Detailed Description of the Embodiments

[0027] To make the objective, technical solution, and effects of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings.

[0028] The present invention provides a deep-learning-based lane line extraction method for event cameras, comprising the following steps:

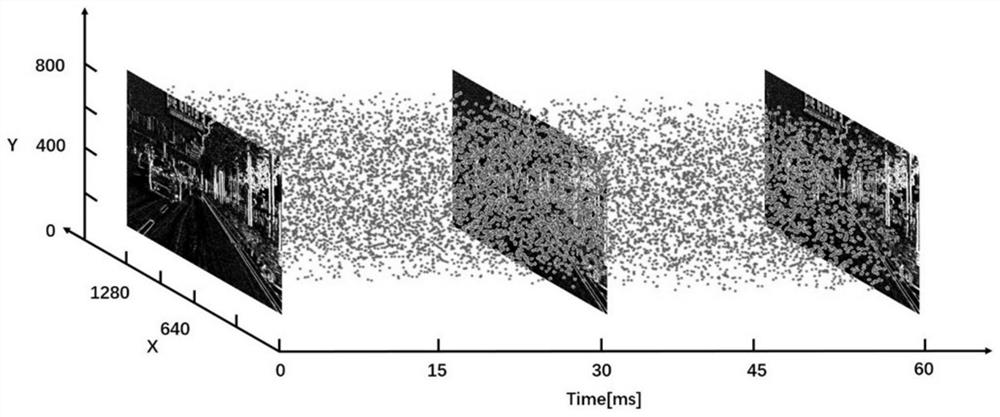

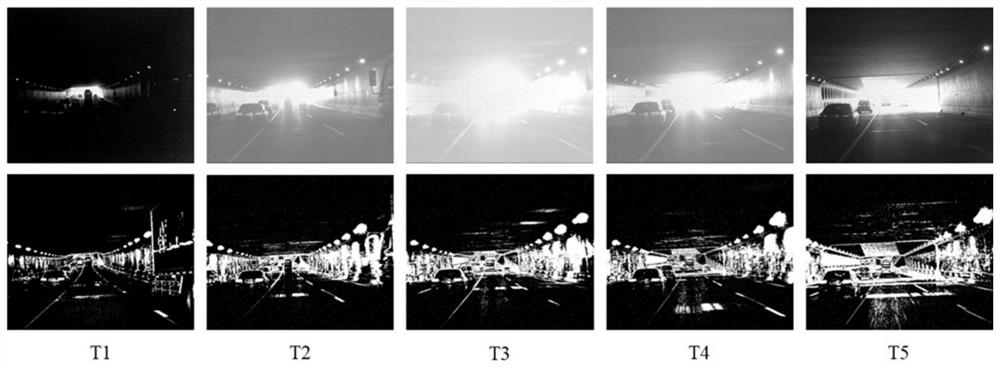

[0029] Step 1: Build image frames from the event stream generated by the dynamic vision sensor (DVS). A frame is generally built by accumulating the events that occur within a period of time and representing the result as a binary image, as shown in Figure 1.
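The frame-building idea in Step 1 can be illustrated with a minimal sketch. The `Event` layout, the function name `events_to_binary_frame`, the 10 ms window, and the choice to ignore polarity are all assumptions made for illustration; the source does not specify them.

```python
from typing import Iterable, List, NamedTuple


class Event(NamedTuple):
    """One DVS event (hypothetical layout, not taken from the source)."""
    x: int          # pixel column
    y: int          # pixel row
    t: float        # timestamp in seconds
    polarity: int   # +1 for a brightness increase, -1 for a decrease


def events_to_binary_frame(events: Iterable[Event], width: int, height: int,
                           t_start: float, duration: float) -> List[List[int]]:
    """Accumulate events in [t_start, t_start + duration) into a binary image."""
    frame = [[0] * width for _ in range(height)]
    t_end = t_start + duration
    for ev in events:
        in_window = t_start <= ev.t < t_end
        in_bounds = 0 <= ev.x < width and 0 <= ev.y < height
        if in_window and in_bounds:
            # Assumption: any event marks the pixel, regardless of polarity.
            frame[ev.y][ev.x] = 1
    return frame


# Toy event stream on a 4x3 sensor, accumulated over an assumed 10 ms window.
events = [
    Event(x=1, y=0, t=0.001, polarity=1),
    Event(x=2, y=1, t=0.004, polarity=-1),
    Event(x=1, y=0, t=0.006, polarity=1),   # same pixel again; it stays 1
    Event(x=3, y=2, t=0.020, polarity=1),   # falls outside the window
]
frame = events_to_binary_frame(events, width=4, height=3,
                               t_start=0.0, duration=0.010)
for row in frame:
    print(row)
```

A longer accumulation window yields denser frames at the cost of latency, so the window length is a tuning choice rather than a fixed value.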

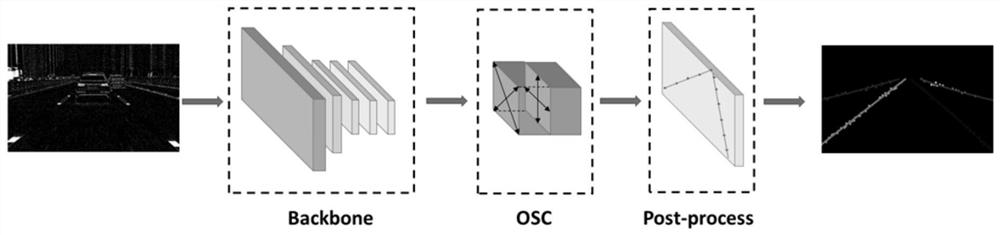

[0030] Step 2: Feed the generated DVS images and their corresponding semantic labels into the structural-prior-based network for supervised training. The network consists of a base network and an omnidirectional slice convolution module; the base network extracts semantic information through convolution and pooling. The ...
