First-person perspective video interaction behavior identification method based on interaction modeling

A first-person video interaction technology, applied in character and pattern recognition, neural learning methods, biological neural network models, and related fields. It addresses the limitation that existing models cannot describe interactive behavior well.


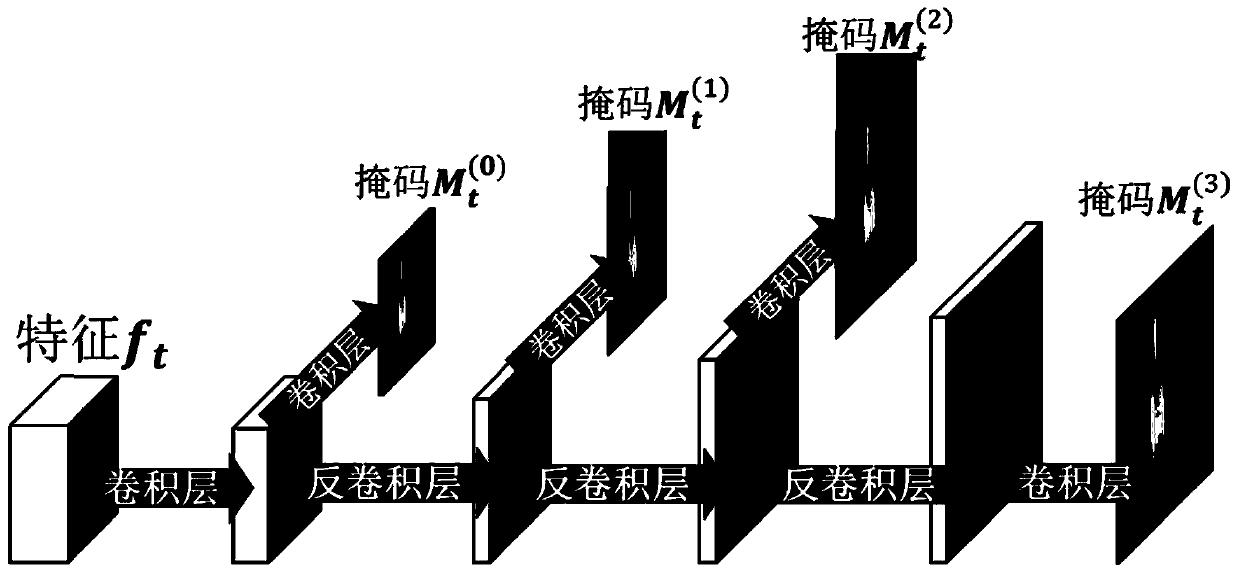

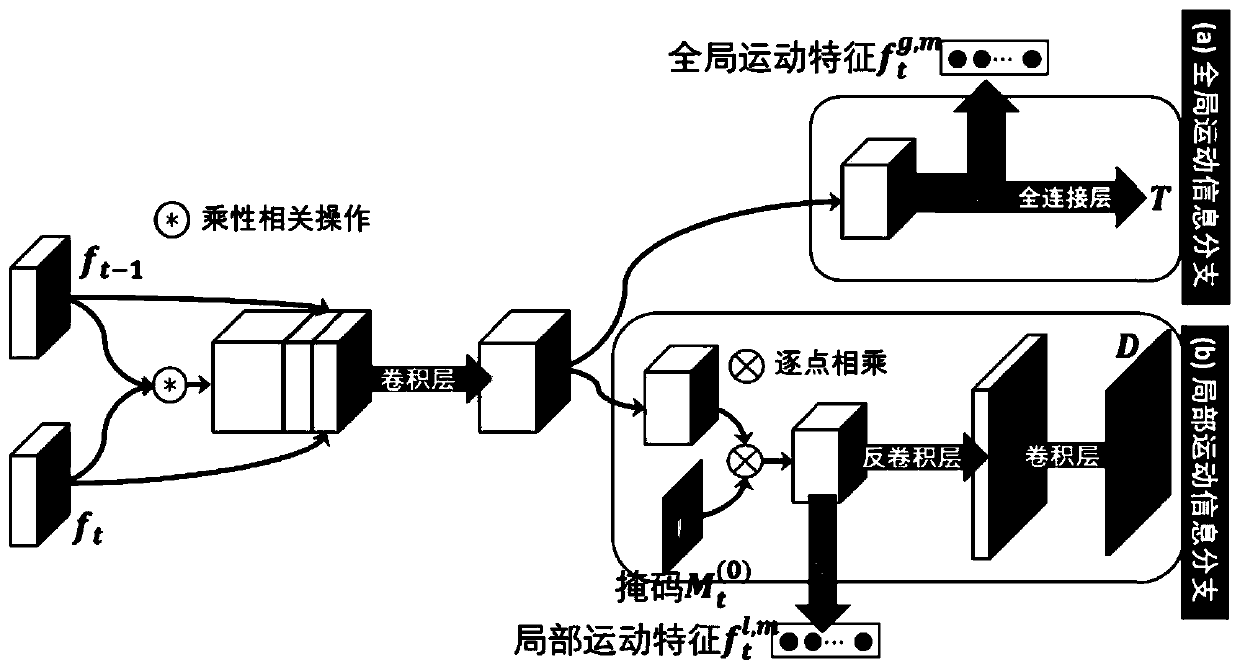

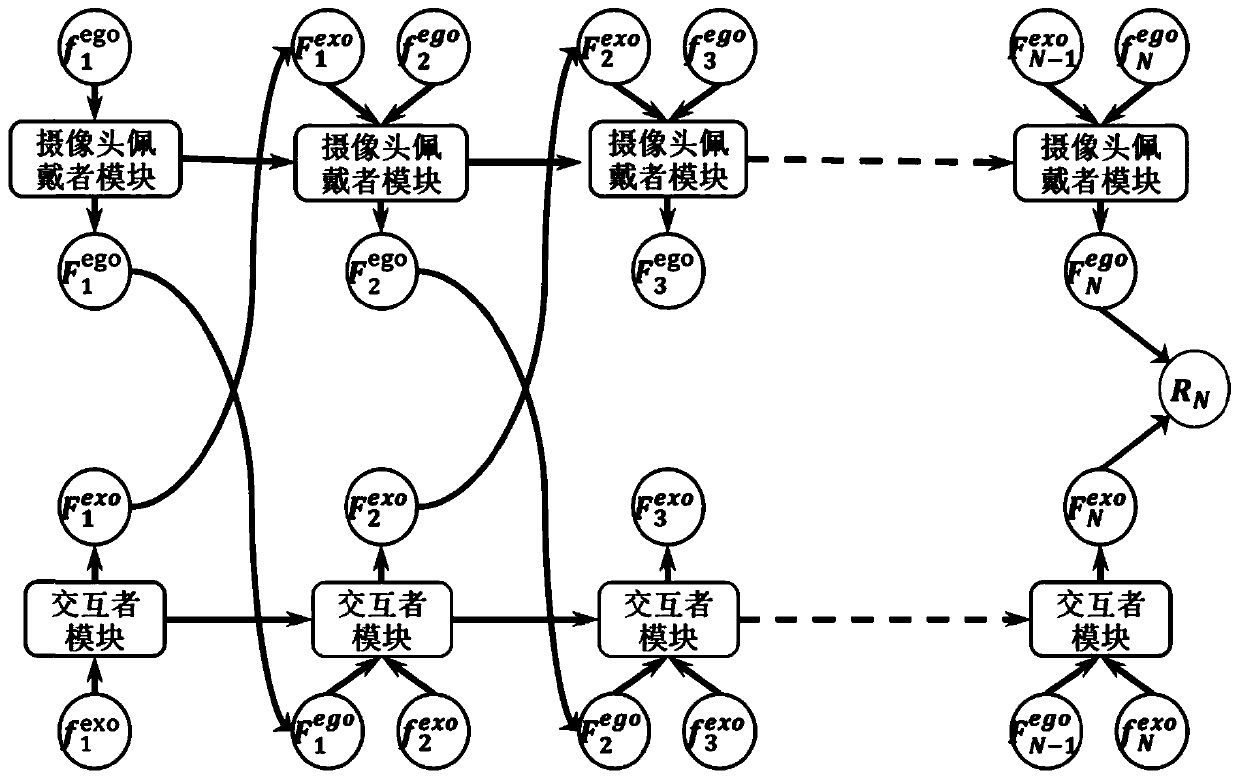

[0059] The problem to be solved by the present invention is as follows: given a video clip, an intelligent video analysis system must identify the behavior category of the people in the video. In an intelligent video analysis system based on a wearable device, the camera is worn by a person, so the video is captured from a first-person perspective, and the system must identify the type of interaction between the camera wearer and other people. Current first-person interactive behavior recognition methods largely follow third-person behavior recognition methods. They learn features directly from the overall static appearance and dynamic motion information of the video, without explicitly separating the camera wearer from the people they interact with or modeling the relationship between them. The present invention focuses on the first-person perspective interactive behavior recognition problem...
