Action recognition device and action recognition method

A technology for action recognition devices and methods, applied in fields such as character and pattern recognition, instruments, and computing, which can solve problems such as inability to recognize


Examples

First embodiment

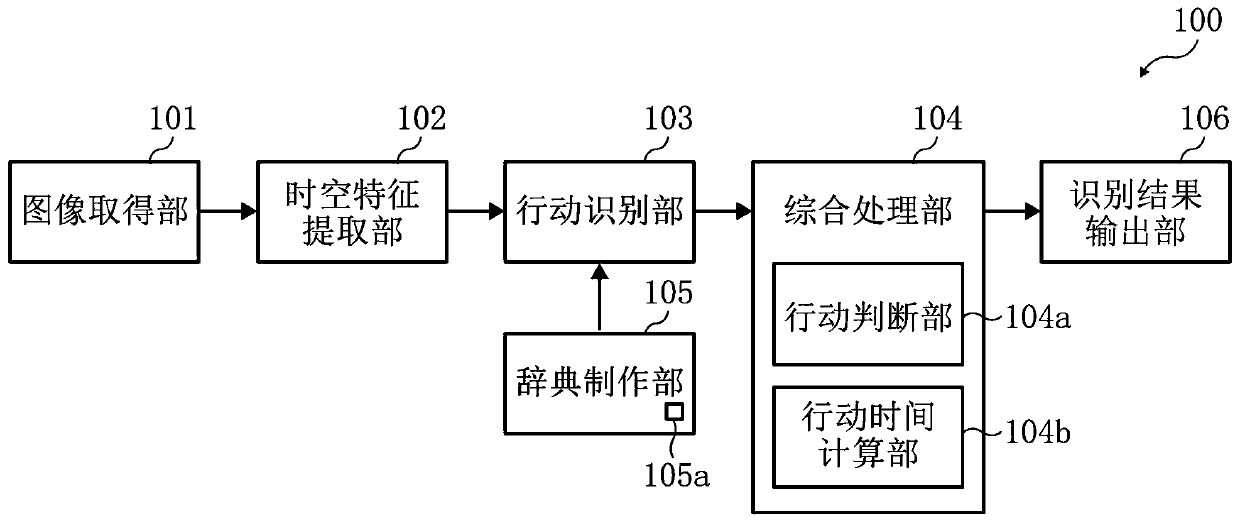

[0033] Figure 1 is a functional configuration block diagram of the action recognition device according to the first embodiment.

[0034] The action recognition device 100 in this embodiment includes an image acquisition unit 101, a spatiotemporal feature extraction unit 102, an action recognition unit 103, an integrated processing unit 104, a dictionary creation unit 105, and a recognition result output unit 106.

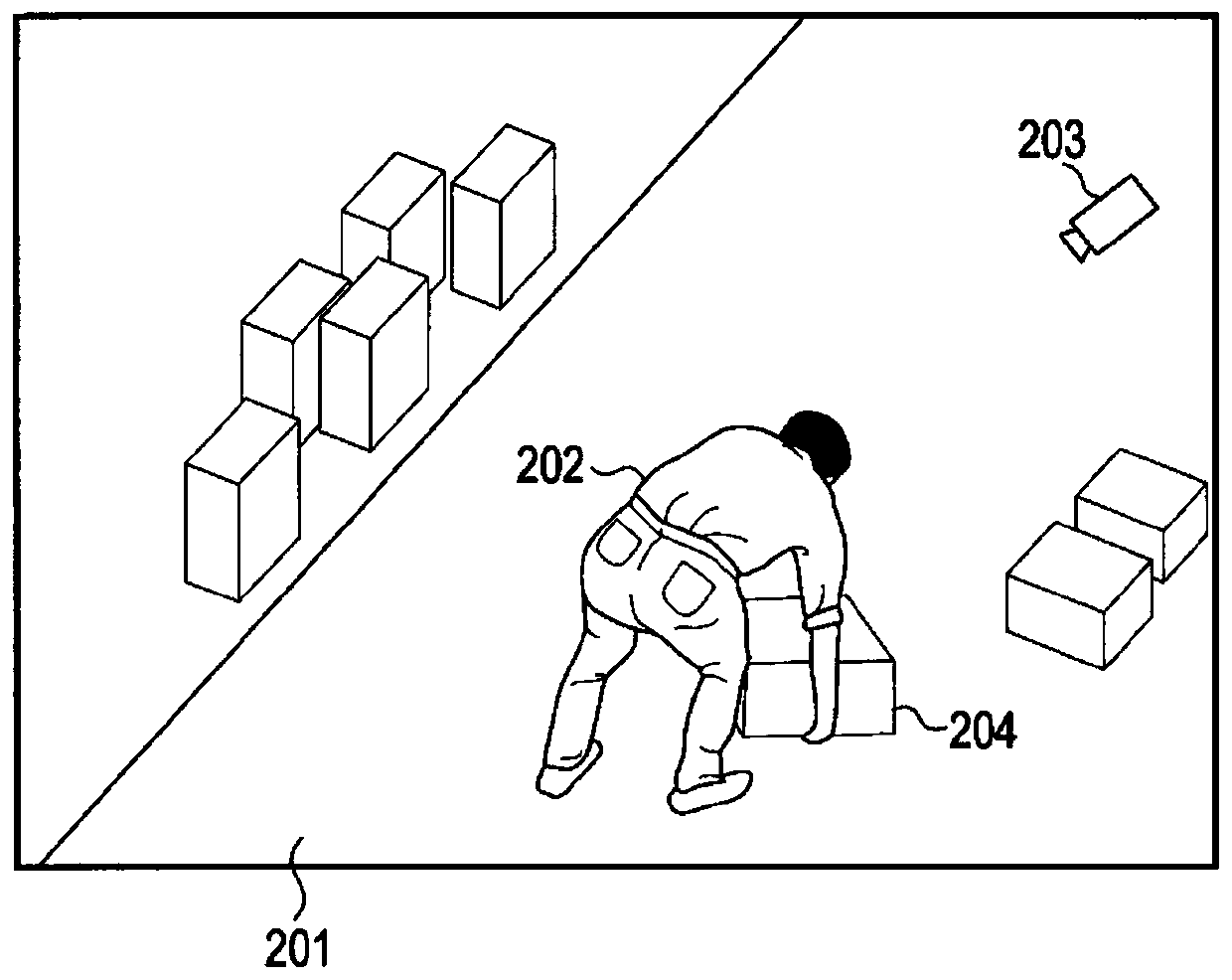

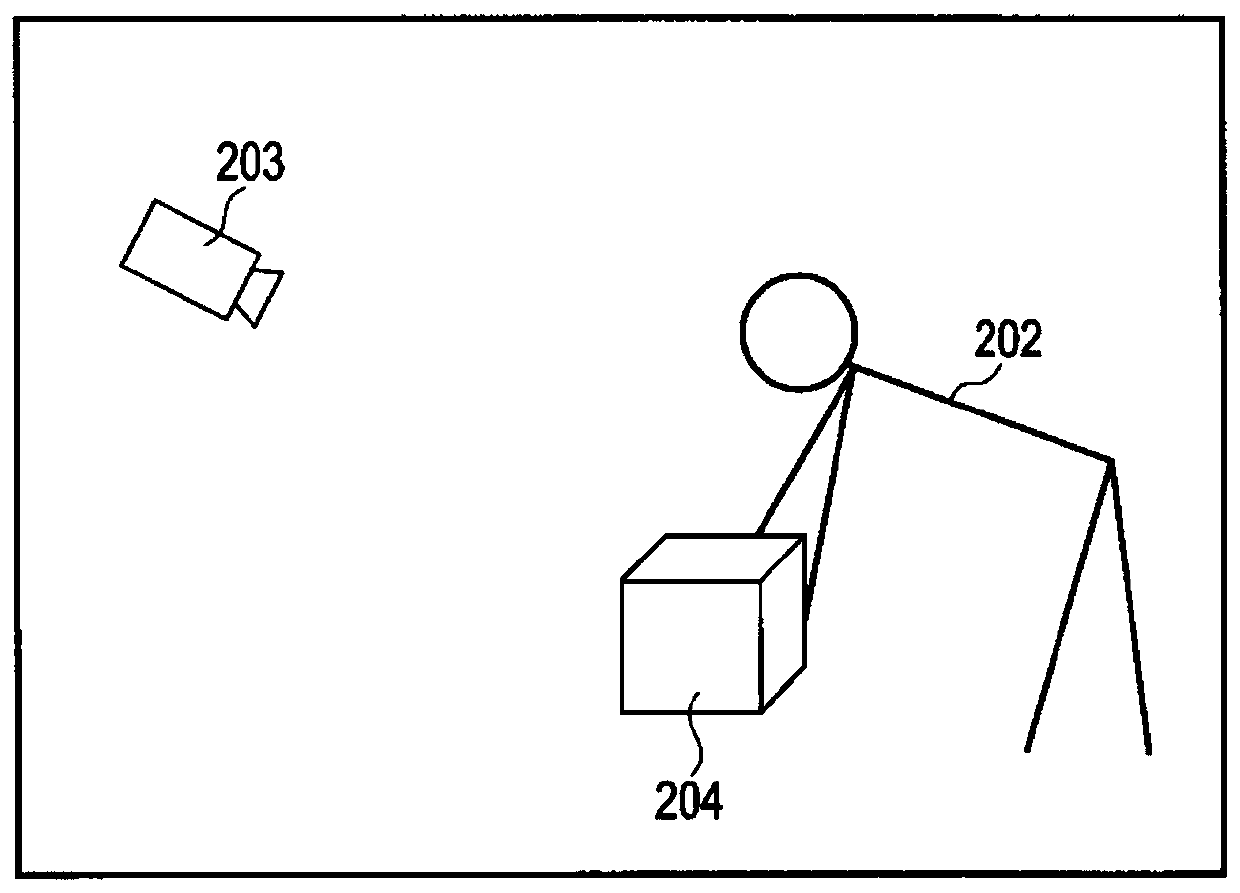

[0035] The image acquisition unit 101 acquires, in real time or offline, images from a camera 203 installed in a workplace 201, for example as shown in Figure 2. The camera 203 may be installed at any location, as long as it can capture the actions of the worker 202 while working in the workplace 201. The images from the camera 203 can be sent directly from the camera 203 to the action recognition device 100, for example over a wired or wireless connection, or can be transmitted to the action recognition device 100 through a reco...

Second embodiment

[0109] The second embodiment adds a condition to the first embodiment described above: the integrated processing is carried out using, as a judgment criterion, whether the reliability P of an element action is higher than a preset threshold value Thre.

[0110] The basic configuration of the action recognition device 100 in this embodiment is the same as that of the first embodiment shown in Figure 1. In the second embodiment, the action determination unit 104a of the integrated processing unit 104 has a function of comparing the reliability P of each element action frame by frame, and determining, as the target work action, the element action whose reliability P is higher than the threshold value Thre and is the highest. The processing operation of the second embodiment will be described in detail below.

[0111] Figure 15 is a flowchart for explaining the integrated processing operation performed by the integrated processing unit 104 ...
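The frame-by-frame selection rule of the second embodiment can be illustrated with a minimal Python sketch. The function name `select_target_action`, the dictionary representation of per-frame reliabilities, and the choice to return `None` when no element action exceeds the threshold are all assumptions made for illustration; the text above does not state what the device does in that case.

```python
from typing import Dict, Optional


def select_target_action(reliabilities: Dict[str, float], thre: float) -> Optional[str]:
    """Pick the element action for one frame.

    reliabilities maps a (hypothetical) element action label to its
    reliability P for this frame; thre is the preset threshold Thre.
    """
    if not reliabilities:
        return None
    # Element action with the highest reliability P in this frame.
    best = max(reliabilities, key=lambda action: reliabilities[action])
    # Accept it only if its reliability is strictly higher than Thre.
    # Returning None otherwise is an assumption, not taken from the source.
    return best if reliabilities[best] > thre else None


# Hypothetical per-frame reliabilities for two element actions.
frames = [
    {"walk": 0.40, "tighten_screw": 0.70},
    {"walk": 0.45, "tighten_screw": 0.30},
]
per_frame_result = [select_target_action(frame, thre=0.5) for frame in frames]
```

With these example values, the first frame yields an accepted element action and the second yields no decision, because no reliability in it exceeds the threshold.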

Third embodiment

[0131] In the third embodiment, a judgment time Tw for judging an element action is provided, and the element action is judged at intervals of the judgment time Tw.

[0132] The basic configuration of the action recognition device 100 is the same as that of the first embodiment shown in Figure 1. In the third embodiment, the action determination unit 104a of the integrated processing unit 104 has a function of comparing the reliability P of each element action within the judgment time Tw, and determining the element action with the highest reliability P as the target work action. The processing operation of the third embodiment will be described in detail below.

[0133] Figure 16 is a flowchart for explaining the integrated processing operation performed by the integrated processing unit 104 of the action recognition device 100 in the third embodiment. The integrated processing shown in this flowchart is executed in step S27 of Figure 13.
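One possible reading of the interval-based judgment can be sketched in Python as follows. This is only an illustration under stated assumptions: Tw is expressed as a number of frames, the frame sequence is split into consecutive non-overlapping windows, and the winner of a window is the element action with the single highest reliability P observed in it. The paragraph above does not specify how reliabilities are combined within Tw, and the name `judge_by_interval` is hypothetical.

```python
from typing import Dict, List, Optional


def judge_by_interval(frames: List[Dict[str, float]], tw: int) -> List[Optional[str]]:
    """Return one judged element action per window of tw frames."""
    if tw <= 0:
        raise ValueError("tw must be a positive number of frames")
    results: List[Optional[str]] = []
    # Walk the sequence in consecutive, non-overlapping windows of length tw
    # (the last window may be shorter).
    for start in range(0, len(frames), tw):
        best_action: Optional[str] = None
        best_p = float("-inf")
        for frame in frames[start:start + tw]:
            for action, p in frame.items():
                # Assumption: the highest single reliability in the window wins.
                if p > best_p:
                    best_action, best_p = action, p
        results.append(best_action)
    return results


# Hypothetical reliabilities for four frames, judged every two frames.
example = [
    {"a": 0.2, "b": 0.6},
    {"a": 0.9, "b": 0.1},
    {"a": 0.3, "b": 0.4},
    {"a": 0.1, "b": 0.5},
]
judged = judge_by_interval(example, tw=2)
```

Judging once per window rather than once per frame trades temporal resolution for stability; other aggregations, such as averaging P over the window, would fit the same structure.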

[013...
