Deep hash method based on metric learning

A deep hashing technique based on metric learning, applied in the field of computer vision and image processing. It addresses problems such as failing to encourage matching signs, misjudgment, and poorly discriminative hash codes, and achieves fast and accurate image retrieval, accurate hash coding, and small Hamming distance.

Detailed Description of Embodiments

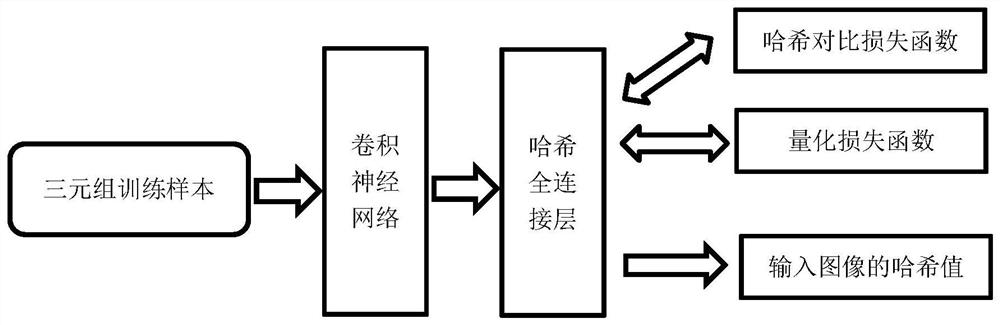

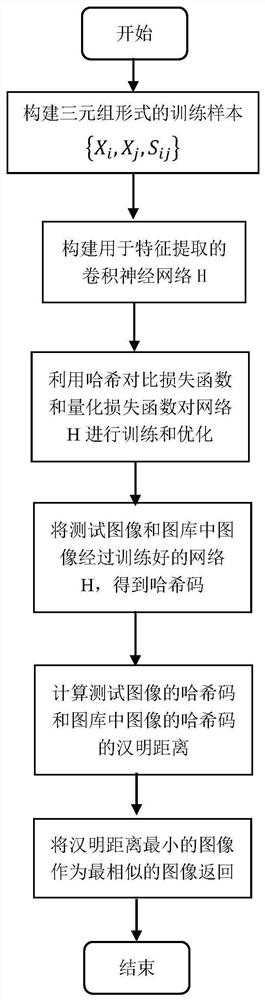

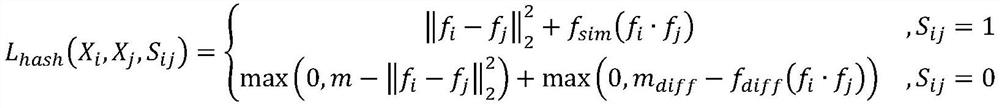

[0026] This embodiment is described with reference to Figure 1 and Figure 2. The deep hashing method based on metric learning is implemented by the following steps:

[0027] 1. Construct training samples in the form of triplets;

[0028] Input data: each training input is a triplet consisting of two images and the label relationship between them:

[0029] $\{X_i, X_j, S_{ij}\}$

[0030] Here $X$ denotes an image, and $S_{ij}$ denotes the label relationship between images $X_i$ and $X_j$: it is 1 if the two images belong to the same category and 0 if they belong to different categories, that is:

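[0031] $$S_{ij} = \begin{cases} 1, & \text{if } X_i \text{ and } X_j \text{ belong to the same category} \\ 0, & \text{otherwise} \end{cases}$$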
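
The triplet construction described in step 1 can be sketched in Python as follows. This is a minimal illustration rather than the patent's implementation: the function name `make_triplets`, the integer class labels, the file names, and the choice to enumerate every unordered pair are all assumptions made for the example, since the source does not say how pairs are sampled.

```python
from itertools import combinations


def make_triplets(images, labels):
    """Build (X_i, X_j, S_ij) triplets from images and their class labels.

    S_ij is 1 when the two images share a category and 0 otherwise.
    Enumerating every unordered pair is an assumption for illustration;
    the source does not specify a sampling strategy.
    """
    if len(images) != len(labels):
        raise ValueError("images and labels must have the same length")
    triplets = []
    for i, j in combinations(range(len(images)), 2):
        s_ij = 1 if labels[i] == labels[j] else 0
        triplets.append((images[i], images[j], s_ij))
    return triplets


# Hypothetical file names and labels, used only to show the output shape.
images = ["cat_1.png", "cat_2.png", "dog_1.png"]
labels = [0, 0, 1]
triplets = make_triplets(images, labels)
```

Here the two cat images form a pair with $S_{ij} = 1$, while each cat/dog pair gets $S_{ij} = 0$.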

[0032] Before being fed into the neural network for feature extraction, the images $X_i$ and $X_j$ in the input triplet are scaled (resize) and cropped (crop) so that they have the same dimensions: each image is scaled to 256 × 256 pixels, and a 227 × 227 pixel region is then randomly cropped from it.
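
The resize-then-crop preprocessing above might look like the following sketch. It uses only the Python standard library and makes several assumptions not stated in the source: images are 2-D grayscale arrays stored as lists of rows, scaling uses nearest-neighbour sampling, and the crop offset is drawn uniformly. The helper names `resize_nearest`, `random_crop`, and `preprocess` are hypothetical.

```python
import random


def resize_nearest(image, out_h=256, out_w=256):
    """Resize a 2-D image (list of rows) with nearest-neighbour sampling.

    The interpolation method is an assumption; the source only gives the size.
    """
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def random_crop(image, crop_h=227, crop_w=227, rng=random):
    """Cut a crop_h x crop_w window at a uniformly random offset."""
    h, w = len(image), len(image[0])
    if crop_h > h or crop_w > w:
        raise ValueError("crop size exceeds image size")
    top = rng.randint(0, h - crop_h)    # randint is inclusive on both ends
    left = rng.randint(0, w - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def preprocess(image, rng=random):
    """Scale to 256 x 256, then randomly crop to 227 x 227."""
    return random_crop(resize_nearest(image), rng=rng)


# Two hypothetical grayscale inputs of different sizes.
x_i = [[(r + c) % 256 for c in range(320)] for r in range(240)]
x_j = [[(r * c) % 256 for c in range(500)] for r in range(375)]
rng = random.Random(0)
out_i = preprocess(x_i, rng)
out_j = preprocess(x_j, rng)
```

Whatever their original sizes, both outputs end up with the same 227 × 227 shape, which is the property the preprocessing step is meant to guarantee.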

[0033] 2. Build a deep neural network;

[0034] Referring to the existing deep convolutional n...
