Style transfer method for music with human voice

A music style transfer technology, applied in the field of data processing, that addresses problems such as unsatisfactory transfer results and the inability to achieve the number of cover songs by singers.

Embodiment Construction

[0056] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments:

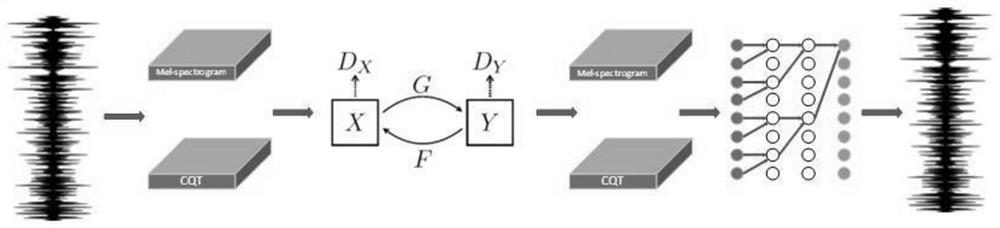

[0057] The invention provides a style transfer method for music with human voice, based on CycleGAN and a WaveNet decoder, to address a problem in the current field of music style conversion: most image style transfer algorithms perform poorly on music style transfer, and they perform even worse on music with vocals.
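As an illustration of the CycleGAN component named in [0057], the following is a minimal sketch of the cycle-consistency term that CycleGAN-style models generally use: mapping a sample to the other domain and back should reproduce the input. The function names, the toy generators, and the use of plain Python lists in place of spectrogram tensors are assumptions made for this sketch; the excerpt does not give the patent's actual networks or loss weights.

```python
from typing import Callable, Sequence, List

Vector = Sequence[float]
Generator = Callable[[Vector], List[float]]


def l1_distance(a: Vector, b: Vector) -> float:
    """Mean absolute difference between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x_a: Vector, x_b: Vector,
                           g_ab: Generator, g_ba: Generator) -> float:
    """L1 cycle loss: A -> B -> A should return x_a, and B -> A -> B should return x_b."""
    forward = l1_distance(g_ba(g_ab(x_a)), x_a)
    backward = l1_distance(g_ab(g_ba(x_b)), x_b)
    return forward + backward


# Hypothetical stand-ins for the two generators (real ones would be neural networks).
def toy_g_ab(x: Vector) -> List[float]:
    return [2.0 * v for v in x]


def toy_g_ba_exact(x: Vector) -> List[float]:
    # Exact inverse of toy_g_ab, so the cycle loss is zero.
    return [0.5 * v for v in x]


def toy_g_ba_biased(x: Vector) -> List[float]:
    # Imperfect inverse, so the cycle loss is positive.
    return [0.5 * v + 1.0 for v in x]


loss_exact = cycle_consistency_loss([1.0, 2.0], [4.0, 6.0], toy_g_ab, toy_g_ba_exact)
loss_biased = cycle_consistency_loss([1.0, 2.0], [4.0, 6.0], toy_g_ab, toy_g_ba_biased)
```

In a full model this term would be added to the adversarial losses of the two discriminators; it is what allows training on unpaired examples from the two styles.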

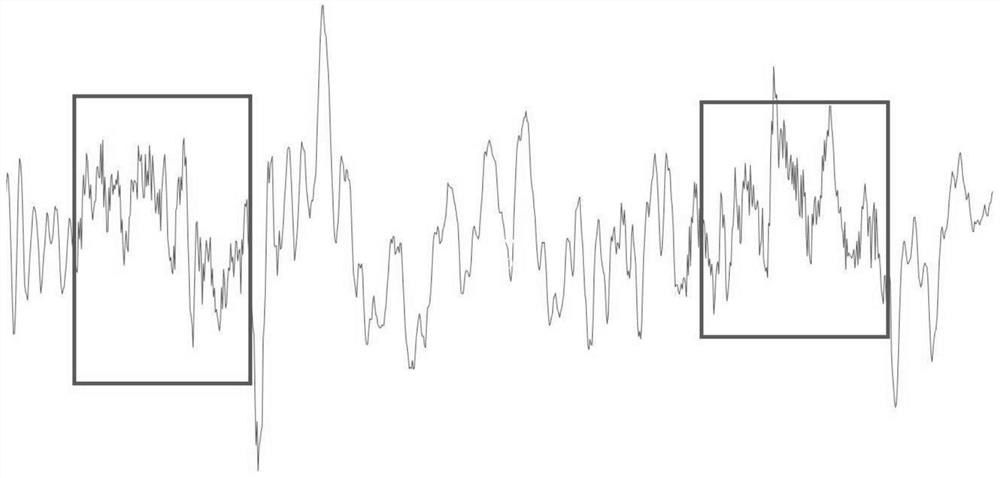

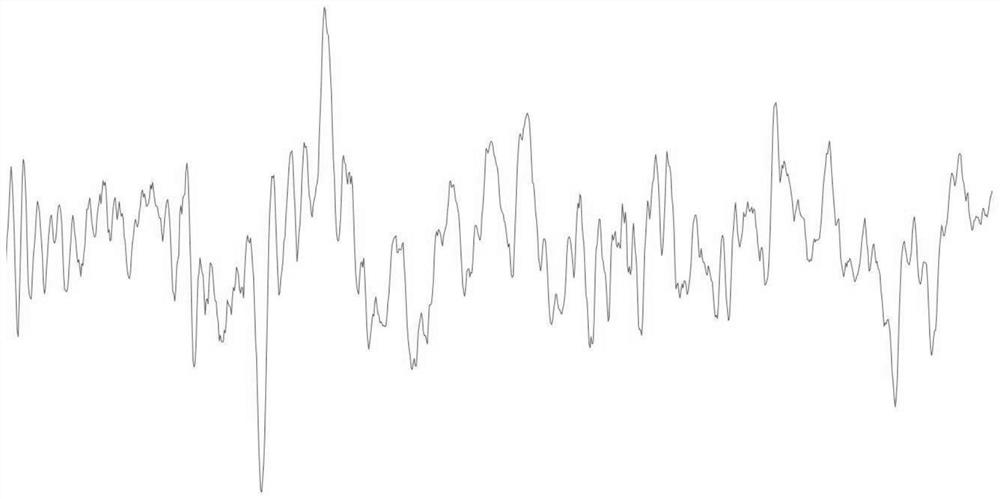

[0058] The overall model architecture of the present invention is shown in Figure 1. The audio image with human voice generated by deconvolution is shown in Figure 2, and the audio image with human voice generated using nearest-neighbor interpolation is shown in Figure 3. The algorithm of the invention has been tested in a computer programming environment, and the experiments verified its correctness and feasibility. The specific configuratio...
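To make the two upsampling options compared in [0058] concrete, here is a one-dimensional sketch of deconvolution (transposed convolution) next to nearest-neighbor interpolation followed by an ordinary convolution. The excerpt does not say why the two are compared; a commonly cited difference is that a strided transposed convolution overlaps its kernel unevenly, which can produce periodic "checkerboard" patterns, and that is what this sketch demonstrates. All function names, the all-ones kernel, and the stride of 2 are assumptions chosen for illustration.

```python
from typing import List, Sequence


def transposed_conv1d(x: Sequence[float], kernel: Sequence[float], stride: int) -> List[float]:
    """Each input sample stamps a scaled copy of the kernel into the output, `stride` apart."""
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, value in enumerate(x):
        for j, weight in enumerate(kernel):
            out[i * stride + j] += value * weight
    return out


def nearest_upsample1d(x: Sequence[float], factor: int) -> List[float]:
    """Nearest-neighbor interpolation: repeat every sample `factor` times."""
    return [value for value in x for _ in range(factor)]


def conv1d_valid(x: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Stride-1 sliding-window sum of products, without padding."""
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k)) for i in range(len(x) - k + 1)]


signal = [1.0, 1.0, 1.0]   # constant input, so any variation in the output is an artifact
kernel = [1.0, 1.0, 1.0]

# Kernel size 3 with stride 2: neighboring stamps overlap on every second output sample.
deconv_out = transposed_conv1d(signal, kernel, stride=2)

# Upsample first, then convolve: every output sample sees the same number of inputs.
resize_out = conv1d_valid(nearest_upsample1d(signal, factor=2), kernel)
```

With a constant input, `deconv_out` alternates between values in its interior while `resize_out` stays flat, which is the kind of difference one would look for when comparing the images in Figures 2 and 3.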
