Semantic segmentation method in autonomous driving scenarios based on BiSeNet

A semantic segmentation and autonomous driving technology, applied in scene recognition, combustion engines, internal combustion piston engines, etc. It addresses the problem of time-consuming processing and achieves a small model size, good convergence, and high accuracy.


Embodiment Construction

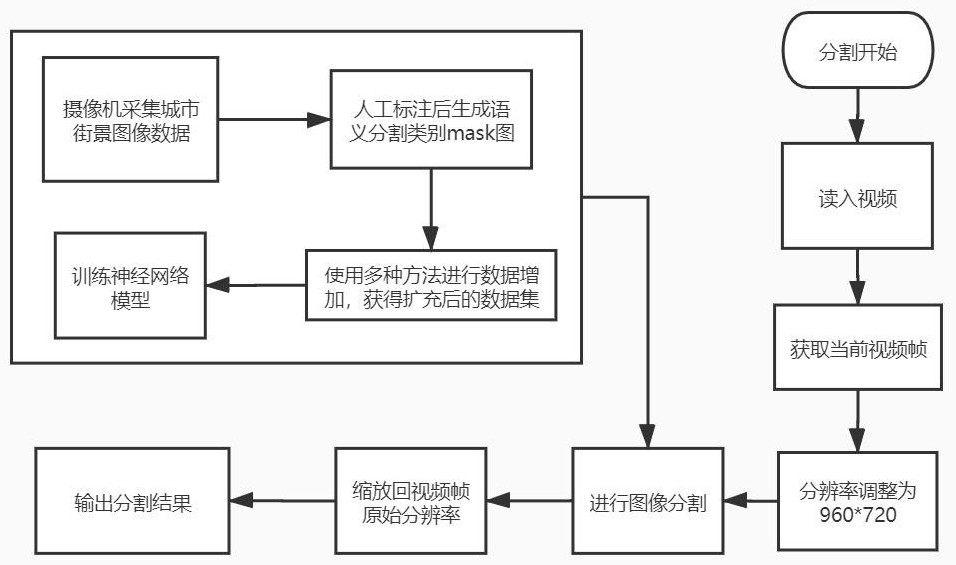

[0046] The present invention will be further described below with reference to the accompanying drawings and embodiments.

[0047] Referring to Figure 1, the present invention provides a BiSeNet-based semantic segmentation method for autonomous driving scenarios, comprising the following steps:

[0048] Step S1: collect urban street image data and preprocess it;

[0049] Step S2: label the preprocessed image data to obtain labelled image data;

[0050] Step S3: perform data augmentation on the labelled image data, and use the augmented image data as the training set;

[0051] Step S4: build a BiSeNet neural network model and train it on the training set;

[0052] Step S5: preprocess the video captured by the camera, and use the trained BiSeNet neural network model to perform semantic segmentation of the city streets in the camera's field of view.
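Steps S3 and S5 do not specify which augmentation or preprocessing operations are used. As a hedged illustration only, the sketch below assumes NumPy, horizontal flipping and random cropping as the augmentations, ImageNet mean/std normalization for camera frames, and a network that outputs one score map per class. None of these choices comes from the patent, and the function names `augment_pair`, `preprocess_frame`, and `logits_to_labels` are hypothetical. The main point it shows is that geometric augmentation of a segmentation sample must apply identical parameters to the image and its label mask, so that every pixel keeps its class label.

```python
import numpy as np

# Assumed normalization constants (ImageNet RGB statistics); the patent does not
# say which values, if any, are used.
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def augment_pair(image, mask, crop_size, rng):
    """Randomly flip and crop an image and its label mask with the SAME parameters.

    image: (H, W, 3) uint8 array; mask: (H, W) integer class-ID array.
    crop_size: (crop_h, crop_w); rng: a numpy.random.Generator.
    """
    if image.shape[:2] != mask.shape:
        raise ValueError("image and mask must have the same height and width")
    ch, cw = crop_size
    h, w = mask.shape
    if ch > h or cw > w:
        raise ValueError("crop size exceeds image size")
    # Horizontal flip with probability 0.5, applied to both arrays together.
    if rng.random() < 0.5:
        image = image[:, ::-1]
        mask = mask[:, ::-1]
    # Random crop taken at the same offset in both arrays.
    top = rng.integers(0, h - ch + 1)
    left = rng.integers(0, w - cw + 1)
    image = image[top:top + ch, left:left + cw]
    mask = mask[top:top + ch, left:left + cw]
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)


def preprocess_frame(frame):
    """Scale an (H, W, 3) uint8 RGB frame to [0, 1], normalize per channel,
    and return a channels-first (3, H, W) float32 array."""
    x = frame.astype(np.float32) / 255.0
    x = (x - MEAN) / STD  # broadcasts over the last (channel) axis
    return x.transpose(2, 0, 1)


def logits_to_labels(logits):
    """Convert (num_classes, H, W) network scores into an (H, W) class-ID map."""
    return np.argmax(logits, axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mask = np.arange(24).reshape(4, 6)
    image = np.stack([mask] * 3, axis=-1).astype(np.uint8)
    img_aug, mask_aug = augment_pair(image, mask, (2, 3), rng)
    print(img_aug.shape, mask_aug.shape)
```

Photometric augmentations such as brightness or contrast jitter, by contrast, would be applied to the image alone, because they change pixel values without moving pixels relative to the mask.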

[0053] Further, step S1 specifically comprises:

[0054] Step S11: analyze the categories that...

