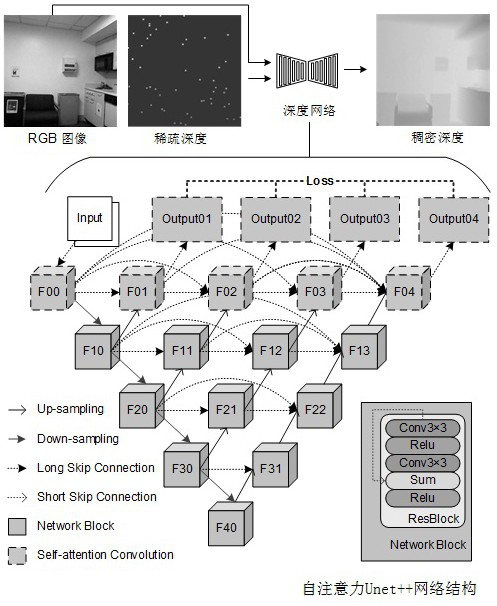

Real-time dense depth estimation method based on sparse measurement and monocular RGB image

An RGB image and depth estimation technology, applied in image enhancement, image analysis, image data processing, etc. It addresses the problems of limited computing and storage resources, the difficulty of adopting computationally intensive algorithms, and insufficient utilization of sparse information, and achieves the effects of maximizing task performance, reducing differences, and improving convergence speed.

Embodiment Construction

[0029] The present invention will be further explained below in conjunction with the accompanying drawings and specific embodiments. It should be understood that the following specific embodiments are only used to illustrate the present invention and are not intended to limit the scope of the present invention.

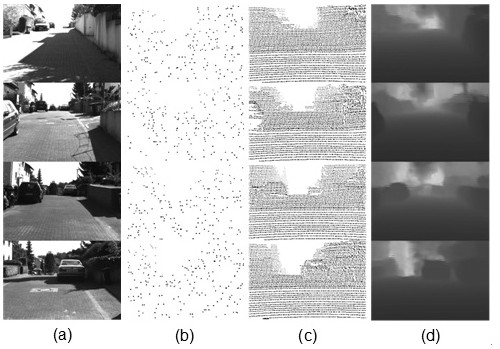

[0030] The present invention uses the indoor data set NYU-Depth-v2 and the outdoor data set KITTI as experimental data sets to verify the proposed method of real-time dense depth estimation based on sparse measurements and monocular RGB images. The experimental platform includes PyTorch 0.4.1, Python 3.6, Ubuntu 16.04 and an NVIDIA Titan V GPU. The NYU-Depth-v2 dataset is composed of high-quality 480×640 RGB and depth data collected by Kinect. According to the official split of the data, there are 249 scenes containing 26331 pictures for training, and 215 scenes containing 654 pictures for testing. The KITTI odometry dataset consists of 22 sequences, including camera and ...
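As an illustration only, the following minimal sketch shows one common way to simulate sparse measurements from a dense depth map such as those in NYU-Depth-v2. The text above does not state how the sparse measurements are obtained, so the uniform random sampling, the use of zero to mark "no measurement", and the function name `sample_sparse_depth` are all assumptions made for this example, not the method of the invention.

```python
import random


def sample_sparse_depth(dense, num_samples, seed=0):
    """Keep `num_samples` randomly chosen valid depths; set all others to 0.

    Hypothetical helper: uniform random sampling is an assumption,
    not the sampling scheme described in the patent.
    """
    rng = random.Random(seed)
    height, width = len(dense), len(dense[0])
    # Pixels with depth <= 0 are treated as missing sensor readings (assumption).
    valid = [(r, c) for r in range(height) for c in range(width) if dense[r][c] > 0]
    chosen = rng.sample(valid, min(num_samples, len(valid)))
    sparse = [[0.0] * width for _ in range(height)]
    for r, c in chosen:
        sparse[r][c] = dense[r][c]
    return sparse


# Toy 4x6 "dense" depth map in metres, standing in for a 480x640 frame.
dense = [[1.0 + 0.1 * (r * 6 + c) for c in range(6)] for r in range(4)]
dense[0][0] = 0.0  # one invalid pixel, as often occurs in Kinect depth data

sparse = sample_sparse_depth(dense, num_samples=5)
kept = sum(1 for row in sparse for d in row if d > 0)
print(kept)
```

In a setup like this, the sparse map would typically be supplied to the network together with the RGB image, while the full dense map serves as the training target; whether the invention combines them in this way is not stated in the available text.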
