Traffic scheduling method and device, electronic equipment and storage medium

A flow scheduling and flow technology, applied in the field of network communication, can solve problems such as high server utilization, reduced service capacity, and low server performance

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

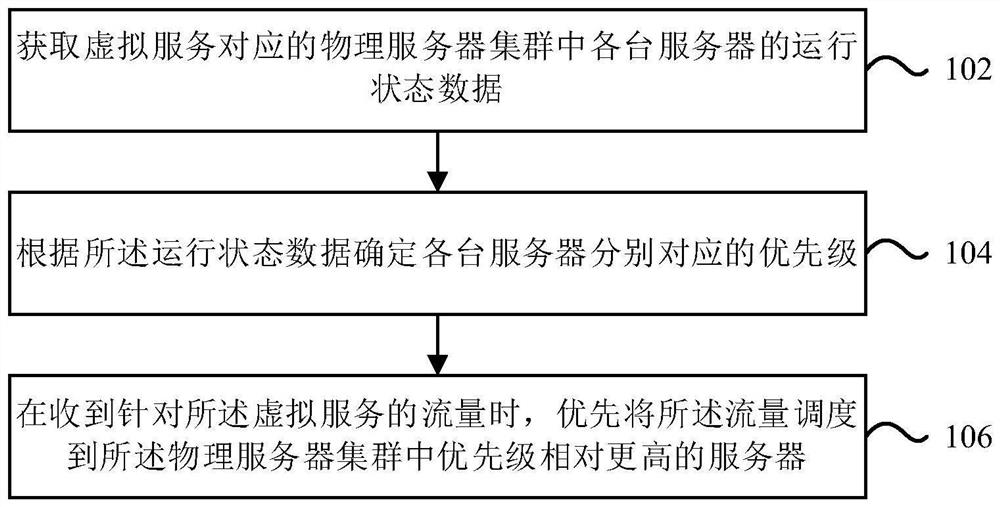

Method used

Image

Examples

Embodiment Construction

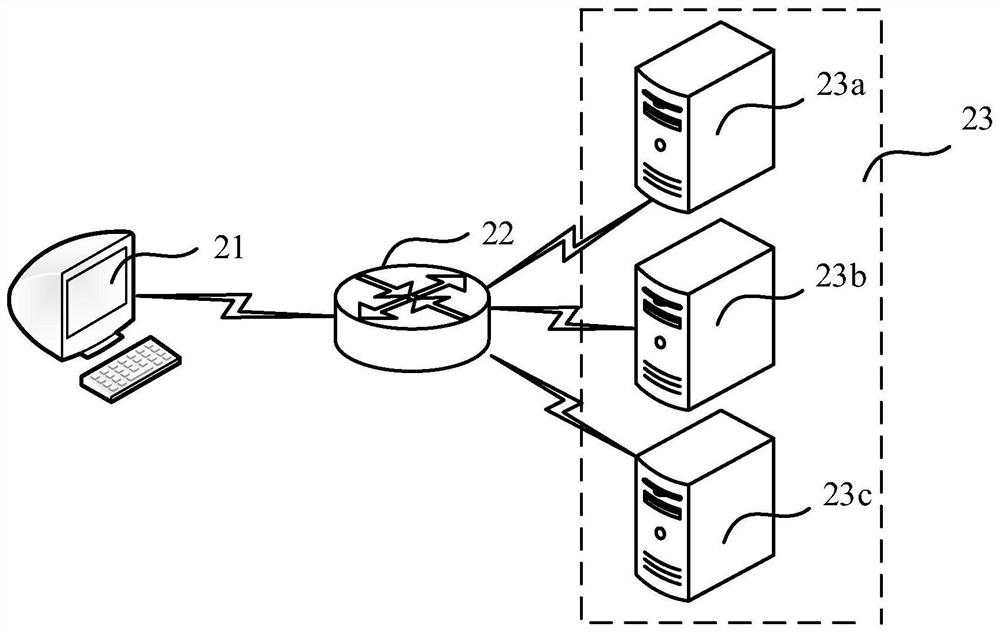

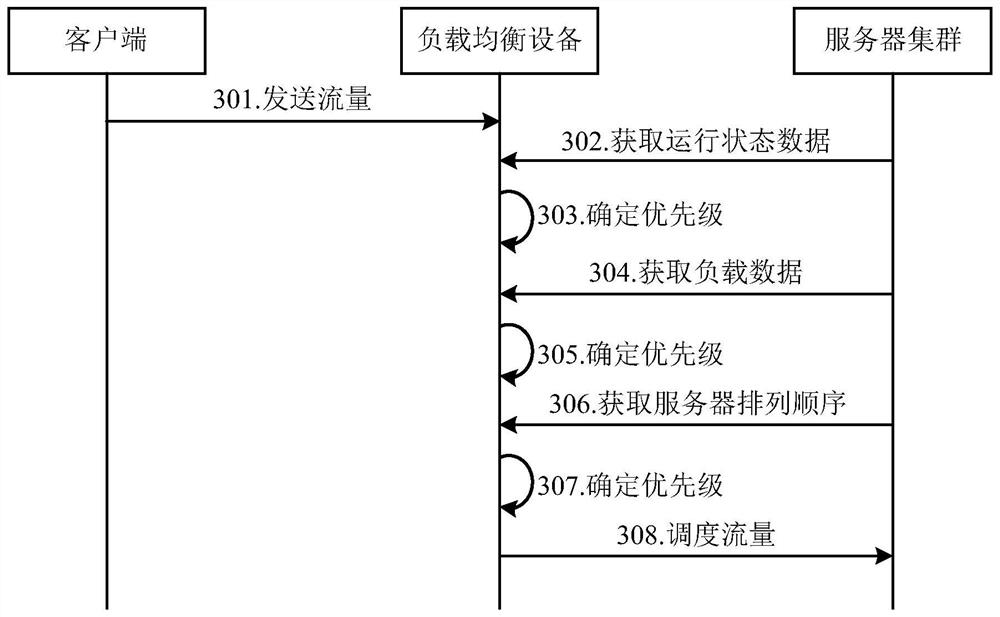

[0026] Reference will now be made in detail to the exemplary embodiments, examples of which are illustrated in the accompanying drawings. When the following description refers to the accompanying drawings, the same numerals in different drawings refer to the same or similar elements unless otherwise indicated. The implementations described in the following exemplary embodiments do not represent all implementations consistent with this application. Rather, they are merely examples of apparatuses and methods consistent with aspects of the present application as recited in the appended claims.

[0027] The terminology used in this application is for the purpose of describing particular embodiments only, and is not intended to limit the application. As used in this application and the appended claims, the singular forms "a", "the", and "the" are intended to include the plural forms as well, unless the context clearly dictates otherwise. It should also be understood that the term...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - Generate Ideas

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com