Video question answering method based on object-oriented two-stream attention network

An object-oriented attention technology, applied in neural learning methods, biological neural network models, digital video signal modification, etc. It can solve problems such as image question answering methods not being directly applicable to video question answering tasks, and achieves the effect of improving exploration ability.

Embodiment

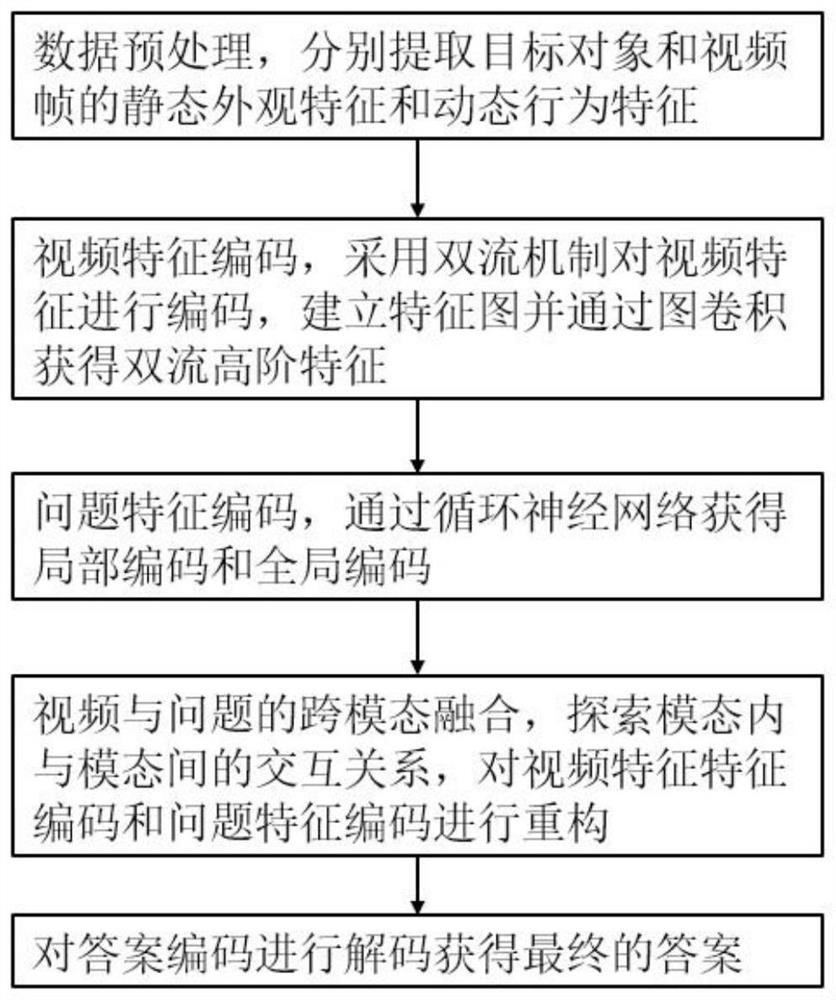

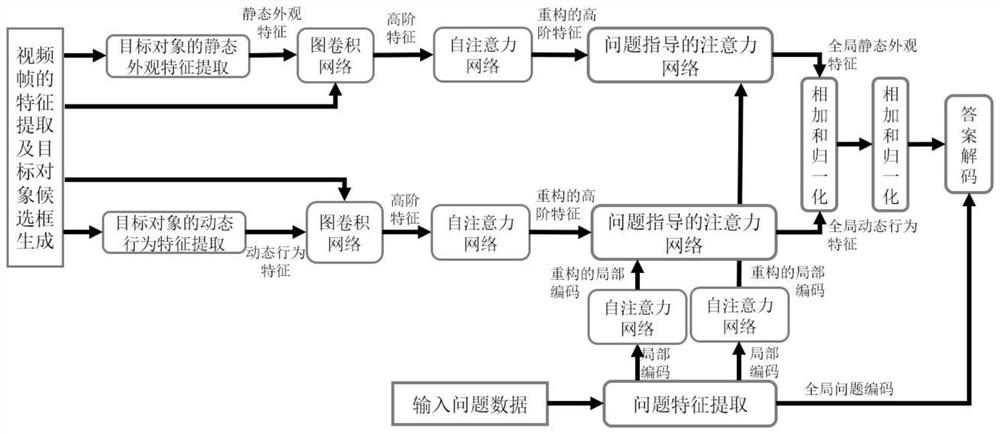

[0118] As shown in Figure 1 and Figure 2, a video question answering method based on an object-oriented two-stream attention network comprises the following steps:

[0119] Step (1): preprocess the input data. For an input video, frames are first sampled by average (evenly spaced) sampling; in the present invention, T = 10 frames are sampled from each video. The Faster R-CNN object detection algorithm is then applied to each sampled frame to detect target objects, yielding multiple candidate boxes per frame. In addition, convolutional networks extract static appearance features and dynamic behavior features for each video frame: a ResNet-152 network trained on the ImageNet image library extracts the static appearance features, and an I3D network trained on the Kinetics action recognition dataset extracts the dynamic behavior features. Finally, the RoIAlign method ...
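The frame sampling in Step (1) can be illustrated with a minimal sketch. The text states only that T = 10 frames are taken per video by average sampling; it does not say how the indices are chosen. The sketch below assumes the video is split into T equal segments and the centre frame of each segment is taken. The function name `uniform_frame_indices` and its parameters are hypothetical and do not come from the patent.

```python
def uniform_frame_indices(num_frames, num_samples=10):
    """Return `num_samples` evenly spaced frame indices for a video.

    Assumption (not stated in the source): the video is divided into
    `num_samples` equal segments and the centre frame of each is taken.
    """
    if num_frames <= 0 or num_samples <= 0:
        raise ValueError("num_frames and num_samples must be positive")
    segment = num_frames / num_samples  # segment length in frames (may be fractional)
    # Centre of segment i, truncated to an integer index and clamped to the last frame.
    return [min(int((i + 0.5) * segment), num_frames - 1)
            for i in range(num_samples)]


if __name__ == "__main__":
    # A 100-frame video sampled with T = 10, as in the described embodiment.
    print(uniform_frame_indices(100, 10))
```

When a video has fewer than T frames, this sketch repeats indices rather than failing, so every video still yields exactly T frames for the later detection and feature extraction stages.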
