Fashion compatibility analysis method and system based on deep multi-modal feature fusion

A feature fusion and analysis technology applied in the field of computer vision and image applications. It addresses the scarcity of research on multimodal information fusion for fashion items, improves accuracy, achieves a reasonable matching effect, and has broad application prospects and practical value.

Examples

Embodiment 1

[0046] In order to make the above objects, features and advantages of the present application more clearly understood, the present application will be described in further detail below with reference to the accompanying drawings and specific embodiments.

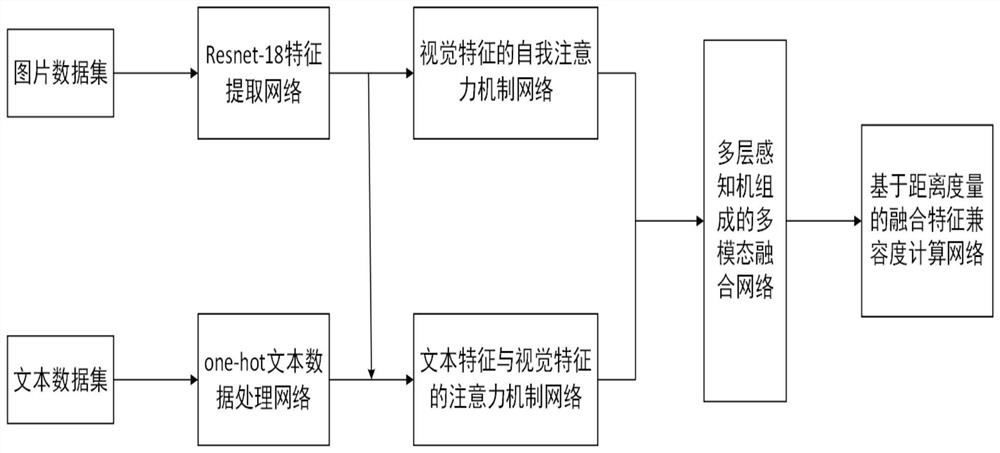

[0047] As shown in Figure 1, a fashion compatibility analysis method based on deep multimodal feature fusion includes:

[0048] Sample feature extraction: a ResNet-18-based visual feature extraction network extracts the visual features, and a one-hot-encoding-based text feature extraction network processes the text data;
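The text branch in [0048] is described only as one-hot-encoding-based. A minimal stdlib sketch of that encoding step follows, assuming whitespace tokenization of each item's description and, as an additional assumption not stated in the source, aggregating the token one-hots into a single multi-hot vector per item. The names `build_vocab`, `one_hot` and `encode_text` are hypothetical.

```python
from typing import Dict, List


def build_vocab(texts: List[str]) -> Dict[str, int]:
    """Map every distinct lower-cased whitespace token to a column index."""
    vocab: Dict[str, int] = {}
    for text in texts:
        for token in text.lower().split():
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab


def one_hot(token: str, vocab: Dict[str, int]) -> List[int]:
    """One-hot vector for a single token; unknown tokens map to all zeros."""
    vec = [0] * len(vocab)
    idx = vocab.get(token.lower())
    if idx is not None:
        vec[idx] = 1
    return vec


def encode_text(text: str, vocab: Dict[str, int]) -> List[int]:
    """Assumed aggregation: OR the token one-hots into one multi-hot vector."""
    vec = [0] * len(vocab)
    for token in text.lower().split():
        idx = vocab.get(token)
        if idx is not None:
            vec[idx] = 1
    return vec


if __name__ == "__main__":
    descriptions = ["blue denim jacket", "white cotton shirt", "blue cotton jeans"]
    vocab = build_vocab(descriptions)
    print(encode_text("blue cotton shirt", vocab))  # → [1, 0, 0, 0, 1, 1, 0]
```

In a full model this sparse vector would typically be fed to a learned layer that produces the dense text feature; that layer is outside this sketch.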

[0049] Feature fusion: after extraction, an attention-based visual and text feature fusion network fuses the extracted visual and text features, and an attention-based visual feature self-attention network strengthens the feature expression of the visual modality;
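The two attention components in [0049] can be illustrated with scaled dot-product attention. The sketch below is an assumption-laden stand-in, not the patented network: it uses identity query/key/value projections instead of learned weights, treats the visual features as a list of region vectors (for example, cells of a ResNet-18 feature map), assumes the text feature has already been projected to the same dimension, and fuses by concatenation. All function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of attention scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for a single query (identity projections)."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


def visual_self_attention(regions: List[Vector]) -> List[Vector]:
    """Each visual region attends to all regions; a residual add keeps the original signal."""
    enhanced = []
    for region in regions:
        context = attend(region, regions, regions)
        enhanced.append([r + c for r, c in zip(region, context)])
    return enhanced


def fuse(regions: List[Vector], text: Vector) -> Vector:
    """Text-guided attention over self-attended regions, concatenated with the text vector."""
    enhanced = visual_self_attention(regions)
    attended_visual = attend(text, enhanced, enhanced)
    return attended_visual + text  # list concatenation: length 2 * d


if __name__ == "__main__":
    regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-D region features
    text = [0.5, -0.5]                               # toy text feature, already projected
    print(fuse(regions, text))
```

Concatenation keeps both modalities intact for the later compatibility stage; a weighted sum or gating would be equally plausible choices, and the source does not say which fusion operator is used.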

...

Embodiment 2

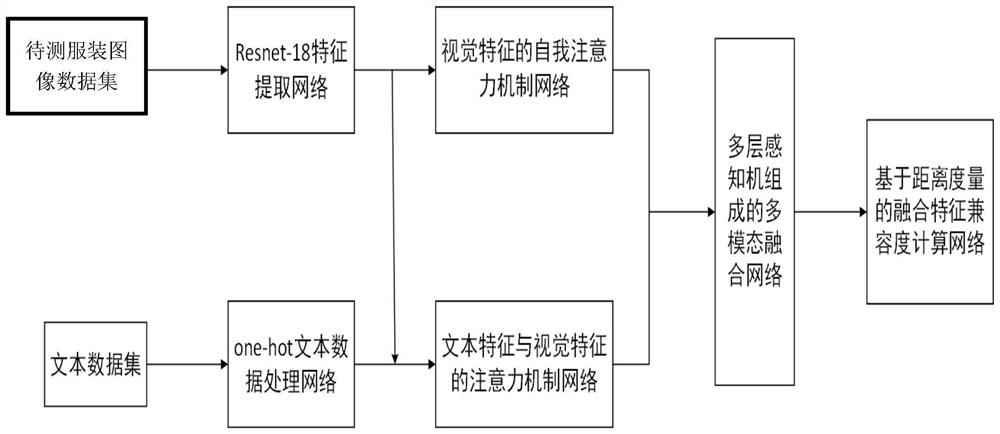

[0075] As shown in Figure 2, fashion compatibility analysis of clothing images to be tested is widely used in the field of computer vision and graphics. In terms of feature expression, different angles carry different fashion item information, and fusing this information ensures the integrity of the item features. For different categories of clothing items, the method uses the sample feature extraction network (a ResNet-18-based visual feature extraction network and a one-hot-encoding-based text feature extraction network) and an attention-based visual and text feature fusion network to strengthen the feature expression of the visual modality of fashion items, and uses a fusion-feature-based compatibility calculation network to shorten the distance between positive pairs of fused features in the multimodal vector space while enlarging the distance between negative pairs. The present invention can reasonably match individual items, improve t...
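The compatibility objective in [0075] (pull positive pairs of fused features together, push negative pairs apart) matches the behavior of a triplet margin loss. The sketch below uses that loss with Euclidean distance as an assumed stand-in; the margin value, the `exp(-distance)` compatibility score and the pairwise outfit average are hypothetical choices, not details from the source.

```python
import math
from itertools import combinations
from typing import List, Sequence

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_margin_loss(anchor: Vector, positive: Vector, negative: Vector,
                        margin: float = 1.0) -> float:
    """Zero once the negative is at least `margin` farther from the anchor than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def compatibility_score(a: Vector, b: Vector) -> float:
    """Hypothetical score in (0, 1]: 1.0 for identical fused features, decaying with distance."""
    return math.exp(-euclidean(a, b))


def outfit_compatibility(items: List[Vector]) -> float:
    """Hypothetical outfit score: mean pairwise compatibility (needs at least two items)."""
    pairs = list(combinations(items, 2))
    return sum(compatibility_score(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    top, matching_bottom, clashing_bottom = [0.0, 0.0], [0.0, 1.0], [0.0, 1.5]
    # The clashing item is not yet margin-farther than the match, so the loss is positive
    print(triplet_margin_loss(top, matching_bottom, clashing_bottom))  # → 0.5
```

Minimizing this loss during training is what "shortens the positive pair distance and expands the negative pair distance"; at inference time only the distance-based score is needed.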

Embodiment 3

[0103] In order to make the above objects, features and advantages of the present application more obvious and easier to understand, the present application will be described in further detail below with reference to the accompanying drawings and specific embodiments.

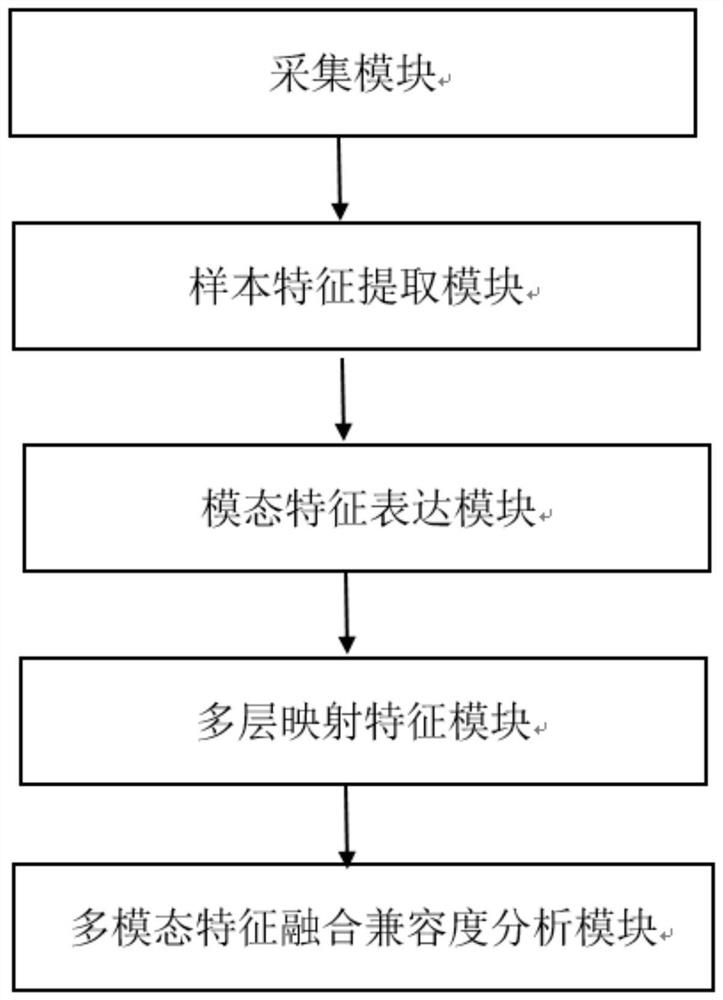

[0104] As shown in Figure 3, a fashion compatibility analysis system based on deep multimodal feature fusion includes an acquisition module, a sample feature extraction module, a modal feature expression module, a multi-layer mapping feature module and a multimodal feature fusion compatibility analysis module;

[0105] The acquisition module is used to collect a sample set of data to be tested;

[0106] The sample feature extraction module is used to train the sample feature extraction network on the data sample set to be tested and to obtain the sample features of the data in that set;

[0107] The modal feature expression module is used to perform feature ...
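One way to picture the five modules of [0104] is as a pipeline of pluggable stages. The sketch below is hypothetical: the `Item` fields, the stage signatures, and the order in which features flow from extraction through expression and multi-layer mapping to analysis are assumptions inferred from the order in which the modules are listed, and the toy lambdas stand in for trained networks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence

Vector = List[float]
Features = Dict[str, Vector]


@dataclass
class Item:
    image_path: str  # hypothetical: path to the clothing image
    text: str        # hypothetical: category or description text


@dataclass
class CompatibilitySystem:
    """Hypothetical wiring of the five modules listed in [0104] as pluggable stages."""
    acquire: Callable[[], List[Item]]             # acquisition module
    extract: Callable[[Item], Features]           # sample feature extraction module
    express: Callable[[Features], Features]       # modal feature expression module
    map_features: Callable[[Features], Vector]    # multi-layer mapping feature module
    analyze: Callable[[Sequence[Vector]], float]  # fusion compatibility analysis module

    def run(self) -> float:
        items = self.acquire()
        mapped = [self.map_features(self.express(self.extract(item))) for item in items]
        return self.analyze(mapped)


if __name__ == "__main__":
    system = CompatibilitySystem(
        acquire=lambda: [Item("top.jpg", "white shirt"), Item("bottom.jpg", "blue jeans")],
        extract=lambda item: {"visual": [1.0, 0.0], "text": [float(len(item.text))]},
        express=lambda feats: feats,                                 # identity stand-in
        map_features=lambda feats: feats["visual"] + feats["text"],  # concatenation stand-in
        analyze=lambda vecs: float(len(vecs)),                       # placeholder score
    )
    print(system.run())
```

Keeping each module as an injected callable makes it easy to swap a toy stage for a trained network (for example, the attention fusion or triplet-trained scorer) without changing the pipeline itself.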
