Virtual machine task management system

A virtual machine and task management technology, applied in the field of network switches, that can solve the problems of overly expensive solutions, failure of any given switch, and destruction of the integrity of the entire switching system.

Embodiment Construction

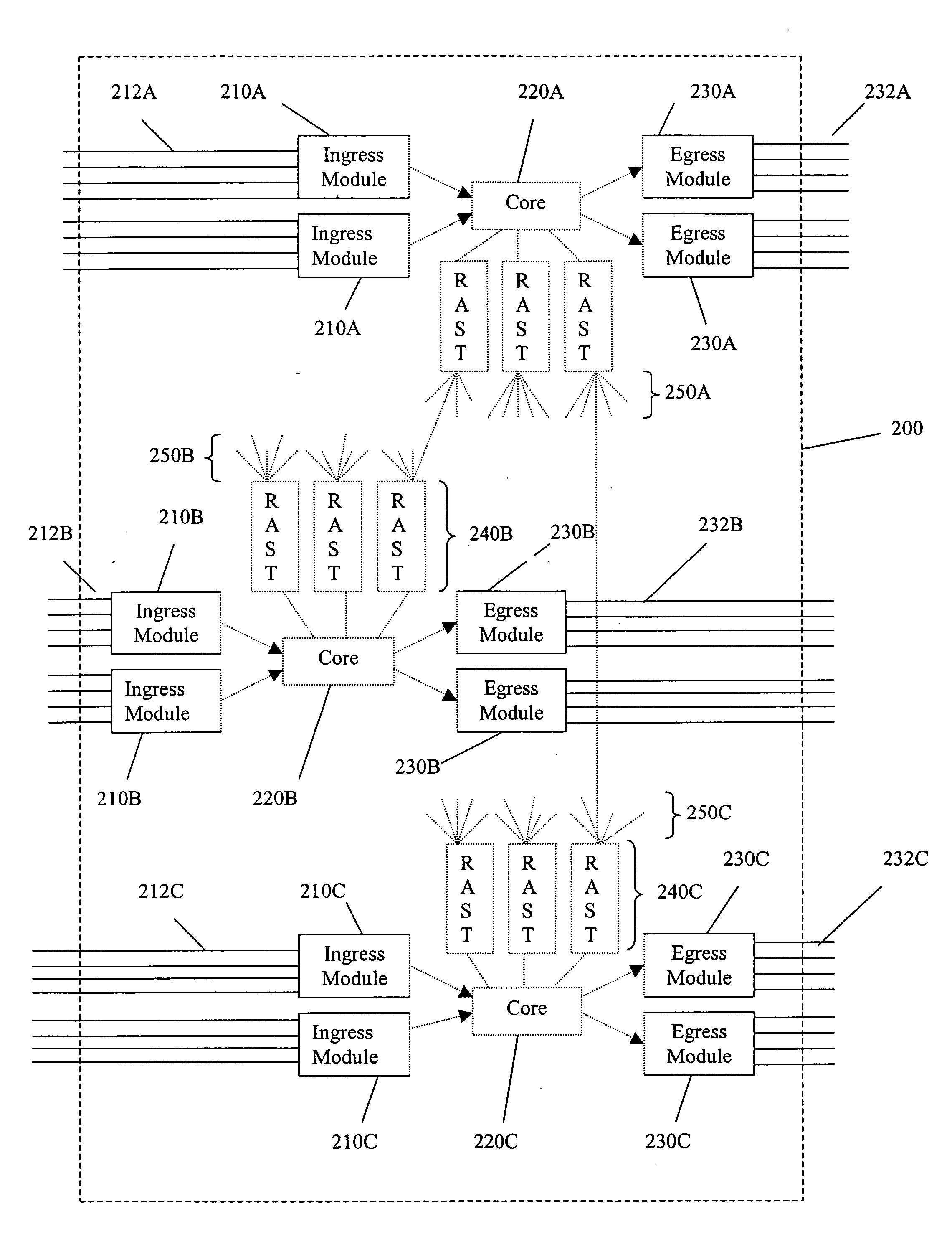

[0028] In FIG. 2, a switching system 200 generally includes ingress elements 210A-C, egress elements 230A-C, core switching elements 220A-C, and connector elements 240A-C. The ingress elements encapsulate incoming packets with a routing header (see FIG. 3) and perform initial switching. The encapsulated packets then enter the core elements for further switching. The intermediate (connector) elements facilitate communication between core elements. The egress elements remove the header and deliver the packets to a sink or final destination.
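The packet path through the system of FIG. 2 can be sketched roughly as follows. This is only an illustration under stated assumptions, not the implementation described in the patent: the names `Frame`, `ingress`, `core_switch`, `egress`, and `deliver`, the single-field header, and the routing table are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Frame:
    """An encapsulated packet: a routing header plus the original payload."""
    dest_element: str  # hypothetical header field naming the egress element
    payload: bytes


def ingress(payload: bytes, dest_element: str) -> Frame:
    # Ingress element: wrap the incoming packet with a routing header.
    return Frame(dest_element=dest_element, payload=payload)


def core_switch(frame: Frame, routes: Dict[str, str]) -> str:
    # Core element: pick the next hop from the header alone,
    # without inspecting the payload.
    return routes[frame.dest_element]


def egress(frame: Frame) -> bytes:
    # Egress element: strip the header and hand back the original packet.
    return frame.payload


def deliver(payload: bytes, dest_element: str,
            routes: Dict[str, str]) -> Tuple[str, bytes]:
    frame = ingress(payload, dest_element)
    next_hop = core_switch(frame, routes)
    return next_hop, egress(frame)
```

In this sketch the payload leaves the egress step byte-for-byte identical to what entered the ingress step; only the header is consulted for switching decisions.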

[0029] Those skilled in the art will appreciate that the switching (encapsulation) header must, at a bare minimum, include a destination element address. In preferred embodiments the header also includes a destination port ID and, where elements are clustered, an optional destination cluster ID. Also optional are fields for source cluster, source element, and source port IDs. As used herein an “ID” is something that is the same as, or can be resolved into a...
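One way to picture the header fields listed in [0029] is as a record with a single required field and several optional ones. The sketch below is an assumption made for illustration: the class name `SwitchingHeader`, the field names, and the use of integers for IDs are hypothetical, and the source does not specify field widths or encodings.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass(frozen=True)
class SwitchingHeader:
    # Required: the bare-minimum field named in the text.
    dest_element: int
    # Included in preferred embodiments.
    dest_port: Optional[int] = None
    # Optional, relevant where elements are clustered.
    dest_cluster: Optional[int] = None
    # Optional source-side fields.
    src_cluster: Optional[int] = None
    src_element: Optional[int] = None
    src_port: Optional[int] = None

    def present_fields(self) -> List[str]:
        """Names of the fields that are actually populated, in declaration order."""
        return [f.name for f in fields(self)
                if getattr(self, f.name) is not None]
```

For example, `SwitchingHeader(dest_element=7)` is a valid minimal header in this sketch, while `SwitchingHeader(7, dest_port=2, dest_cluster=1)` corresponds to the clustered case.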
