Hardware support for superpage coalescing

Superpage coalescing hardware technology, applied in the field of computer systems. It addresses the inability to efficiently coalesce pages into superpages and the inability to meet users' needs, facilitating efficient coalescing and reducing the latencies associated with page copying.

Embodiment Construction

[0029] With reference now to the figures, and in particular with reference to FIG. 3, there is depicted one embodiment 40 of a memory subsystem constructed in accordance with the present invention. Memory subsystem 40 generally comprises a memory controller 42 and a system or main memory array 44. It is adapted to facilitate superpage coalescing for an operating system that controls virtual-to-physical page mappings in a data processing system. The operating system (OS) may have many conventional features, including appropriate software that determines page mappings and decides when it is desirable to coalesce pages into a larger (super)page; such details are beyond the scope of the present invention but will become apparent to those skilled in the art.

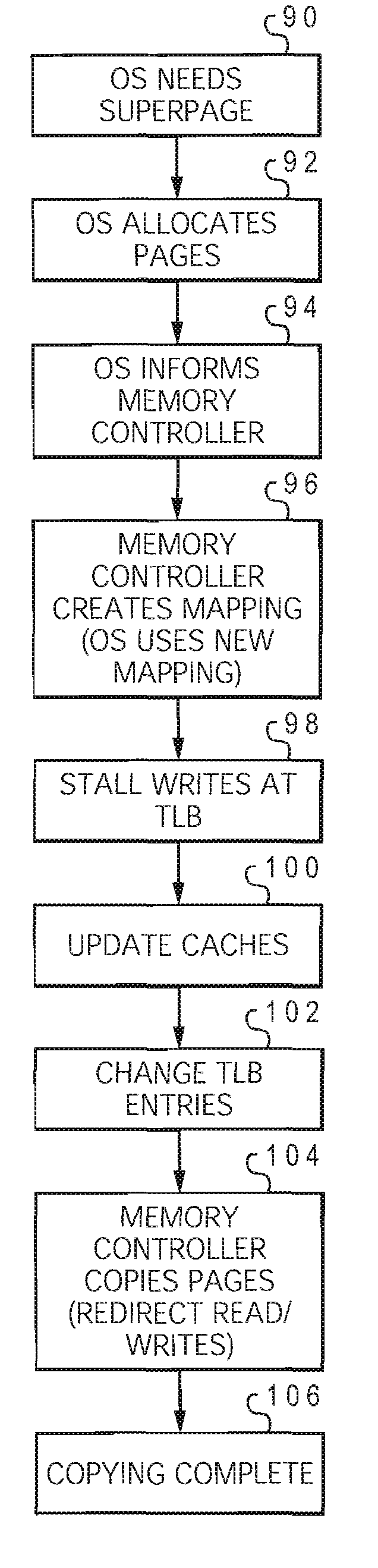
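The OS decision mentioned in [0029] is left outside the invention's scope, but one common precondition can be sketched. A group of base pages can be promoted to a single superpage mapping without copying only if the virtual range is superpage-aligned and the backing physical frames are contiguous and aligned. When that test fails, the data must first be moved, which is the copying cost the hardware is meant to reduce. This is a minimal illustration under stated assumptions: the dictionary page table, the function name `can_coalesce_in_place`, and the 16-page superpage size are all hypothetical and not taken from the patent.

```python
from typing import Dict

# Assumption: a superpage spans 16 base pages (e.g. 64 KiB with 4 KiB base pages).
PAGES_PER_SUPERPAGE = 16


def can_coalesce_in_place(page_table: Dict[int, int], vpn_start: int) -> bool:
    """Hypothetical check: can the base pages starting at virtual page number
    vpn_start be promoted to one superpage mapping without copying any data?

    page_table maps virtual page numbers (VPNs) to physical frame numbers (PFNs).
    """
    # The virtual range must be aligned to the superpage size.
    if vpn_start % PAGES_PER_SUPERPAGE != 0:
        return False
    first_pfn = page_table.get(vpn_start)
    # The first frame must be mapped and aligned to the superpage size.
    if first_pfn is None or first_pfn % PAGES_PER_SUPERPAGE != 0:
        return False
    # Every base page must map to the next physically contiguous frame.
    return all(
        page_table.get(vpn_start + i) == first_pfn + i
        for i in range(PAGES_PER_SUPERPAGE)
    )


if __name__ == "__main__":
    contiguous = {vpn: 32 + vpn for vpn in range(16)}
    print(can_coalesce_in_place(contiguous, 0))  # True
    scattered = {**contiguous, 5: 999}
    print(can_coalesce_in_place(scattered, 0))   # False: copying would be needed
```

A `False` result for an otherwise eligible region is the situation in which pages would have to be relocated before a superpage mapping can be installed.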

[0030] Memory subsystem 40 provides a hardware solution to superpage coalescing that reduces or eliminates the poor TLB behavior that occurs with the prior-art software-directed copying solution. In the present invention...
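For contrast with the hardware approach, the prior-art software-directed copying can be sketched generically. The processor copies each scattered base page into an aligned, contiguous destination region, rewrites the mappings, and must then purge the stale base-page translations from the TLB. This sketch only illustrates the work involved; it is not the patent's mechanism. The function `software_coalesce`, the set standing in for the TLB, and the 4-page superpage size are hypothetical.

```python
from typing import Dict, Set

# Hypothetical small superpage of 4 base pages, to keep the example readable.
PAGES_PER_SUPERPAGE = 4


def software_coalesce(
    page_table: Dict[int, int],
    memory: Dict[int, bytes],
    tlb: Set[int],
    vpn_start: int,
    dest_pfn: int,
) -> int:
    """Sketch of software-directed coalescing: copy each base page into an
    aligned, contiguous destination region, remap it, and invalidate stale
    TLB entries. Assumes each destination frame is either free or already
    holds the correct page. Returns the number of base pages copied."""
    if vpn_start % PAGES_PER_SUPERPAGE or dest_pfn % PAGES_PER_SUPERPAGE:
        raise ValueError("virtual and physical ranges must be superpage-aligned")
    copies = 0
    for i in range(PAGES_PER_SUPERPAGE):
        vpn = vpn_start + i
        src_pfn = page_table[vpn]
        if src_pfn != dest_pfn + i:
            # The processor performs this copy, one base page at a time.
            memory[dest_pfn + i] = memory[src_pfn]
            copies += 1
        page_table[vpn] = dest_pfn + i
        # The old base-page translation is now stale and must leave the TLB.
        tlb.discard(vpn)
    return copies
```

Every copied page costs processor time and memory traffic before the single superpage mapping can be used.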
