Method and apparatus for dynamic resizing of cache partitions based on the execution phase of tasks

A cache-partitioning technique based on task execution phases, applied in the fields of memory architecture access/allocation, memory address allocation/relocation, and memory systems, with the effect of avoiding retention and achieving effective utilization.

Embodiment Construction

[0023] The above and other features, aspects and advantages of the present invention are described in detail below in conjunction with the accompanying drawings. The accompanying drawings include 6 figures.

[0024] Figure 1 illustrates an embodiment of a method for dynamically resizing cache partitions for application tasks in a multiprocessor. The execution phase (101) of each application task is identified using the basic block vector (BBV) metric or the working set of the application task. The phase information and working sets (102) of the application tasks are stored in a table. The cache partitions are then dynamically configured from this phase information, according to the execution phase of each application task.

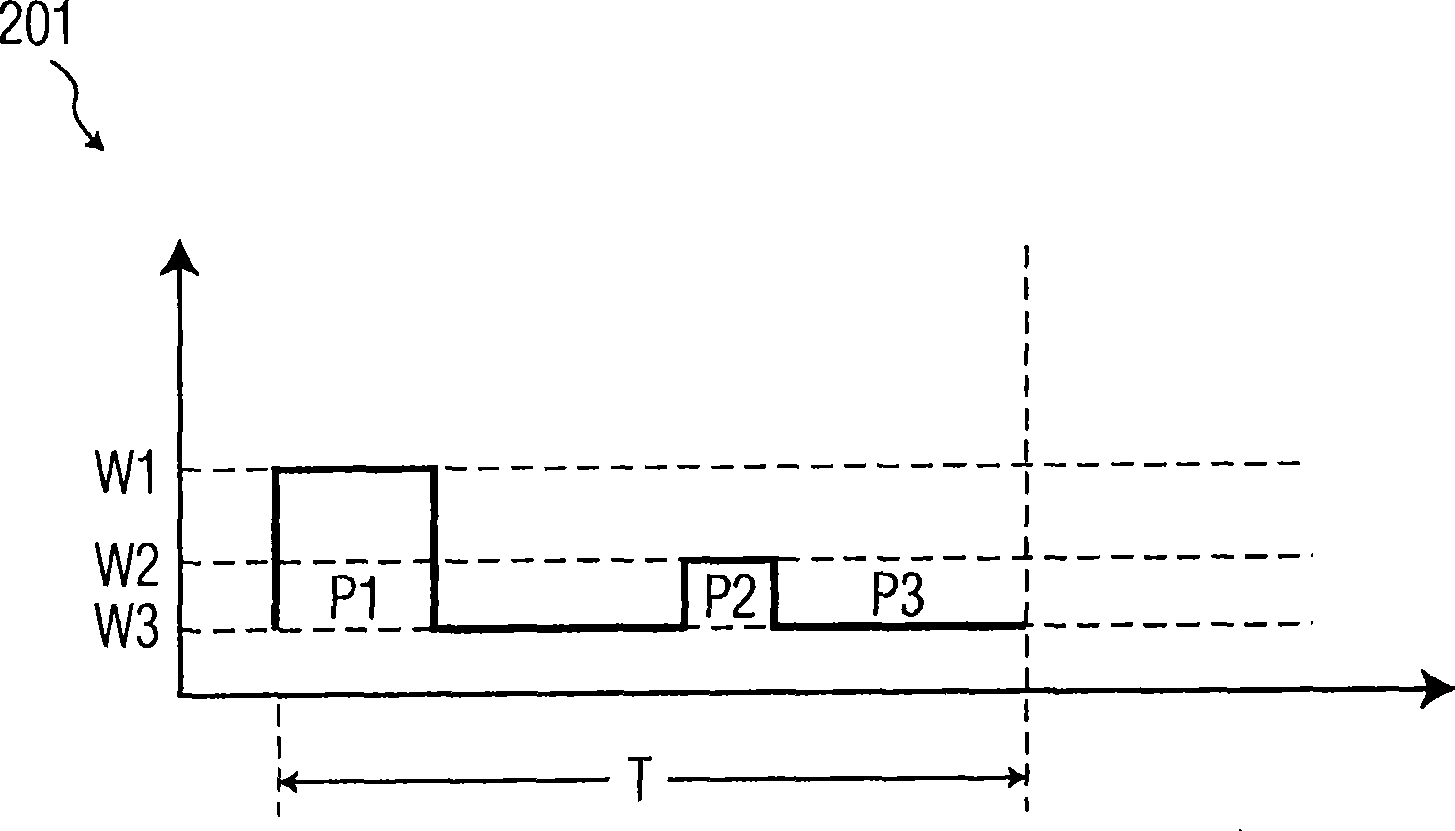
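The phase identification and table storage described in [0024] can be illustrated with a minimal sketch. It is not the patent's implementation: the Manhattan-distance comparison, the `threshold` value, and the names `PhaseTable`, `classify`, `normalize` and `manhattan` are all assumptions introduced here for illustration. Each interval of execution is summarized as a BBV (basic block address mapped to execution count); an interval whose normalized BBV is close to a stored signature is treated as the same phase, otherwise a new table entry is created together with its working-set size.

```python
from dataclasses import dataclass, field


def normalize(bbv):
    """Scale a basic block vector so that its entries sum to 1."""
    total = sum(bbv.values())
    return {block: count / total for block, count in bbv.items()} if total else {}


def manhattan(a, b):
    """Manhattan distance between two normalized BBVs (0 = identical, 2 = disjoint)."""
    return sum(abs(a.get(k, 0.0) - b.get(k, 0.0)) for k in set(a) | set(b))


@dataclass
class PhaseTable:
    """Hypothetical per-task table: one signature and working-set size per phase."""
    threshold: float = 0.5  # assumed similarity cut-off, not taken from the source
    signatures: list = field(default_factory=list)
    working_sets: list = field(default_factory=list)

    def classify(self, bbv, working_set_lines):
        """Return the phase id for this interval, adding a new phase if none matches."""
        sig = normalize(bbv)
        for phase_id, known in enumerate(self.signatures):
            if manhattan(sig, known) < self.threshold:
                return phase_id
        self.signatures.append(sig)
        self.working_sets.append(working_set_lines)
        return len(self.signatures) - 1


# Three execution intervals: the first two run mostly the same basic blocks.
phases = PhaseTable()
first = phases.classify({0x400: 90, 0x440: 10}, working_set_lines=128)
second = phases.classify({0x400: 85, 0x440: 15}, working_set_lines=128)
third = phases.classify({0x800: 50, 0x840: 50}, working_set_lines=512)
print(first, second, third)
```

In this sketch the first two intervals map to the same phase and the third opens a new table entry, so a partition controller would only need to act when the returned phase id changes.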

[0025] According to the proposed invention, the size of the cache partition is adjusted at certain points during application task execution such that, at any given point in time, the application task is allocated a necessary and sufficient amoun...
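The sizing idea in [0025] can be sketched as follows, under stated assumptions. The function name `resize_partitions`, the use of cache lines as the unit, and the proportional scale-down when the combined demand exceeds the cache are hypothetical choices made for this example; the excerpt does not say how contention is resolved.

```python
def resize_partitions(total_lines, demands):
    """Size each task's partition to the working set of its current phase.

    demands maps a task name to the working-set size (in cache lines)
    recorded for the phase that task is currently executing.
    """
    needed = sum(demands.values())
    if needed <= total_lines:
        # Every task receives exactly its working set; the rest stays free.
        return dict(demands)
    # Assumed fallback: shrink all partitions proportionally (integer floor).
    return {task: lines * total_lines // needed for task, lines in demands.items()}


# Task "a" moves from a 256-line phase to a 64-line phase; re-running the
# sizing step releases the lines it no longer needs.
before = resize_partitions(1024, {"a": 256, "b": 512})
after = resize_partitions(1024, {"a": 64, "b": 512})
print(before, after)
```

Calling the function again whenever a task's phase changes keeps each partition matched to the current working set rather than to a peak or initial estimate.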
