Depth map intra prediction method based on linear model

A depth-map intra-frame prediction technique based on a linear model, applied in the field of communications. It addresses the low coding efficiency of existing methods, which fail to consider the inherent characteristics of depth maps. It improves coding efficiency, yields more accurate prediction values, and lowers the coding bit rate.


Embodiment Construction

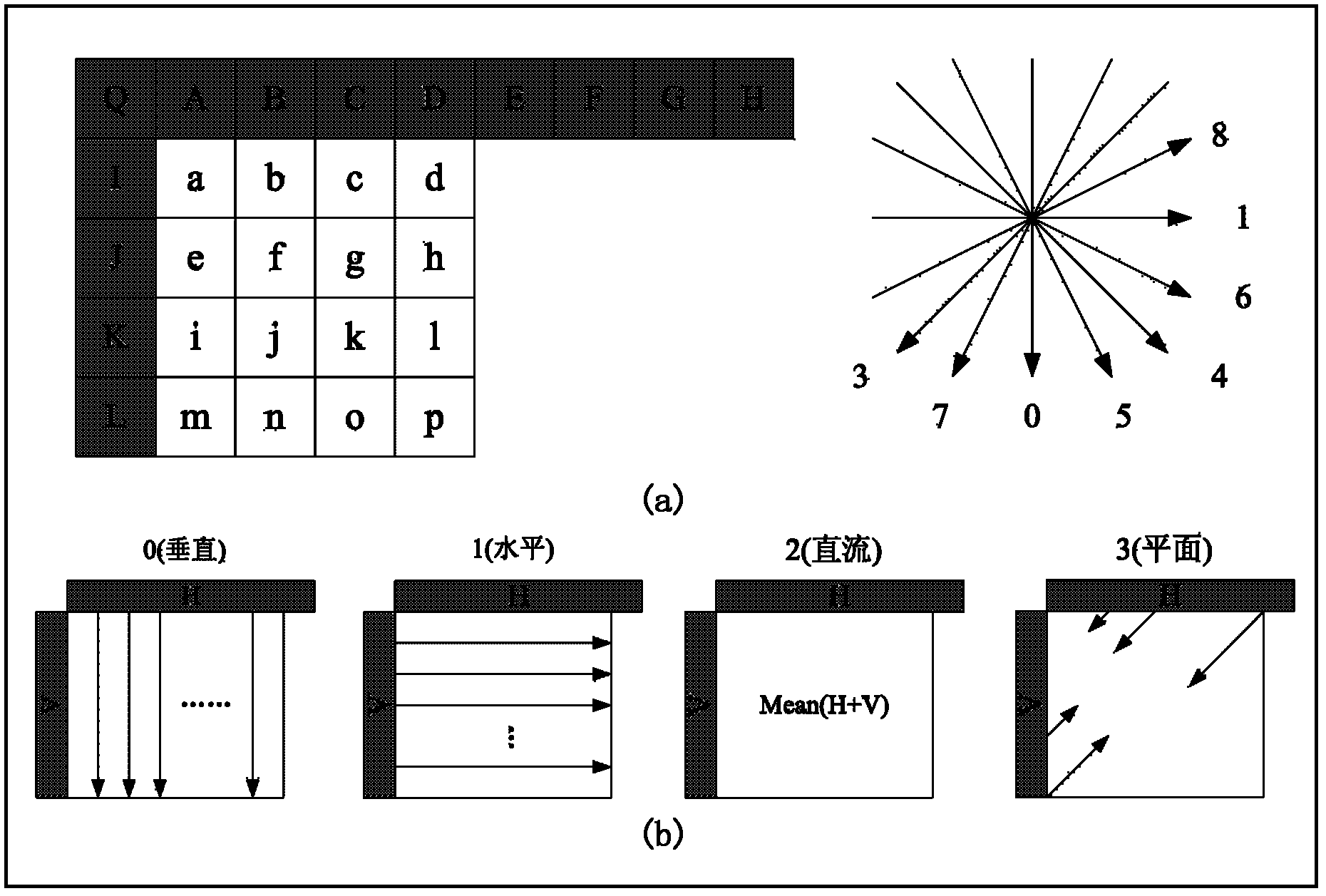

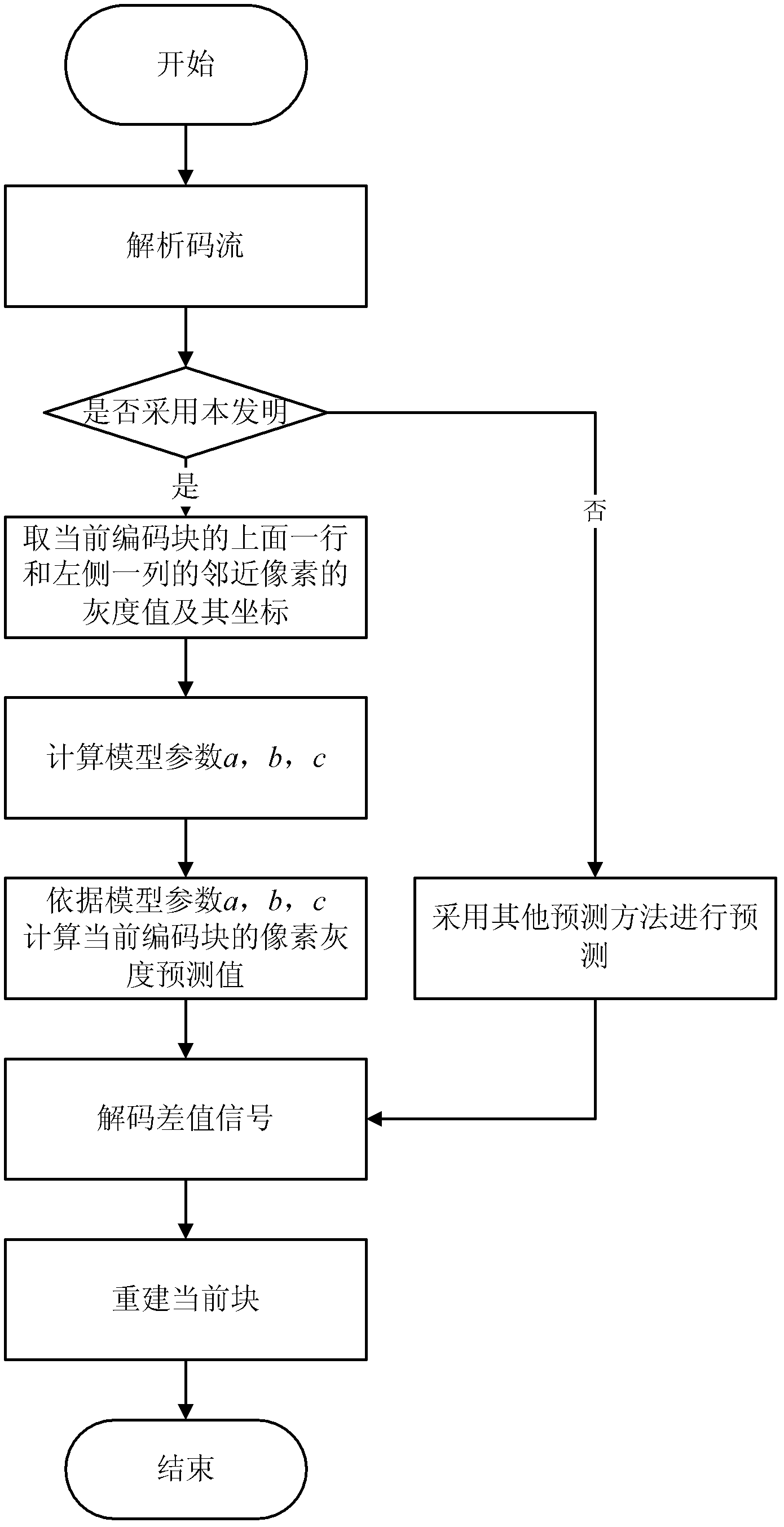

[0049] The linear-model-based depth-map intra-frame prediction method of the present invention first calculates the linear model parameters from the gray values and pixel coordinates of the pixels adjacent to the current coding block. It then calculates the predicted gray value of each pixel in the current coding block from those model parameters and the block's pixel coordinates. The method requires the encoder and the decoder to be modified together, so its implementation comprises both an encoding-side process and a decoding-side process.
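The excerpt does not say how the parameters are derived from the neighbouring pixels. The sketch below assumes an ordinary least-squares fit of the planar model L = a·x + b·y + c (introduced in Step 1) over the reconstructed row above and the column to the left of an n × n block, with coordinates measured relative to the block's top-left pixel. The helper names (`neighbor_samples`, `solve3`, `fit_plane`) and the neighbour layout are hypothetical. Because only reconstructed samples are used, a decoder could repeat the same computation, which would be consistent with the requirement that encoder and decoder be modified together; whether the patented method transmits any parameters is not stated here.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[int, int, float]  # (x, y, gray value); x, y relative to the block's top-left pixel


def neighbor_samples(recon: Sequence[Sequence[float]], x0: int, y0: int, n: int) -> List[Sample]:
    """Collect reconstructed pixels from the row above and the column left of the
    n x n block whose top-left pixel is recon[y0][x0] (assumed layout: recon[row][col])."""
    samples: List[Sample] = []
    if y0 > 0:  # row above the block sits at relative y = -1
        for i in range(n):
            samples.append((i, -1, recon[y0 - 1][x0 + i]))
    if x0 > 0:  # column left of the block sits at relative x = -1
        for j in range(n):
            samples.append((-1, j, recon[y0 + j][x0 - 1]))
    return samples


def solve3(m: List[List[float]], v: List[float]) -> Tuple[float, float, float]:
    """Solve the 3x3 system m * p = v by Gauss-Jordan elimination with partial pivoting."""
    aug = [list(row) + [val] for row, val in zip(m, v)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("neighbouring samples do not determine a unique plane")
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(3):
            if r != col:  # zero out this column in every other row
                f = aug[r][col] / aug[col][col]
                for k in range(col, 4):
                    aug[r][k] -= f * aug[col][k]
    return (aug[0][3] / aug[0][0], aug[1][3] / aug[1][1], aug[2][3] / aug[2][2])


def fit_plane(samples: List[Sample]) -> Tuple[float, float, float]:
    """Least-squares (a, b, c) for L = a*x + b*y + c; the fitting criterion is an assumption."""
    ata = [[0.0] * 3 for _ in range(3)]  # A^T A, where each row of A is [x, y, 1]
    atl = [0.0] * 3                      # A^T L
    for x, y, val in samples:
        row = (x, y, 1)
        for i in range(3):
            atl[i] += row[i] * val
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    return solve3(ata, atl)
```

When only one neighbouring edge is available (for example at a picture border), all samples share one coordinate, the normal equations become singular, and `solve3` raises; how the patented method handles such blocks is not stated in the excerpt.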

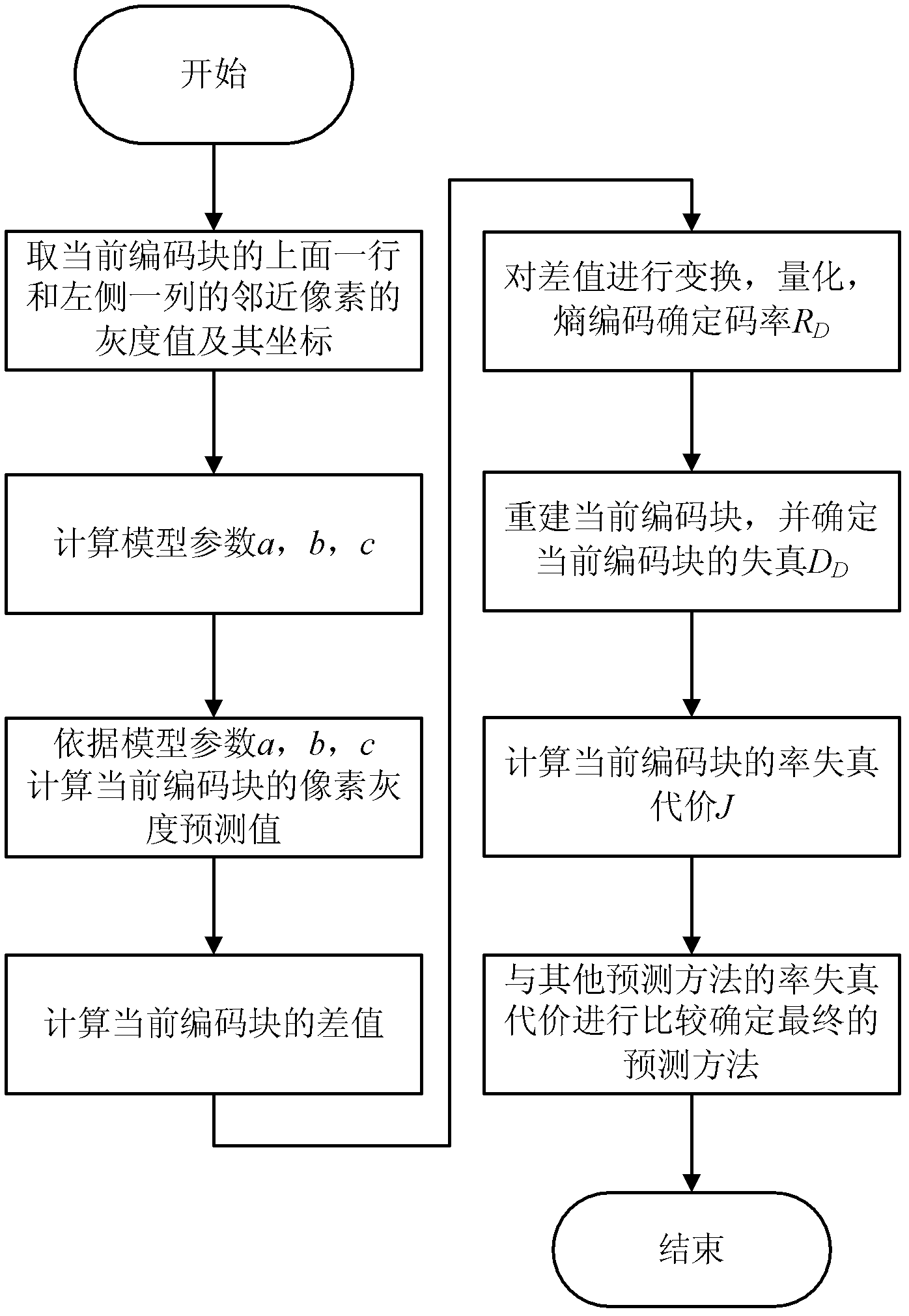

[0050] The encoding-side implementation process, shown in Figure 2, includes the following steps:

[0051] Step 1: Analysis shows that the spatial distribution of depth-map values can be expressed by the following model:

[0052]

$$L = a \cdot x + b \cdot y + c,$$

[0053] where L denotes the gray value of a pixel in the depth map, (x, y) denotes the pixel coordina...
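Once a, b and c are known, the prediction for a block follows by evaluating the model at each pixel coordinate. The sketch below assumes x is the column and y the row, both relative to the block's top-left pixel, 8-bit samples, round-half-up, and clipping to the valid range; none of these conventions is confirmed by the excerpt. The residual and reconstruction helpers follow the usual hybrid-coding convention rather than anything stated in the source, and all function names are hypothetical.

```python
import math
from typing import List

Block = List[List[int]]


def predict_block(a: float, b: float, c: float, n: int, bit_depth: int = 8) -> Block:
    """Evaluate L = a*x + b*y + c at every (x, y) of an n x n block (assumed conventions)."""
    max_val = (1 << bit_depth) - 1
    pred: Block = []
    for y in range(n):
        row = []
        for x in range(n):
            val = math.floor(a * x + b * y + c + 0.5)  # round half up
            row.append(min(max_val, max(0, val)))      # clip to [0, max_val]
        pred.append(row)
    return pred


def encode_residual(orig: Block, pred: Block) -> Block:
    """Encoder side: residual = original - prediction."""
    return [[o - p for o, p in zip(ro, rp)] for ro, rp in zip(orig, pred)]


def reconstruct(pred: Block, residual: Block) -> Block:
    """Decoder side: reconstruction = prediction + residual (no quantisation modelled)."""
    return [[p + r for p, r in zip(rp, rr)] for rp, rr in zip(pred, residual)]
```

For example, `predict_block(2, 3, 30, 4)` produces the ramp whose first row is `[30, 32, 34, 36]`: a sloped planar depth surface is reproduced exactly, which is the case the linear model is designed for. In a real codec the residual would be transformed and quantised, so the decoder's reconstruction would only approximate the original.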

