Time-of-flight depth imaging

Depth imaging technology, applied in image analysis, image communication, image data processing, and related fields, addresses problems such as inaccurate depth measurement and damage to depth cameras.

Embodiment Construction

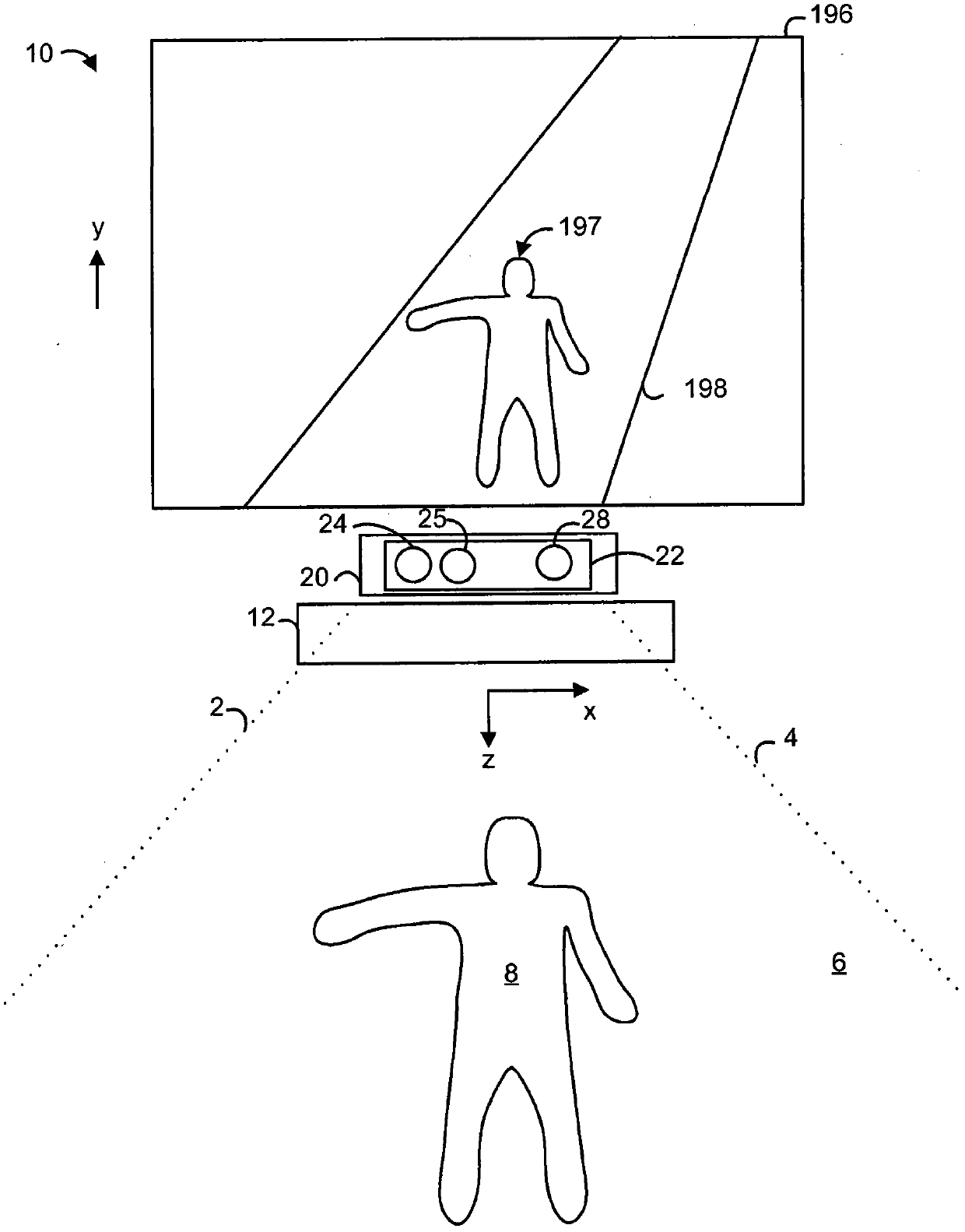

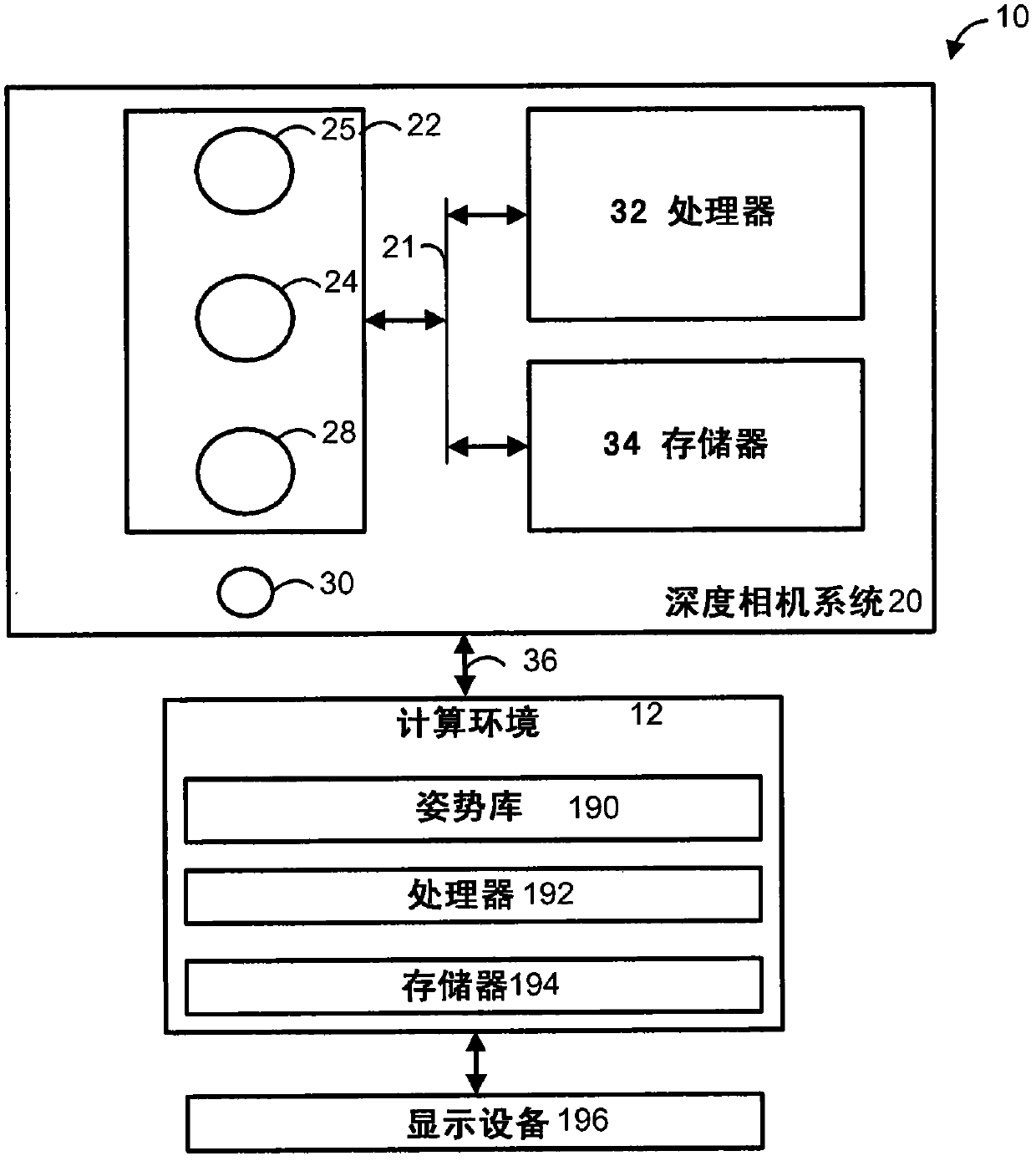

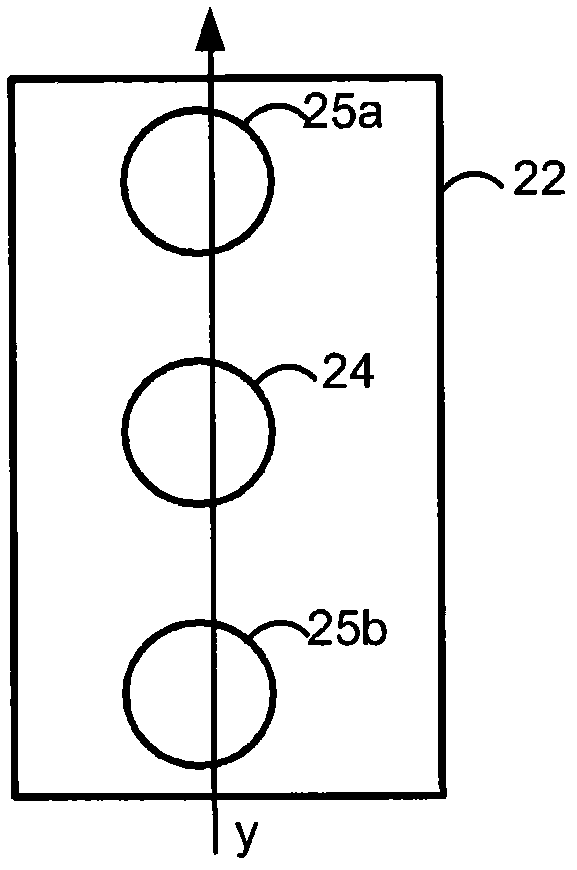

[0028] Techniques for determining depth to an object are provided. A depth image is determined based on two light intensity images collected at different locations or times. For example, a light beam is emitted into a field of view where two image sensors at slightly different positions are used to collect two input light intensity images. Alternatively, light intensity images may be collected from the same sensor but at different times. A depth image can be generated based on these two light intensity images. This technique compensates for differences in the reflectivity of objects in the field of view. However, there may be some misalignment between pixels in these two light intensity images. An iterative process can be used to alleviate the need for exact matching between light intensity images. This iterative process may involve modifying one of the light intensity images based on a smoothed version of the depth image generated from the two light intensity images. Sub...
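The iterative process described above can be illustrated with a minimal sketch. It rests on several assumptions that the excerpt does not state: depth is modeled as proportional to the per-pixel ratio of the two light intensity images (a ratio cancels a per-pixel reflectivity factor common to both images), smoothing is a simple box filter, and the first image is fully replaced on each pass by the values that would reproduce the smoothed depth. The names `box_smooth`, `depth_from_ratio`, `iterative_depth`, and the `scale` parameter are hypothetical and chosen only for illustration.

```python
import numpy as np


def box_smooth(img, k=3):
    """Mean filter with edge padding; a stand-in for any smoothing filter (k odd)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def depth_from_ratio(i1, i2, scale=1.0, eps=1e-9):
    """Assumed depth model: depth proportional to the per-pixel ratio i1 / i2."""
    return scale * i1 / (i2 + eps)


def iterative_depth(i1, i2, iterations=3, scale=1.0, eps=1e-9):
    """Refine depth by repeatedly modifying i1 from a smoothed depth image."""
    i1_mod = np.asarray(i1, dtype=float).copy()
    i2 = np.asarray(i2, dtype=float)
    for _ in range(iterations):
        depth = depth_from_ratio(i1_mod, i2, scale, eps)
        smoothed = box_smooth(depth)
        # Assumption: replace i1 with the intensity that would yield the
        # smoothed depth when paired with the unmodified i2.
        i1_mod = smoothed * (i2 + eps) / scale
    return depth_from_ratio(i1_mod, i2, scale, eps)


# Synthetic scene: constant true depth, spatially varying reflectivity.
rng = np.random.default_rng(0)
reflectivity = rng.uniform(0.2, 1.0, size=(8, 8))
true_depth = 2.0
image_b = reflectivity                # second light intensity image
image_a = reflectivity * true_depth   # first light intensity image
estimate = iterative_depth(image_a, image_b)
```

In this synthetic case the reflectivity factor cancels in the ratio, so `estimate` stays close to the constant true depth. With misaligned real images, a practical variant would likely blend the original and modified intensities or bound the change per pixel rather than replacing the image outright; that choice is not specified in the excerpt.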
