Multi-level cache processing method for a driver in an embedded operating system

An operating system and driver technology, applied in memory systems, multi-program devices, program startup/switching, etc. It addresses problems such as reduced operating efficiency and data leakage, with the effects of saving hardware resource investment, improving operating efficiency, and improving stability.


Embodiment Construction

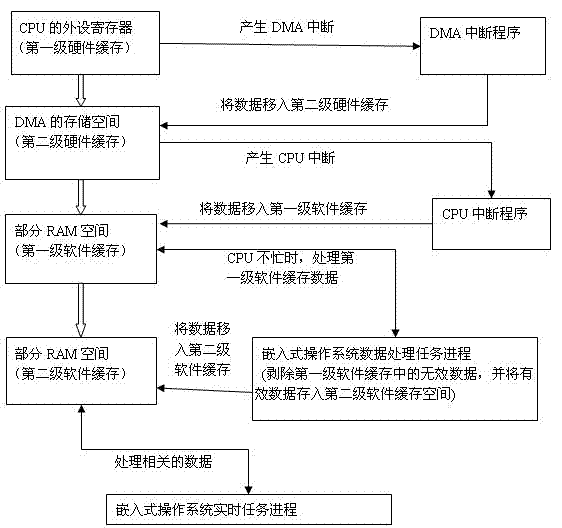

[0011] Referring to Figure 1, the multi-level cache processing method for a driver in an embedded operating system according to the present invention improves the operating efficiency of the embedded system by using software to configure storage units that make full use of existing hardware peripheral resources. The method is as follows. First, the peripheral hardware registers of the CPU are used as the first-level hardware cache; for example, a CPU with A/D sampling has a cache space of 16 words. Second, the DMA storage space of the CPU is used as the second-level hardware cache, whose capacity is generally at the K (kilo) level; when the first-level hardware cache is full, a DMA interrupt is generated and the data in the first-level hardware cache is read into the second-level hardware cache. Then, part of the RAM space is used as the first-level software cache, because the storage space of DMA is sm...
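The chain of caches described in this paragraph can be illustrated with a small host-side simulation in C. This is only a sketch under stated assumptions, not the patented implementation: the type `cache_chain_t`, the functions `cache_init`, `adc_push`, and `cache_read`, and the sizes chosen for the DMA and RAM levels are all hypothetical names and values. Only the 16-word first level and the "flush to the second level when the first level is full" rule come from the text. Because the source is truncated before it says when data moves from DMA storage into RAM, the sketch assumes that this happens when the DMA buffer fills.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define L1_HW_WORDS  16u    /* peripheral register cache; 16 words is from the text */
#define L2_DMA_WORDS 1024u  /* DMA storage, "K-level"; exact size is an assumption  */
#define SW_RAM_WORDS 4096u  /* RAM software cache; size is an assumption            */

/* Hypothetical model of the three cache levels. */
typedef struct {
    uint16_t l1[L1_HW_WORDS];   size_t l1_count;
    uint16_t l2[L2_DMA_WORDS];  size_t l2_count;
    uint16_t ram[SW_RAM_WORDS]; size_t ram_head, ram_count;
    size_t dropped;             /* words lost because the RAM cache was full */
} cache_chain_t;

void cache_init(cache_chain_t *c) { memset(c, 0, sizeof *c); }

/* Assumption: the DMA buffer is emptied into the RAM ring buffer once full. */
static void dma_to_ram(cache_chain_t *c) {
    for (size_t i = 0; i < c->l2_count; i++) {
        if (c->ram_count == SW_RAM_WORDS) { c->dropped++; continue; }
        size_t tail = (c->ram_head + c->ram_count) % SW_RAM_WORDS;
        c->ram[tail] = c->l2[i];
        c->ram_count++;
    }
    c->l2_count = 0;
}

/* Stands in for the DMA interrupt raised when the first level is full. */
static void on_l1_full(cache_chain_t *c) {
    assert(c->l2_count + c->l1_count <= L2_DMA_WORDS);
    memcpy(&c->l2[c->l2_count], c->l1, c->l1_count * sizeof c->l1[0]);
    c->l2_count += c->l1_count;
    c->l1_count = 0;
    if (c->l2_count == L2_DMA_WORDS) dma_to_ram(c);
}

/* One A/D sample arrives in the first-level hardware cache. */
void adc_push(cache_chain_t *c, uint16_t word) {
    c->l1[c->l1_count++] = word;
    if (c->l1_count == L1_HW_WORDS) on_l1_full(c);
}

/* The consumer reads buffered words from the RAM cache in arrival order. */
size_t cache_read(cache_chain_t *c, uint16_t *out, size_t max) {
    size_t n = 0;
    while (n < max && c->ram_count > 0) {
        out[n++] = c->ram[c->ram_head];
        c->ram_head = (c->ram_head + 1) % SW_RAM_WORDS;
        c->ram_count--;
    }
    return n;
}
```

In this model a caller would run `cache_init` once, call `adc_push` for every sample, and call `cache_read` from the consuming task. On real hardware the two copy steps would be performed by the peripheral and the DMA controller rather than by `memcpy` and a loop, so the CPU is only involved when a level fills.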

