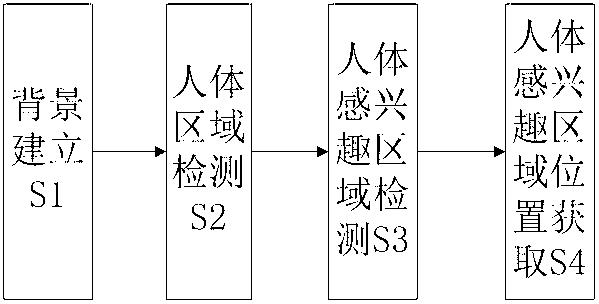

Method for obtaining the position of a human-body region of interest relative to a screen window

A technology concerning a region of interest and a human-body area, in the field of obtaining the position of a human-body region of interest relative to a screen window. It addresses the inconvenient use, high cost, and sensitivity to sensor placement of existing approaches, and achieves simple human-computer interaction.

Examples

Embodiment 1

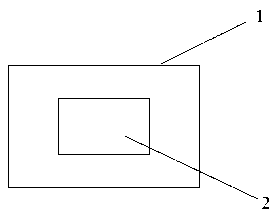

[0026] Embodiment 1: application to motion-sensing (somatosensory) games. The region of interest is the palm, and the user can wear single-color gloves. The glove color should differ markedly from both the clothing and the indoor background, so that the palm area can be extracted accurately and quickly. The video window can be made to coincide with the screen window, so that the palm can move across the entire screen. The game program can then trigger actions according to the position of the palm. Any other body part can also serve as the region of interest, provided its color differs sufficiently from the clothing and the background.
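The Embodiment 1 pipeline can be sketched as three steps: color thresholding to find the glove, centroid extraction, and a linear mapping from video-frame coordinates to screen coordinates. This is a minimal sketch under stated assumptions, not the patent's own implementation: frames are plain lists of RGB tuples, the glove is detected with a per-channel tolerance around a known color, "overlapping" the windows is modeled as scaling the frame so its corners land on the screen corners, and the names `glove_mask`, `centroid`, and `to_screen` are hypothetical.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]
Mask = List[List[bool]]


def glove_mask(frame: List[List[RGB]], target: RGB, tol: int) -> Mask:
    """Mark pixels whose every channel lies within `tol` of the glove color.

    Assumption: a fixed RGB tolerance; the source only requires that the glove
    color differ strongly from clothing and background.
    """
    return [
        [all(abs(c - t) <= tol for c, t in zip(px, target)) for px in row]
        for row in frame
    ]


def centroid(mask: Mask) -> Optional[Tuple[float, float]]:
    """Mean (x, y) of foreground pixels, or None if the glove is not visible."""
    sum_x = 0
    sum_y = 0
    count = 0
    for y, row in enumerate(mask):
        for x, on in enumerate(row):
            if on:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count


def to_screen(
    pt: Tuple[float, float],
    frame_size: Tuple[int, int],
    screen_size: Tuple[int, int],
) -> Tuple[float, float]:
    """Scale a frame point (x, y) so frame corners map onto screen corners.

    Hypothetical model of making the video window coincide with the screen
    window; sizes are (width, height) in pixels.
    """
    x, y = pt
    fw, fh = frame_size
    sw, sh = screen_size
    sx = x * (sw - 1) / max(fw - 1, 1)
    sy = y * (sh - 1) / max(fh - 1, 1)
    return sx, sy
```

A game loop would call these once per frame and move its cursor or sprite to the returned screen point. A production version would more likely threshold in a hue-based color space to tolerate lighting changes; the pure-Python form here only keeps the logic visible.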

Embodiment 2

[0027] Embodiment 2: hand-gesture control of a TV. As in the previous embodiment, the ROI is the palm. The extracted palm features are the centroid position and the area of the minimum circumscribed (bounding) rectangle. This rectangle area differs greatly between an open palm and a clenched fist, so the two can be recognized as distinct states. The application generates control actions from these two states; for example, an open palm indicates selection and a clenched fist indicates execution.
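A minimal sketch of the Embodiment 2 features and the two-state decision, assuming a binary palm mask such as one produced by color thresholding. Two assumptions are worth flagging: the "minimum circumscribed rectangle" is approximated here by the axis-aligned bounding rectangle (a rotated minimum-area rectangle would be more robust to hand tilt), and the area `threshold` and the `ACTIONS` table are hypothetical values that would need calibration for camera distance and user.

```python
from typing import Dict, List, Optional, Tuple

Mask = List[List[bool]]


def palm_features(mask: Mask) -> Optional[Tuple[Tuple[float, float], int]]:
    """Return ((cx, cy), rectangle_area) for the foreground pixels, or None."""
    pts = [(x, y) for y, row in enumerate(mask) for x, on in enumerate(row) if on]
    if not pts:
        return None
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    cx = sum(xs) / len(pts)
    cy = sum(ys) / len(pts)
    # Axis-aligned bounding rectangle with inclusive pixel extents
    # (simplification of the minimum circumscribed rectangle).
    area = (max(xs) - min(xs) + 1) * (max(ys) - min(ys) + 1)
    return (cx, cy), area


def hand_state(area: int, threshold: int) -> str:
    """Classify the hand: a large rectangle means open, a small one clenched."""
    return "open" if area >= threshold else "clenched"


# Hypothetical mapping from hand state to TV control action.
ACTIONS: Dict[str, str] = {"open": "select", "clenched": "execute"}
```

Because the decision depends only on the rectangle area, the centroid remains free to drive cursor position while the open/clenched state acts like a button.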
