Kinect-based people counting method

A people counting and people flow measurement method, applicable to computing, image data processing, computer components, and related fields. It addresses problems such as reduced counting accuracy, image matching difficulties, and loss of tracked targets, and achieves high counting accuracy with simple equipment.

Embodiment Construction

[0018] The Kinect-based people counting method of the present invention will be described in further detail below in conjunction with the accompanying drawings.

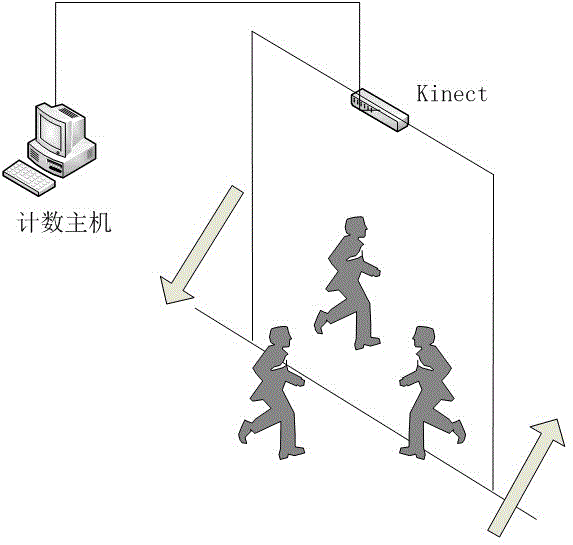

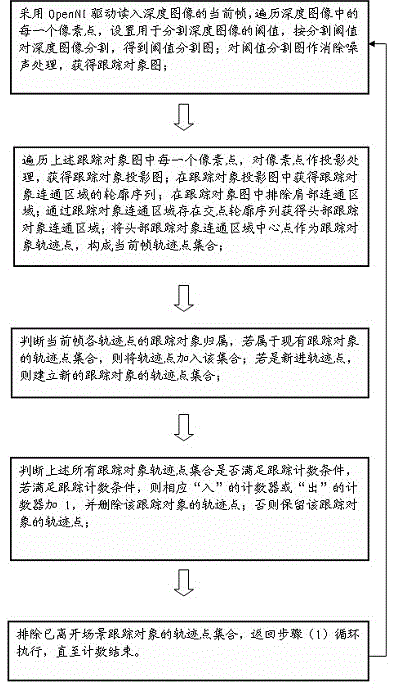

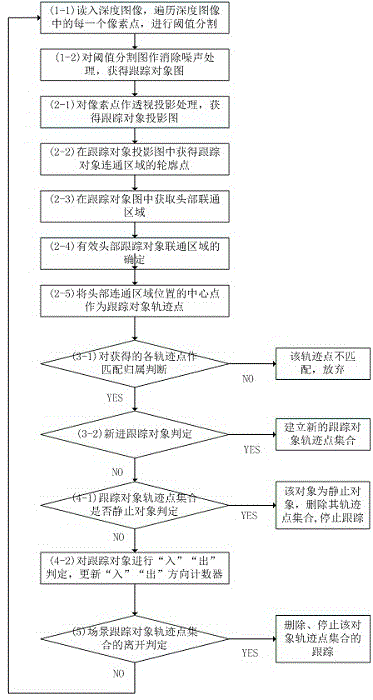

[0019] As shown in Figure 1, the hardware for the Kinect-based people counting method consists of a Kinect depth camera, mounted overhead and facing downward, and a counting host. As shown in Figures 2 and 3, the method comprises the following steps:

[0020] (1) Read in the current frame of the depth image through the OpenNI driver and traverse each pixel in the depth image. Set a threshold T for segmenting the depth image, and segment the image according to this threshold to obtain a threshold segmentation map. The segmentation map is then processed to eliminate noise, yielding the tracking object image. The specific steps are as follows:

[0021] (1-1) As described in step (1) above, read in the depth image through the ...
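Step (1) can be illustrated with a minimal Python sketch. It is not the patent's implementation: the depth frame is assumed to be already available as a NumPy array of millimetre values (the OpenNI read is omitted), pixels closer to the overhead camera than T are assumed to be foreground, a value of 0 is assumed to mark pixels with no depth reading, and the noise-elimination step, which the text does not specify, is stood in for by a 3x3 morphological opening. The function names `threshold_depth` and `remove_noise` are hypothetical.

```python
import numpy as np


def threshold_depth(depth_mm, t_mm):
    """Return a binary mask: 1 where a valid depth reading is closer than t_mm."""
    valid = depth_mm > 0  # assumption: 0 marks pixels with no depth reading
    return (valid & (depth_mm < t_mm)).astype(np.uint8)


def _neighborhoods(mask):
    """Stack the nine 3x3-shifted copies of the mask (zero padded at the border)."""
    padded = np.pad(mask, 1, mode="constant", constant_values=0)
    h, w = mask.shape
    return np.stack(
        [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    )


def erode(mask):
    """Keep a pixel only if its whole 3x3 neighborhood is foreground."""
    return _neighborhoods(mask).min(axis=0)


def dilate(mask):
    """Set a pixel if any pixel in its 3x3 neighborhood is foreground."""
    return _neighborhoods(mask).max(axis=0)


def remove_noise(mask):
    """Morphological opening (assumed stand-in for the unspecified noise step)."""
    return dilate(erode(mask))


if __name__ == "__main__":
    # Synthetic 8x8 frame: floor at 2500 mm, one head-sized blob at 1200 mm,
    # one isolated noisy pixel, and one pixel with no reading.
    depth = np.full((8, 8), 2500, dtype=np.uint16)
    depth[2:6, 2:6] = 1200
    depth[0, 7] = 1000
    depth[7, 0] = 0

    raw_mask = threshold_depth(depth, t_mm=1800)
    tracking_mask = remove_noise(raw_mask)
    print(raw_mask.sum(), tracking_mask.sum())
```

In this sketch the opening removes the isolated pixel while leaving the larger blob intact. A real deployment would choose T from the camera mounting height and the expected range of head heights.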
