Video interactive query method and system based on massive data

A query method and system for massive data, applied in the field of video data query. It addresses the problems that video data cannot provide location information, that a semantic retrieval mechanism is lacking, and that existing approaches cannot meet the needs of smart city video query, with the stated effects of increasing relevance and improving effectiveness.

Examples

Embodiment 1

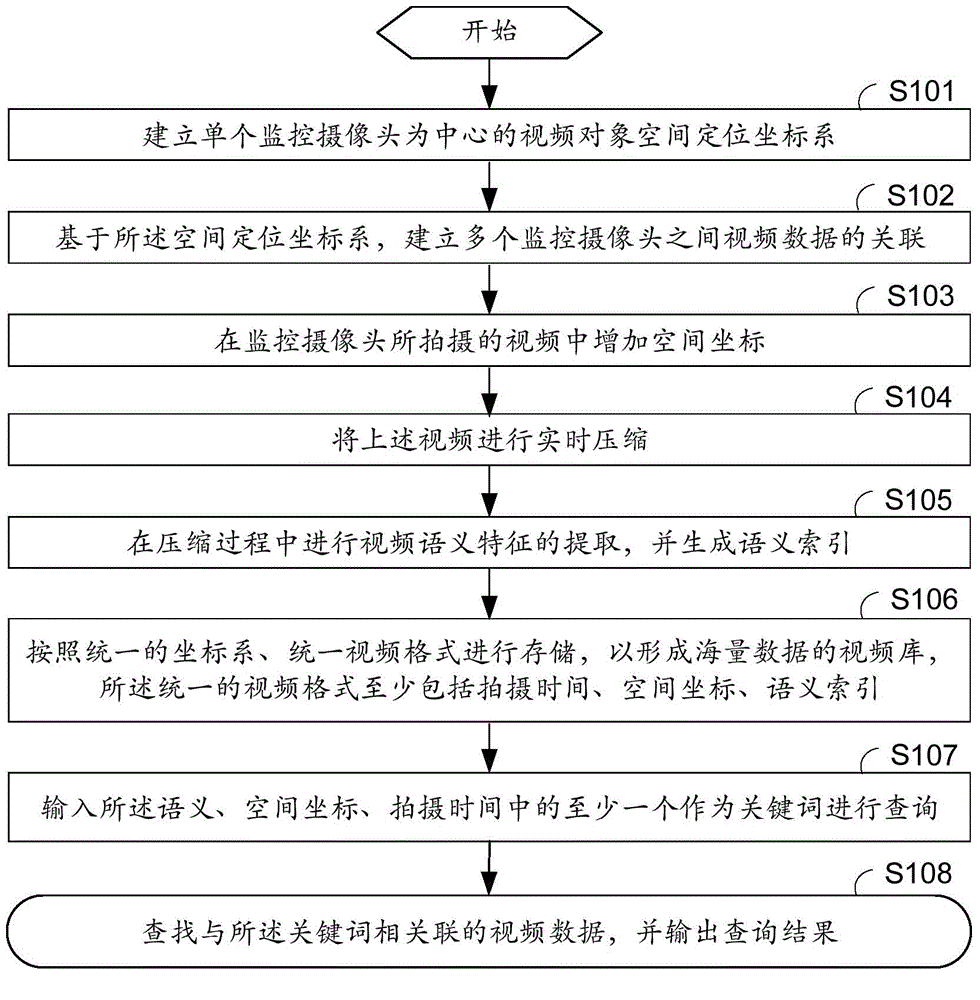

[0028] A video interactive query method based on massive data mainly includes the following steps:

[0029] In step S101, a spatial positioning coordinate system for video objects, centered on a single surveillance camera, is established using a spatial positioning method based on video analysis.
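As a rough illustration of what a camera-centered coordinate system could look like, the sketch below places an object at an offset (metres to the right of and in front of the camera) and maps that offset into a shared planar frame. The source does not specify the positioning method; the `Camera` fields, the heading convention (degrees clockwise from north), and the function `local_to_city` are all assumptions made for this example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    """Hypothetical camera description; field names are not from the source."""
    camera_id: str
    east_m: float       # camera position in a shared planar frame, metres east
    north_m: float      # camera position in a shared planar frame, metres north
    heading_deg: float  # viewing direction, degrees clockwise from north


def local_to_city(cam: Camera, right_m: float, forward_m: float) -> tuple[float, float]:
    """Map a camera-centered offset (right, forward) into the shared frame.

    The forward axis points along the camera heading and the right axis is
    rotated 90 degrees clockwise from it.
    """
    h = math.radians(cam.heading_deg)
    east = cam.east_m + forward_m * math.sin(h) + right_m * math.cos(h)
    north = cam.north_m + forward_m * math.cos(h) - right_m * math.sin(h)
    return east, north


# Illustrative values only: a camera facing east sees an object 10 m ahead.
cam = Camera("cam-1", east_m=100.0, north_m=200.0, heading_deg=90.0)
object_east, object_north = local_to_city(cam, right_m=0.0, forward_m=10.0)
```

Once every camera reports positions in the same frame, objects seen by different cameras become directly comparable, which is the kind of precondition step S102 appears to rely on.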

[0030] In step S102, based on the spatial positioning coordinate system, an association of video data between a plurality of surveillance cameras is established.

[0031] The associations include:

[0032] (1) Association by time, for example, showing the traffic conditions of a road section during the 6:00 p.m. rush hour;

[0033] (2) Association by geographic information, for example, five surveillance cameras installed on a certain street can be associated according to geographic information such as their monitoring ranges and relative distances;

[0034] (3) Association by video object, such as monitoring the video data of the suspected veh...
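The three kinds of association listed above can be sketched as simple filters over clip metadata. This is a minimal sketch, not the patent's implementation: the `Clip` record, its fields, the function names, and the sample data are all hypothetical, and positions are assumed to be expressed in a shared planar frame in metres.

```python
from dataclasses import dataclass
from datetime import datetime
from math import hypot


@dataclass(frozen=True)
class Clip:
    """Hypothetical metadata for one video segment from one camera."""
    camera_id: str
    start: datetime
    end: datetime
    east_m: float
    north_m: float
    object_ids: frozenset = frozenset()


def by_time(clips, window_start, window_end):
    """Clips whose recording interval overlaps the given time window."""
    return [c for c in clips if c.start < window_end and c.end > window_start]


def by_geography(clips, east_m, north_m, radius_m):
    """Clips recorded within radius_m of a point in the shared frame."""
    return [c for c in clips
            if hypot(c.east_m - east_m, c.north_m - north_m) <= radius_m]


def by_object(clips, object_id):
    """Clips containing the given object, ordered by start time."""
    return sorted((c for c in clips if object_id in c.object_ids),
                  key=lambda c: c.start)


# Illustrative data only.
clips = [
    Clip("cam-1", datetime(2024, 1, 1, 17, 50), datetime(2024, 1, 1, 18, 10),
         0.0, 0.0, frozenset({"vehicle-A"})),
    Clip("cam-2", datetime(2024, 1, 1, 18, 5), datetime(2024, 1, 1, 18, 20),
         120.0, 0.0, frozenset({"vehicle-A"})),
    Clip("cam-3", datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 9, 15),
         5000.0, 0.0),
]

rush_hour = by_time(clips, datetime(2024, 1, 1, 18, 0), datetime(2024, 1, 1, 18, 30))
same_street = by_geography(clips, 0.0, 0.0, radius_m=200.0)
vehicle_track = by_object(clips, "vehicle-A")
```

Sorting the object matches by start time gives a rough trajectory across cameras, which is one plausible reading of object-based association.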

Embodiment 2

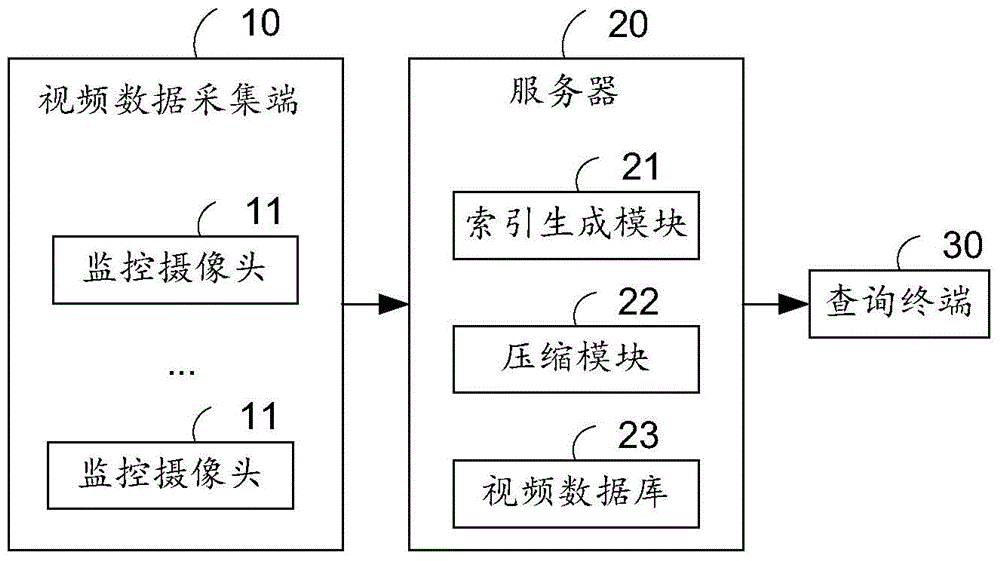

[0053] Figure 2 shows the block diagram of the video interactive query system based on massive data. The query system includes a video data collection terminal 10, a server 20, and a query terminal 30.

[0054] The video data collection terminal 10 provides the content of the video data, the shooting time, and the location information of the shooting location. It mainly comprises a plurality of surveillance cameras 11 distributed throughout the city.

[0055] The server 20 is used for unified management of the video data, including establishing a unified video format, associating video data from multiple sources, and setting indexing rules. Specifically, the server 20 includes:

[0056] The video database 21 is used to establish a unified coordinate system and a unified video format for the collected video data, and to associate the video data according to part of the content of that format.

[0057] The index generation module 22 is used to set index rules and extract vid...
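The description of the index generation module is truncated in the source, so its actual rules are not known. Purely as an illustration of what index rules over video metadata might look like, the sketch below builds an inverted index keyed by hour bucket, camera, and object label. The record fields (`video_id`, `camera_id`, `shot_at`, `objects`) and all function names are hypothetical.

```python
from collections import defaultdict
from datetime import datetime


def index_keys(record):
    """Hypothetical index rule: one key per hour bucket, camera, and object label."""
    keys = [
        ("hour", record["shot_at"].strftime("%Y-%m-%d %H")),
        ("camera", record["camera_id"]),
    ]
    keys += [("object", label) for label in record.get("objects", [])]
    return keys


def build_index(records):
    """Map each index key to the set of video ids that carry it."""
    index = defaultdict(set)
    for record in records:
        for key in index_keys(record):
            index[key].add(record["video_id"])
    return index


def query(index, *keys):
    """Return the ids of videos that match every given key."""
    if not keys:
        return set()
    result = set(index.get(keys[0], set()))
    for key in keys[1:]:
        result &= index.get(key, set())
    return result


# Illustrative records only.
records = [
    {"video_id": "v1", "camera_id": "cam-1",
     "shot_at": datetime(2024, 1, 1, 18, 5), "objects": ["car"]},
    {"video_id": "v2", "camera_id": "cam-2",
     "shot_at": datetime(2024, 1, 1, 18, 40), "objects": ["car", "bus"]},
    {"video_id": "v3", "camera_id": "cam-1",
     "shot_at": datetime(2024, 1, 1, 9, 0), "objects": []},
]

index = build_index(records)
cars_at_six = query(index, ("hour", "2024-01-01 18"), ("object", "car"))
```

Intersecting the sets for several keys keeps a combined query proportional to the size of the matching sets rather than to the whole collection, which matters when the volume of video metadata is large.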
