Multi-interaction method and device based on Kinect and Unity3D

An interaction method and device, applied to input/output for user/computer interaction, input/output processes in data processing, instruments, and similar fields. It addresses the cumbersome 3D registration algorithms, numerous restrictions, and limited interaction modes of existing approaches, and achieves a flexible registration trigger mechanism, simplified displacement changes, and richer interaction methods.


Embodiment Construction

[0035] Embodiments of the present invention will be described in detail below with reference to the accompanying drawings.

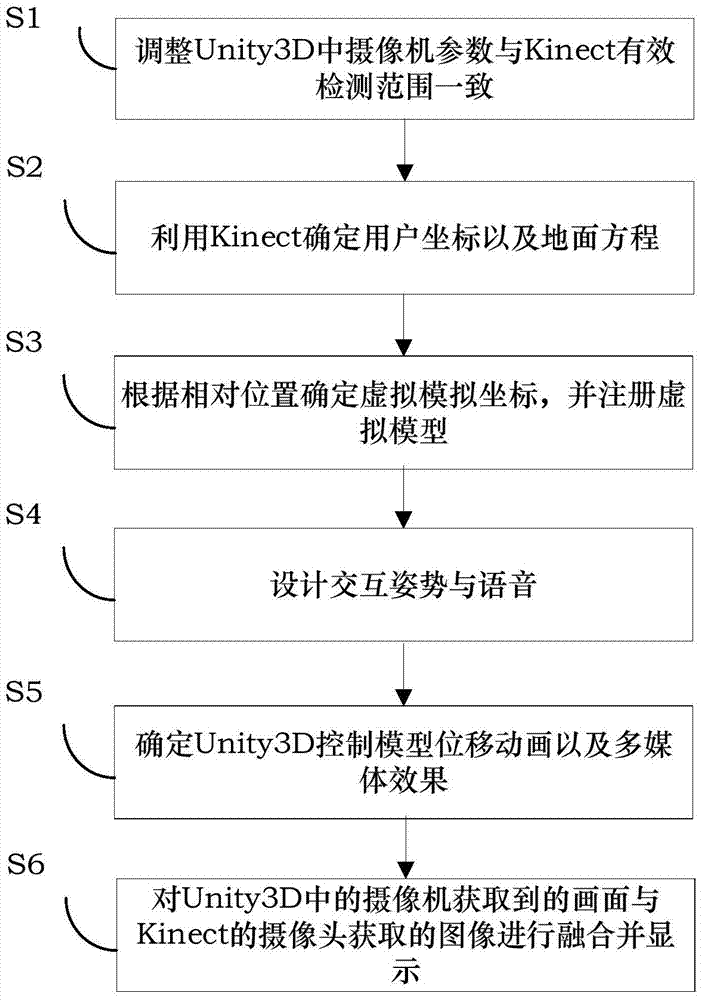

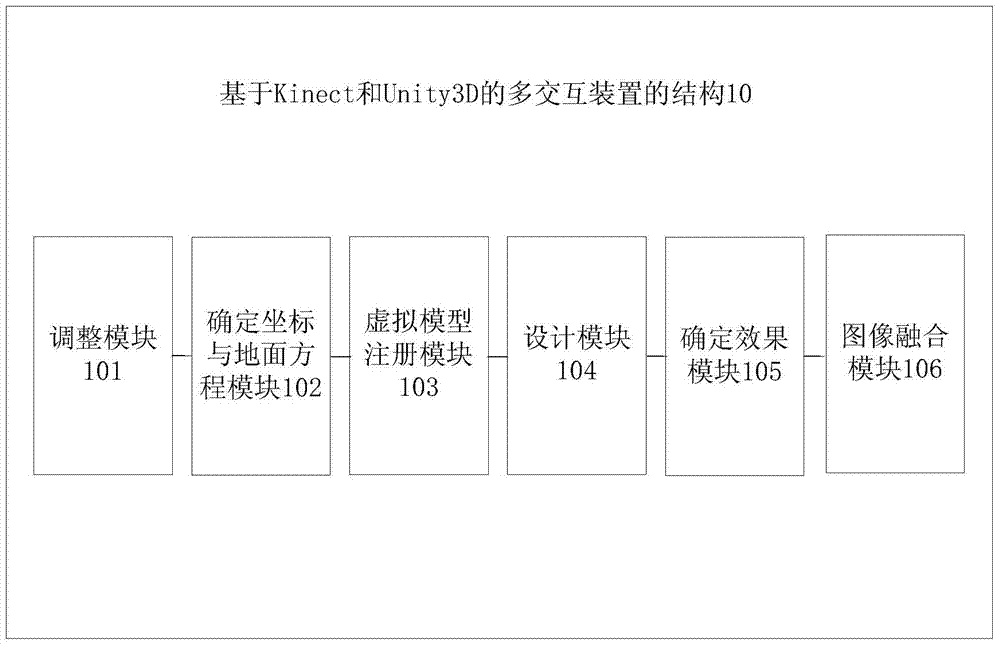

[0036] As shown in Figure 1, the present invention provides a multi-interaction method based on Kinect and Unity3D, comprising the following steps:

[0037] Step S1: Adjust the camera parameters in Unity3D to be consistent with the effective detection range of the Kinect. Specifically, place the Kinect at the preset position in the real scene, and arrange the real scene so that it lies within the Kinect's effective detection range. Here the effective range means a distance of 1.2 to 3.6 meters from the camera, with a field of view of 57 degrees horizontally and 43 degrees vertically.
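As a rough illustration of the effective range described in Step S1, the following Python sketch checks whether a point lies inside it. The helper name `in_effective_range`, the axis convention (sensor at the origin, +z pointing away from the sensor), and the reading of the 1.2 to 3.6 meter distance as depth along the optical axis are assumptions made for this example and are not taken from the patent text.

```python
import math

# Values quoted in the text for the Kinect's effective detection range.
NEAR_M = 1.2       # minimum distance from the camera, in meters
FAR_M = 3.6        # maximum distance from the camera, in meters
H_FOV_DEG = 57.0   # horizontal field of view, in degrees
V_FOV_DEG = 43.0   # vertical field of view, in degrees


def in_effective_range(x: float, y: float, z: float) -> bool:
    """Return True if the point (meters) lies inside the detection volume.

    Hypothetical helper: assumes the sensor sits at the origin, +z points
    away from the sensor, and distance is measured as depth along z.
    """
    if not (NEAR_M <= z <= FAR_M):
        return False
    # Half-extent of the viewing volume at depth z, from the half-angles.
    half_width = z * math.tan(math.radians(H_FOV_DEG / 2))
    half_height = z * math.tan(math.radians(V_FOV_DEG / 2))
    return abs(x) <= half_width and abs(y) <= half_height
```

A check like this could be used to confirm that objects in the real scene have been placed where the sensor can see them before registration begins.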

[0038] Furthermore, in the coordinate system of the data returned by the Kinect, the origin is the Kinect sensor itself, so the camera in Unity3D is placed at the coordinate origin to simplify the calculation of virtual model coordinates during 3D registration. Adjust the Field of View and Clipping Planes...
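The source text is truncated at this point, so the exact values the patent assigns to the camera are not known. As a sketch of one plausible mapping, the Python snippet below derives camera settings from the Kinect figures quoted in Step S1. It assumes the camera's field of view is specified as a vertical angle (as is the default for a Unity3D perspective camera) and that the clipping planes are set to the near and far limits of the detection range. The `CameraSettings` type and the function name are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class CameraSettings:
    """Hypothetical container for the virtual camera parameters."""
    vertical_fov_deg: float
    aspect: float          # width / height of the view frustum
    near_clip: float       # meters
    far_clip: float        # meters
    position: tuple        # camera placed at the coordinate origin


def camera_settings_from_kinect(h_fov_deg: float = 57.0,
                                v_fov_deg: float = 43.0,
                                near_m: float = 1.2,
                                far_m: float = 3.6) -> CameraSettings:
    """Derive camera settings that match the sensor's viewing volume.

    Assumption: the aspect ratio is chosen so the horizontal angle of the
    virtual camera matches the sensor's horizontal field of view.
    """
    aspect = (math.tan(math.radians(h_fov_deg / 2))
              / math.tan(math.radians(v_fov_deg / 2)))
    return CameraSettings(
        vertical_fov_deg=v_fov_deg,
        aspect=aspect,
        near_clip=near_m,
        far_clip=far_m,
        position=(0.0, 0.0, 0.0),
    )


settings = camera_settings_from_kinect()
```

Matching the frustum in this way means a point reported by the sensor and a virtual object placed at the same coordinates project to the same place in the rendered view, which is the property the registration step relies on.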
