Self-adaptation target tracking method based on vision saliency characteristics

An adaptive target tracking technology, applied in the field of computer vision, that addresses the inability to track targets effectively and achieves good real-time performance, improved stability, and reduced tracking error.


Embodiment Construction

[0034] The present invention will be further described below in conjunction with the accompanying drawings.

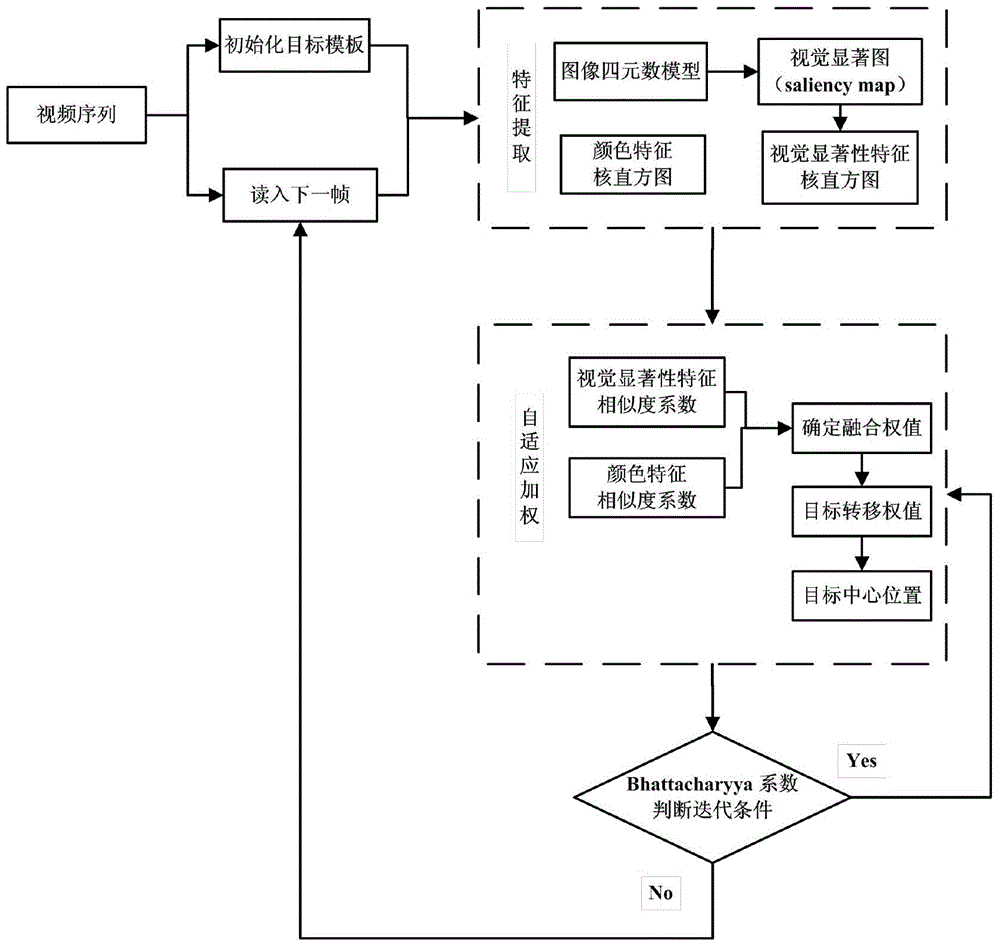

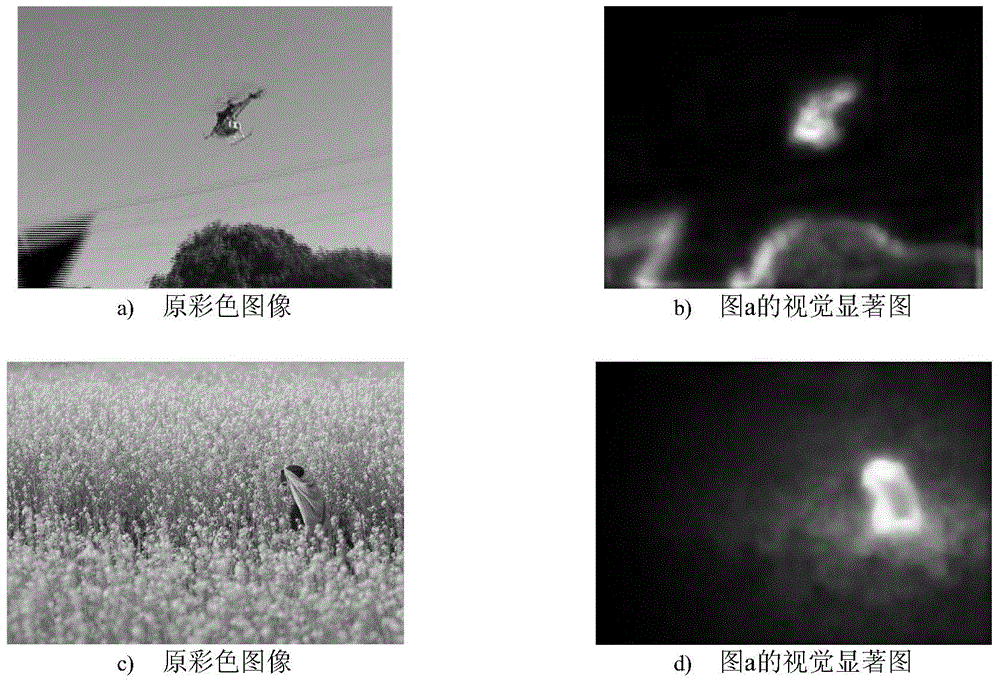

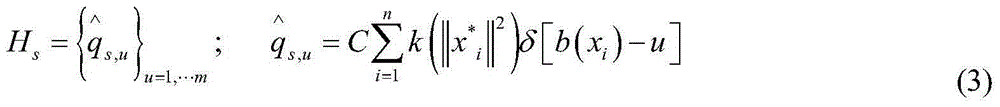

[0035] The principle of the method of the present invention is to detect the target's visual saliency map by frequency-domain filtering, describe the target model using color features combined with visual saliency features, and adaptively adjust the fusion weights of the transfer vectors according to the magnitude of the similarity coefficients, so as to achieve accurate target tracking against complex backgrounds. The visual saliency feature effectively enhances the target and suppresses interference; integrating the features of the visually salient region strengthens the description of the target within the candidate area and weakens the interference of background information. Using the visual attention mechanism to effectively screen out salient information and provide it to the target tracking method can improve the efficiency of informat...
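The two ideas in this paragraph, a frequency-domain saliency map and similarity-weighted fusion of transfer vectors, can be illustrated with a minimal sketch. The excerpt names neither the specific filter nor the weighting formula, so the code below makes its own assumptions: it uses the well-known spectral residual approach as a stand-in for the frequency-domain filtering, takes the Bhattacharyya coefficient as the similarity coefficient, and normalizes the two coefficients to obtain the fusion weights. All function names are hypothetical and are not taken from the patent.

```python
import numpy as np


def box_blur(a, k=3):
    """Mean filter with a k x k window (k odd), edges replicated."""
    pad = k // 2
    p = np.pad(a, pad, mode="edge")
    out = np.zeros(a.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += p[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out / (k * k)


def spectral_residual_saliency(gray):
    """Saliency map via the spectral residual method (an assumed
    stand-in for the frequency-domain filtering in the text)."""
    f = np.fft.fft2(gray.astype(float))
    log_amp = np.log(np.abs(f) + 1e-8)
    phase = np.angle(f)
    # The residual is the log-amplitude minus its local average.
    residual = log_amp - box_blur(log_amp, 3)
    sal = np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2
    sal = box_blur(sal, 5)  # smooth the map
    return (sal - sal.min()) / (sal.max() - sal.min() + 1e-12)


def bhattacharyya(p, q):
    """Similarity coefficient between two normalized histograms
    (assumed choice of similarity measure)."""
    return float(np.sum(np.sqrt(p * q)))


def fuse_shift_vectors(shift_color, shift_sal, rho_color, rho_sal):
    """Fuse the color-based and saliency-based transfer vectors.
    Assumption: each weight is proportional to its feature's
    similarity coefficient, so the more reliable feature dominates."""
    total = rho_color + rho_sal
    w_color = rho_color / total if total > 0 else 0.5
    return (w_color * np.asarray(shift_color, dtype=float)
            + (1.0 - w_color) * np.asarray(shift_sal, dtype=float))
```

In a tracker built along these lines, each frame would yield one transfer vector per feature, and `fuse_shift_vectors` would combine them into the single displacement applied to the candidate window. When the color histogram matches poorly (for example, under background clutter of similar color), its coefficient drops and the saliency-based vector carries more weight.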
