Infrared and visible light image fusion method based on salient objects

An infrared and visible light image fusion technology, applied in image enhancement, image data processing, instruments, etc.

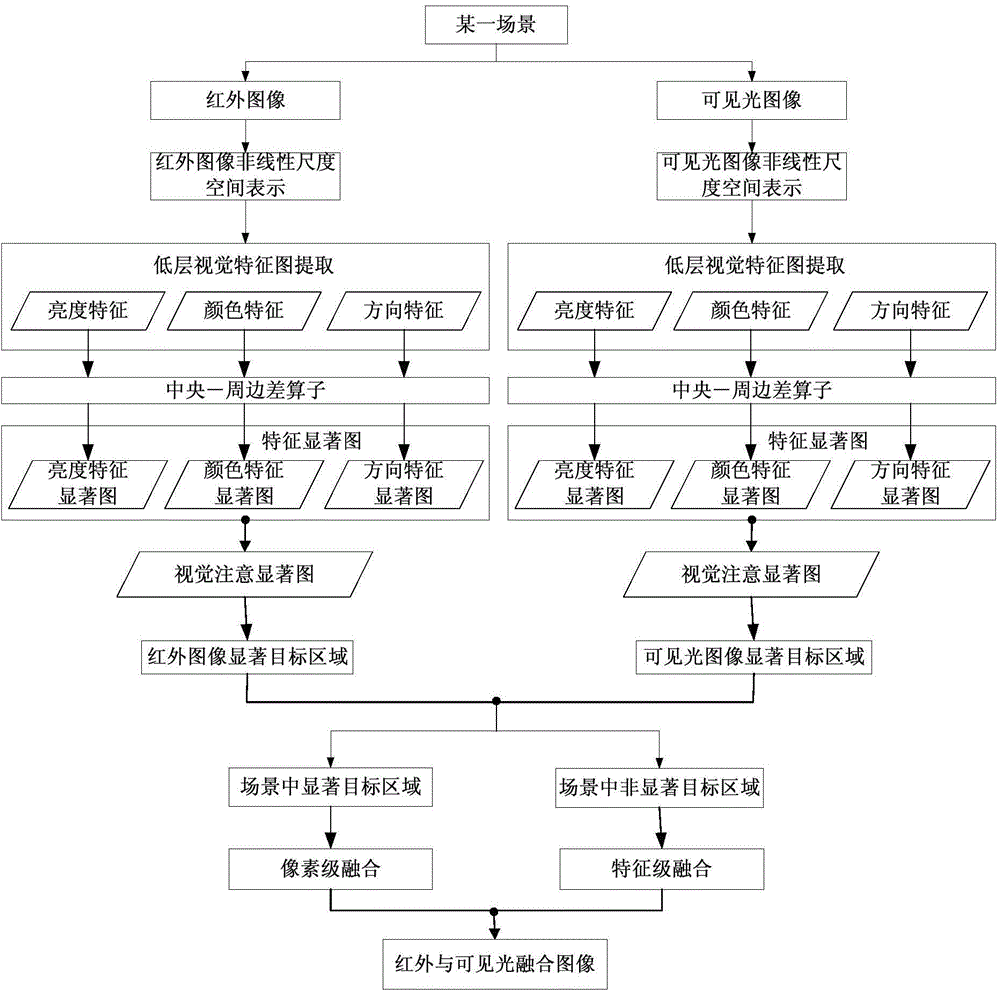

Examples

Embodiment

[0159] The implementation of the present invention is illustrated below with a specific example.

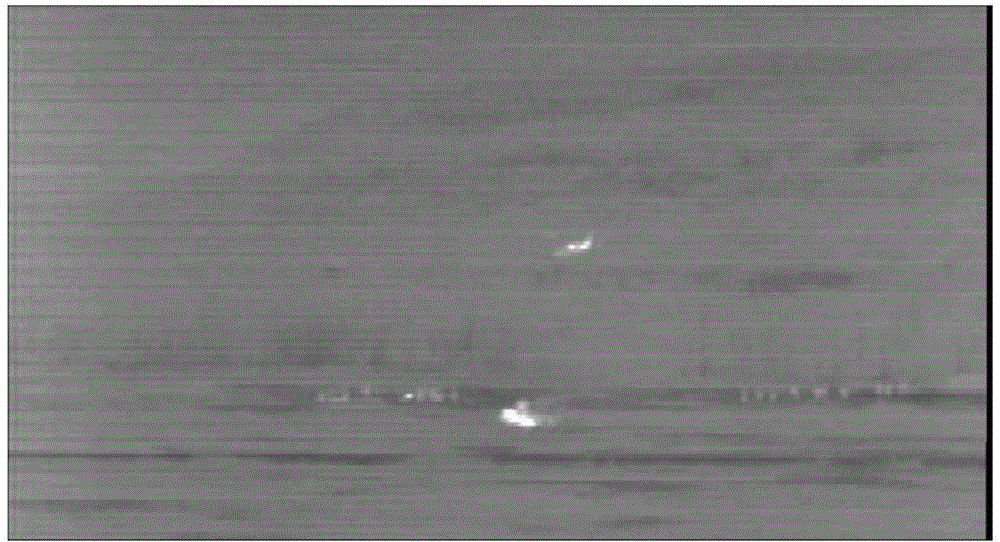

[0160] Figure 2 is an infrared image of a scene, and Figure 3 is a visible light image of the scene.

[0161] As described in step 1, first establish the nonlinear scale space representations of the infrared image and the visible light image, with the edge threshold λ set to 0.5.
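The excerpt does not say which diffusion model builds the nonlinear scale space, so the following is only a minimal sketch under the assumption of Perona-Malik-style diffusion, in which the conductivity g = 1 / (1 + (|∇L| / λ)²) suppresses smoothing across gradients larger than the edge threshold λ. The function names, the time step `dt`, and the `levels` and `steps_per_level` values are hypothetical choices for illustration and are not taken from the patent.

```python
def conductivity(grad, lam):
    """Assumed Perona-Malik conductivity: close to 1 in flat regions,
    close to 0 where the local difference is much larger than lam."""
    return 1.0 / (1.0 + (grad / lam) ** 2)


def diffuse_step(img, lam=0.5, dt=0.2):
    """One explicit diffusion step on a 2-D list of floats.

    dt must stay at or below 0.25 for the 4-neighbour scheme to be stable.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                # Replicate the border so no intensity leaks out of the image.
                ny = min(max(y + dy, 0), h - 1)
                nx = min(max(x + dx, 0), w - 1)
                diff = img[ny][nx] - img[y][x]
                total += conductivity(abs(diff), lam) * diff
            out[y][x] = img[y][x] + dt * total
    return out


def scale_space(img, lam=0.5, levels=4, steps_per_level=5):
    """Return a list of progressively smoothed images, starting with img."""
    space = [img]
    cur = img
    for _ in range(levels - 1):
        for _ in range(steps_per_level):
            cur = diffuse_step(cur, lam)
        space.append(cur)
    return space
```

Under this assumption, the same `scale_space` call would be applied separately to the infrared image and to the visible light image with `lam=0.5`. A practical implementation would more likely use an array library and a semi-implicit solver; the pure-Python loops here only make the update rule explicit.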

[0162] As described in step 2, calculate the brightness, color and direction visual feature maps of the infrared image and the visible light image, then the corresponding brightness, color and direction saliency maps, and from these calculate the visual attention saliency map of the infrared image and of the visible light image.
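The excerpt does not state how the three per-feature saliency maps are merged into the visual attention saliency map. As a rough sketch, assuming the common approach of rescaling each map to [0, 1] and averaging them with equal weights, the combination could look like the following. The names `normalize` and `combine_saliency` and the equal weighting are assumptions, not the patent's stated method.

```python
def normalize(m):
    """Rescale a 2-D map to [0, 1]; a constant map becomes all zeros."""
    lo = min(min(row) for row in m)
    hi = max(max(row) for row in m)
    if hi == lo:
        return [[0.0 for _ in row] for row in m]
    return [[(v - lo) / (hi - lo) for v in row] for row in m]


def combine_saliency(brightness, color, direction):
    """Average three normalized saliency maps of identical size
    (assumed equal weights)."""
    maps = [normalize(m) for m in (brightness, color, direction)]
    h, w = len(brightness), len(brightness[0])
    return [
        [sum(m[y][x] for m in maps) / 3.0 for x in range(w)]
        for y in range(h)
    ]
```

Under this assumption the function would be called once per image, giving one visual attention saliency map for the infrared image and one for the visible light image.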

[0163] According to step 3, the number of salient target areas is calculated to be 5 in the infrared image and 4 in the visible light image, of which 3 salient target areas appear in both the infrared image and the visible light image, and the salient target ...
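The counts above imply a simple partition of the salient target areas, which can be checked with a short sketch. The helper name `partition_counts` is hypothetical, and how the method treats each group is not visible in this excerpt, so only the arithmetic is shown.

```python
def partition_counts(n_infrared, n_visible, n_common):
    """Split salient target area counts into infrared-only, visible-only,
    and common groups, and count the distinct areas overall."""
    if n_common > min(n_infrared, n_visible):
        raise ValueError("common count cannot exceed either image's count")
    return {
        "infrared_only": n_infrared - n_common,
        "visible_only": n_visible - n_common,
        "common": n_common,
        "distinct_total": n_infrared + n_visible - n_common,
    }


counts = partition_counts(5, 4, 3)
print(counts)
# {'infrared_only': 2, 'visible_only': 1, 'common': 3, 'distinct_total': 6}
```

With the values from this example, 2 areas are salient only in the infrared image, 1 only in the visible light image, and 3 in both, for 6 distinct salient target areas.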
