Video Concentration Method Based on Adaptive Object Size

A video condensation technology that adapts to object size, applied in image data processing, instruments, computing and related fields. It addresses collisions and occlusions between moving objects in the condensed video and reduces their mutual collision and occlusion.

Embodiment Construction

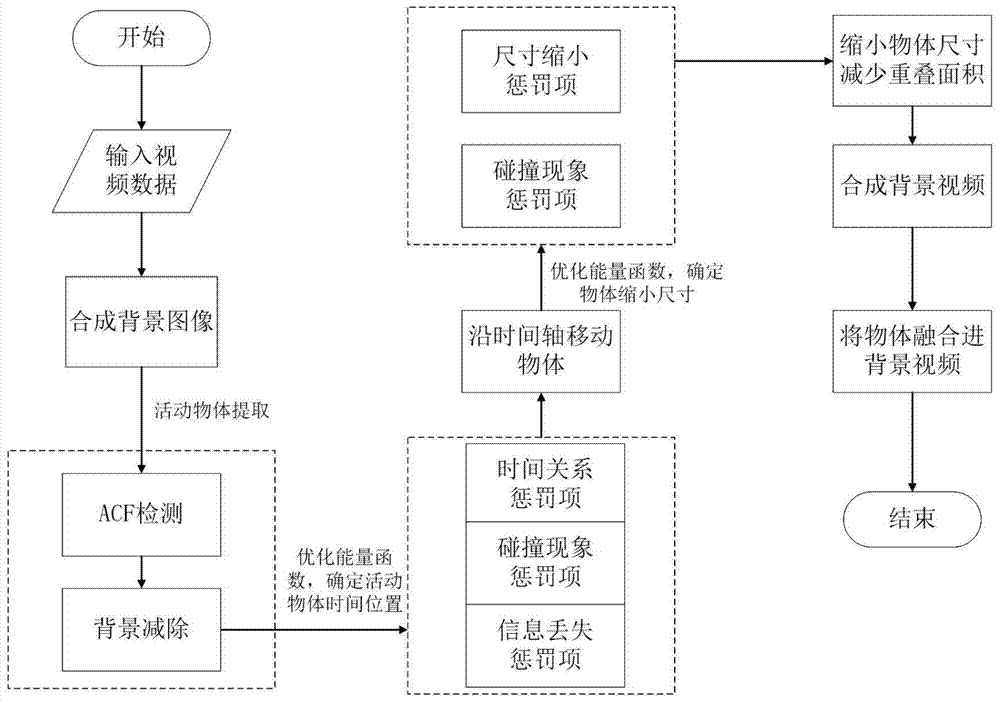

[0041] Refer to Figure 1. The specific steps of the object-size-adaptive video concentration method of the present invention are as follows:

[0042] 1. Synthesize the background image.

[0043] For each frame of the input video, a corresponding background image is synthesized. Specifically, the frames from the 30 seconds before the current frame and the 30 seconds after it are averaged, and the resulting average image is used as the background for the current frame. A frame at the beginning of the original video has no preceding frames, so only the frames after it are used. A frame at the end of the original video has no following frames, so only the frames before it are used. The background image synthesis formula is:

[0044]

$$B = \frac{1}{n}\sum_{i=1}^{n} I_i$$

[0045] In the formula, B represents the background image to be synthesized, I represents a frame in the selected video sequence, and n represents the total number of ...
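The averaging step above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the patent's implementation:

- The function name `synthesize_backgrounds` and its parameters `fps` and `window_sec` are hypothetical.
- The frames are assumed to be one NumPy array of shape (N, H, W) or (N, H, W, C).
- The current frame is assumed to be included in its own averaging window, which the excerpt does not state.
- Frames near either end of the video simply use whatever part of the ±30 s window exists.
- The cumulative-sum (prefix-sum) trick is an efficiency choice added here; the source does not describe it.

```python
import numpy as np


def synthesize_backgrounds(frames: np.ndarray, fps: float,
                           window_sec: float = 30.0) -> np.ndarray:
    """Return one background image per frame by temporal averaging.

    Hypothetical helper (not from the patent). Assumptions:
    - frames has shape (N, H, W) or (N, H, W, C);
    - the window covers window_sec seconds before and after frame t,
      includes frame t itself, and is clipped at the video boundaries.
    """
    n_frames = frames.shape[0]
    # Window half-width in frames, e.g. 30 s at 25 fps -> 750 frames.
    half = int(round(window_sec * fps))

    # prefix[k] holds the sum of frames[0..k-1], accumulated in float64
    # so that 8-bit inputs do not overflow.
    prefix = np.zeros((n_frames + 1,) + frames.shape[1:], dtype=np.float64)
    prefix[1:] = np.cumsum(frames, axis=0, dtype=np.float64)

    backgrounds = np.empty(frames.shape, dtype=np.float64)
    for t in range(n_frames):
        lo = max(0, t - half)              # clipped at the start of the video
        hi = min(n_frames, t + half + 1)   # exclusive bound, clipped at the end
        n = hi - lo                        # number of frames averaged
        # B = (1/n) * sum of I_i over the window
        backgrounds[t] = (prefix[hi] - prefix[lo]) / n
    return backgrounds
```

For example, a 25 fps video with the default `window_sec=30.0` gives a half-width of 750 frames, so an interior frame is averaged over up to 1,501 frames.

The prefix sums are computed once, so each background costs one subtraction and one division whatever the window length. The trade-off is holding an (N+1)-frame float64 array in memory, so a long video would likely need chunked or streaming processing. If 8-bit images are needed, the result can be converted with `np.clip(bg, 0, 255).astype(np.uint8)`.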

© 2025 PatSnap. All rights reserved.