Remote sensing target detection method based on sparse guidance and saliency driving

A target detection and saliency technology, applied in the fields of instruments, character and pattern recognition, and scene recognition. It addresses problems such as complex computation, category recognition of non-salient regions, and extraction of target regions, with the aim of obtaining comprehensive category information and a clear recognition result.


Embodiment Construction

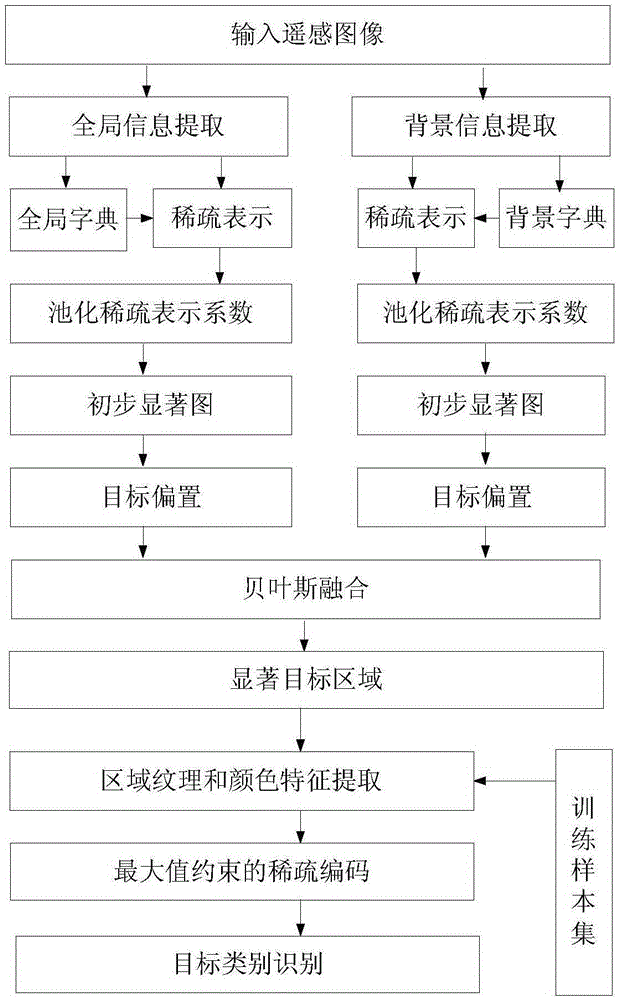

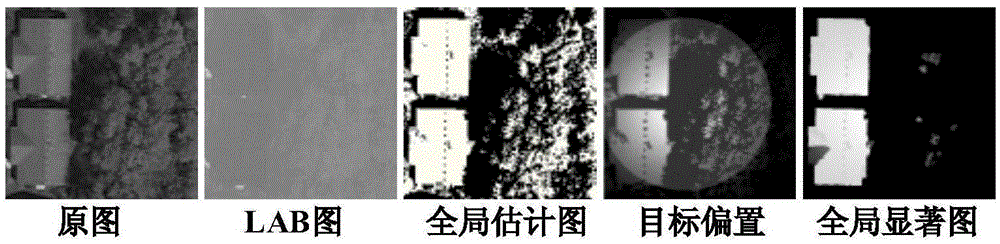

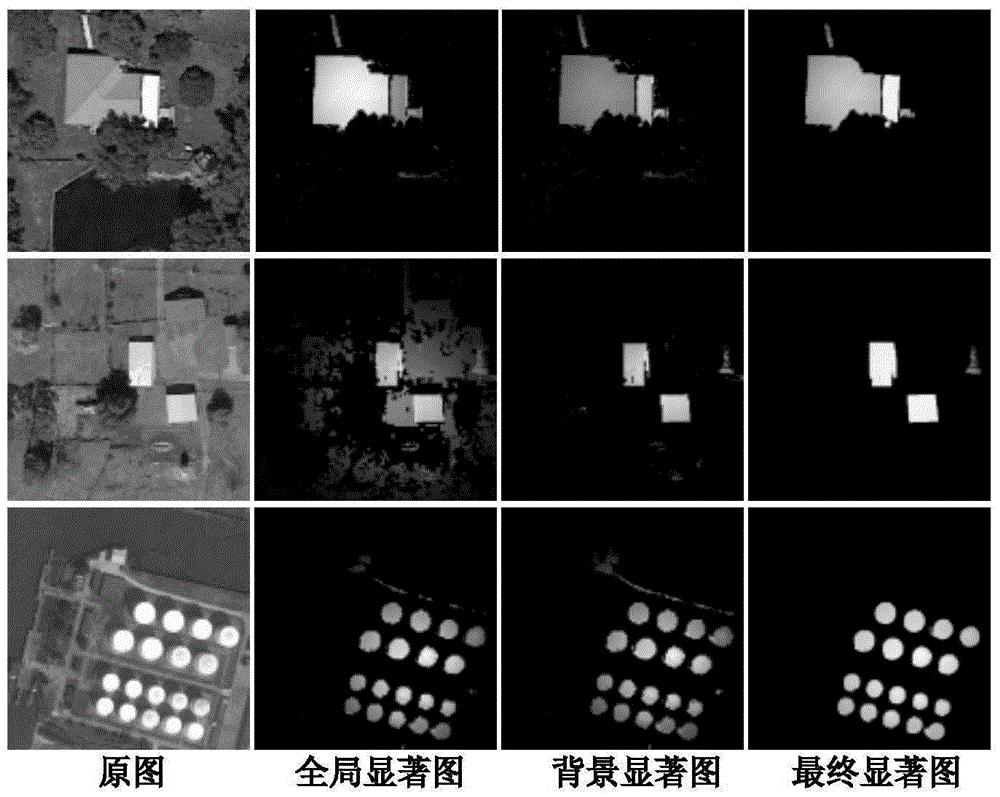

[0026] As shown in Figure 1, the present invention is directed to a remote sensing target detection method based on sparse guidance and saliency driving. The specific implementation steps are as follows:

[0027] Step 1: Divide the input remote sensing image into several sub-blocks. Extract the color features of all sub-blocks and cluster them to form a global dictionary; at the same time, extract the color features of the sub-blocks on the image boundary as background features and cluster them to form a background dictionary.
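Step 1 can be illustrated with a minimal NumPy sketch. It rests on several assumptions that the text does not confirm: the image has already been converted to the LAB color space, sub-blocks are square and non-overlapping, each sub-block's feature is simply its unfolded pixel values, and the clustering is plain k-means. The function names, block size, and dictionary sizes (`block`, `k_global`, `k_background`) are hypothetical and chosen only for illustration.

```python
import numpy as np


def split_into_blocks(image, block):
    """Split an H x W x 3 image into non-overlapping block x block sub-blocks.

    Returns a T x (block*block*3) feature matrix (one unfolded sub-block per
    row) and the (rows, cols) layout of the sub-block grid.
    """
    h, w, c = image.shape
    rows, cols = h // block, w // block
    cropped = image[:rows * block, :cols * block]  # drop any ragged edge
    blocks = cropped.reshape(rows, block, cols, block, c).swapaxes(1, 2)
    return blocks.reshape(rows * cols, block * block * c), (rows, cols)


def boundary_mask(rows, cols):
    """Boolean mask (flattened, row-major) marking sub-blocks on the image border."""
    mask = np.zeros((rows, cols), dtype=bool)
    mask[0, :] = mask[-1, :] = True
    mask[:, 0] = mask[:, -1] = True
    return mask.ravel()


def kmeans(features, k, iters=20, seed=0):
    """Plain k-means (an assumed clustering choice); returns k cluster centers."""
    rng = np.random.default_rng(seed)
    picks = rng.choice(len(features), size=k, replace=False)
    centers = features[picks].astype(float)
    for _ in range(iters):
        # Squared Euclidean distance from every feature to every center.
        dists = ((features[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = features[labels == j]
            if len(members):  # keep the old center if a cluster is empty
                centers[j] = members.mean(axis=0)
    return centers


def build_dictionaries(lab_image, block=8, k_global=16, k_background=8):
    """Cluster all sub-blocks into a global dictionary and the boundary
    sub-blocks into a background dictionary."""
    feats, (rows, cols) = split_into_blocks(lab_image, block)
    global_dict = kmeans(feats, k_global)
    background_dict = kmeans(feats[boundary_mask(rows, cols)], k_background)
    return global_dict, background_dict
```

For a 64 x 64 LAB image with `block=8`, this gives T = 64 sub-blocks, 28 of which lie on the boundary. Each dictionary row is one cluster center in the unfolded sub-block feature space.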

[0028] (1) Construct a global dictionary

[0029] The input remote sensing image is divided into T sub-blocks, where T is an integer greater than 1, and each sub-block is represented by its color features in the LAB color space to form a sub-block matrix. For the global set, the matrix formed by unfolding the color features of the i-th sub-block is defined as G_lab(i), 1≤i≤T, where G is the name of the matrix, lab is the name of t...
