Obtaining metrics for a position using frames classified by an associative memory

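A technique involving associative memory and classified frames, applied in areas such as memory systems, memory addressing/allocation/relocation, and instruments, which can solve problems such as the undesired measurement of additional metrics.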

Embodiment approach

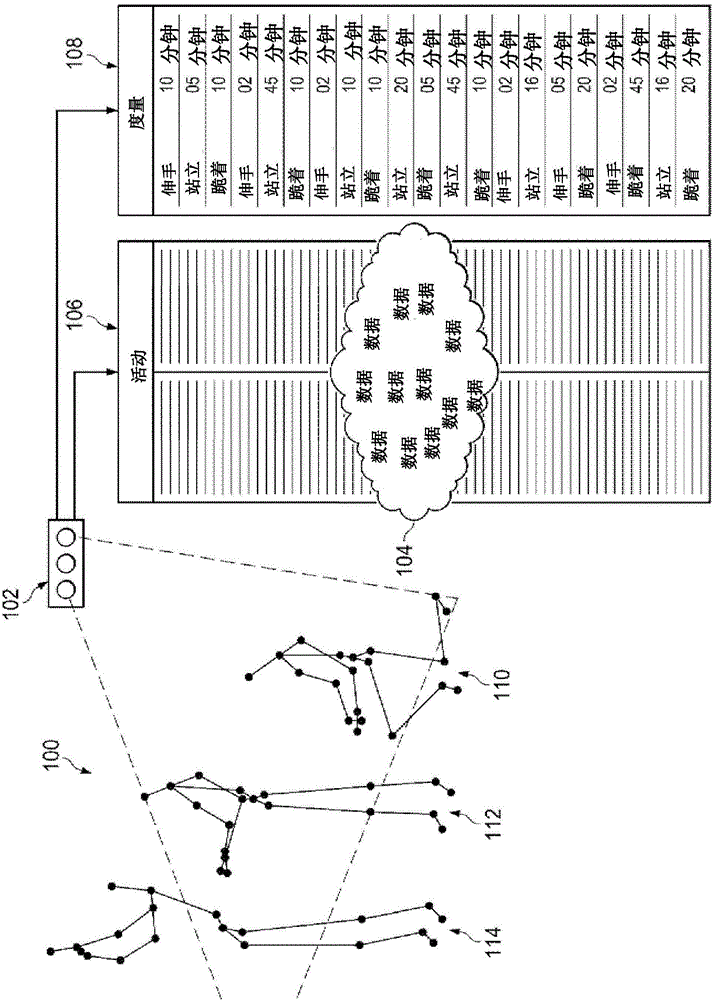

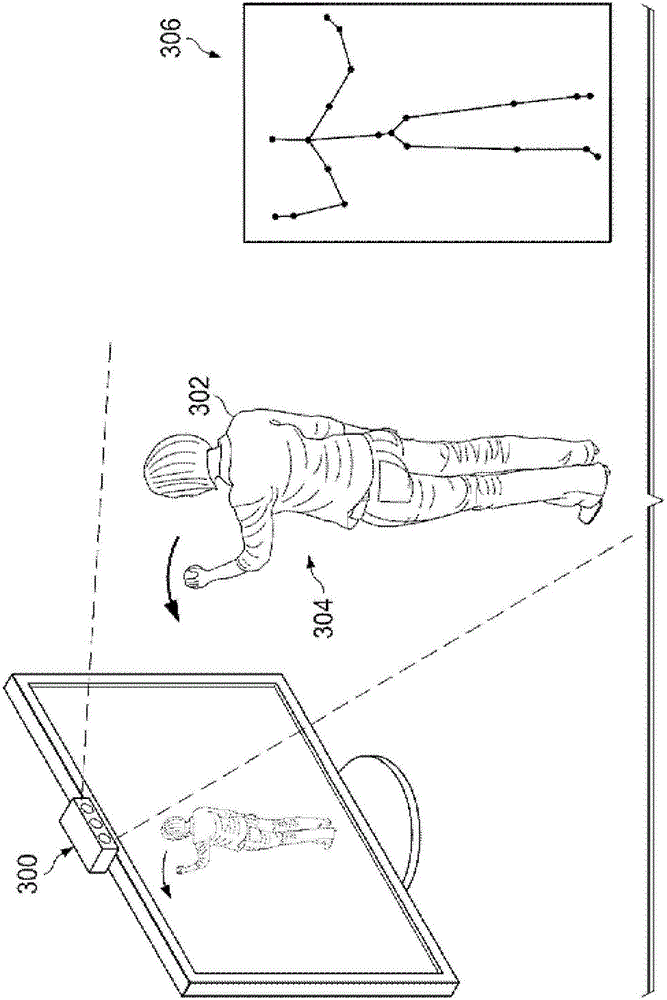

[0083] In this implementation, the user sets up a predefined database and loads into it the training data captured by the motion sensor. Each item of training data is labeled with the pose for which a metric is desired. The user then ingests this data into the associative memory, which uses it to classify new observations. The resulting data serves as a general classifier.
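The labeling and ingestion step described in paragraph [0083] can be illustrated with a minimal Python sketch. It is not the patented associative memory: it assumes, purely for illustration, that each training frame has already been reduced to a set of discrete attribute strings, and it stands in for the memory with a plain inverted index. The names `TRAINING_FRAMES` and `build_memory`, and the attribute values, are hypothetical.

```python
from collections import defaultdict

# Hypothetical training frames: a set of discretized sensor attributes
# plus the pose label assigned to that frame (assumed data, not from the source).
TRAINING_FRAMES = [
    ({"elbow:straight", "shoulder:raised", "wrist:forward"}, "reach out"),
    ({"elbow:bent", "shoulder:lowered", "wrist:neutral"}, "rest"),
    ({"elbow:straight", "shoulder:raised", "wrist:neutral"}, "reach out"),
]


def build_memory(frames):
    """Store labeled frames and index them by attribute.

    Returns (entities, index): entities maps an entity id to its
    (attributes, label) pair; index maps each attribute to the ids of
    all entities that carry it, so related frames can be looked up.
    """
    entities = {}
    index = defaultdict(set)
    for entity_id, (attributes, label) in enumerate(frames):
        entities[entity_id] = (frozenset(attributes), label)
        for attribute in attributes:
            index[attribute].add(entity_id)
    return entities, dict(index)


entities, index = build_memory(TRAINING_FRAMES)
```

In this sketch, looking up `index["shoulder:raised"]` returns the ids of both frames labeled "reach out", which is the kind of association a later comparison step would rely on.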

[0084] Once the data has been ingested, the user can have the system periodically capture motion data from the motion sensor and perform an entity comparison on the captured data to locate other, similar motions. The result category of the entity comparison is set to "result". The new observation therefore adopts the motion result to which it is most equivalent, as shown in Figure 10. Thus, for example, the set of common attributes 1002 of the results 1004 belonging to “reach out” matches those attributes of...
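The entity comparison described in paragraph [0084] can be approximated, as a rough sketch only, by counting the attributes a new observation shares with each labeled frame and adopting the label of the closest match. This simple overlap count is an assumed stand-in for the associative memory's own comparison, and `LABELED_FRAMES`, `classify`, and the attribute strings are hypothetical.

```python
# Hypothetical labeled frames already held by the classifier (assumed data).
LABELED_FRAMES = [
    (frozenset({"elbow:straight", "shoulder:raised", "wrist:forward"}), "reach out"),
    (frozenset({"elbow:bent", "shoulder:lowered", "wrist:neutral"}), "rest"),
]


def classify(observation, labeled_frames):
    """Return (label, common_attributes) for the most similar labeled frame.

    Similarity here is simply the number of shared attributes; ties keep
    the earliest frame. Returns (None, frozenset()) if nothing is shared.
    """
    best_label = None
    best_common = frozenset()
    for attributes, label in labeled_frames:
        common = frozenset(observation) & attributes
        if len(common) > len(best_common):
            best_label, best_common = label, common
    return best_label, best_common


# A newly captured frame, reduced to the same hypothetical attribute form.
new_observation = {"elbow:straight", "shoulder:raised", "wrist:neutral"}
label, common = classify(new_observation, LABELED_FRAMES)
```

Here the new observation shares two attributes with the "reach out" frame and one with the "rest" frame, so it adopts the "reach out" label; `common` plays the role of the set of common attributes mentioned in the text.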
