Human behavior identification method based on mobile equipment

A mobile-device-based recognition method, applied in character and pattern recognition, instruments, computer components, etc., that addresses problems such as the poor accuracy and poor generality of existing human behavior recognition methods.

Embodiment Construction

[0044] The present invention will be further described below in conjunction with the accompanying drawings.

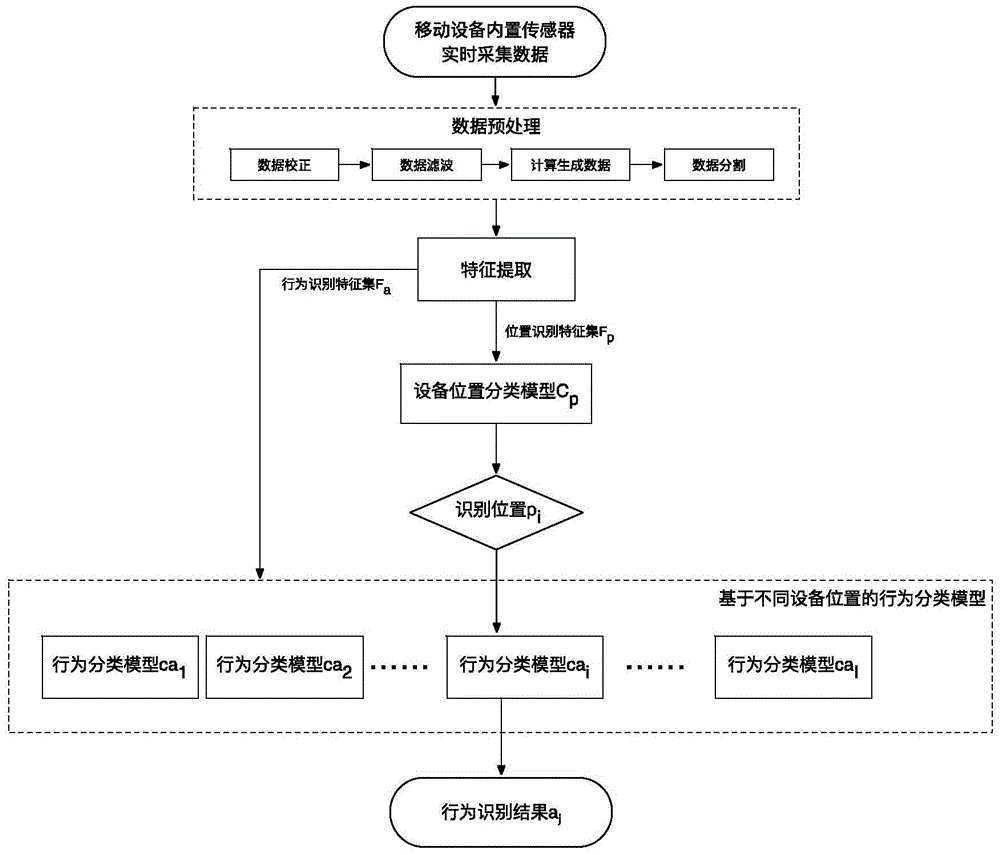

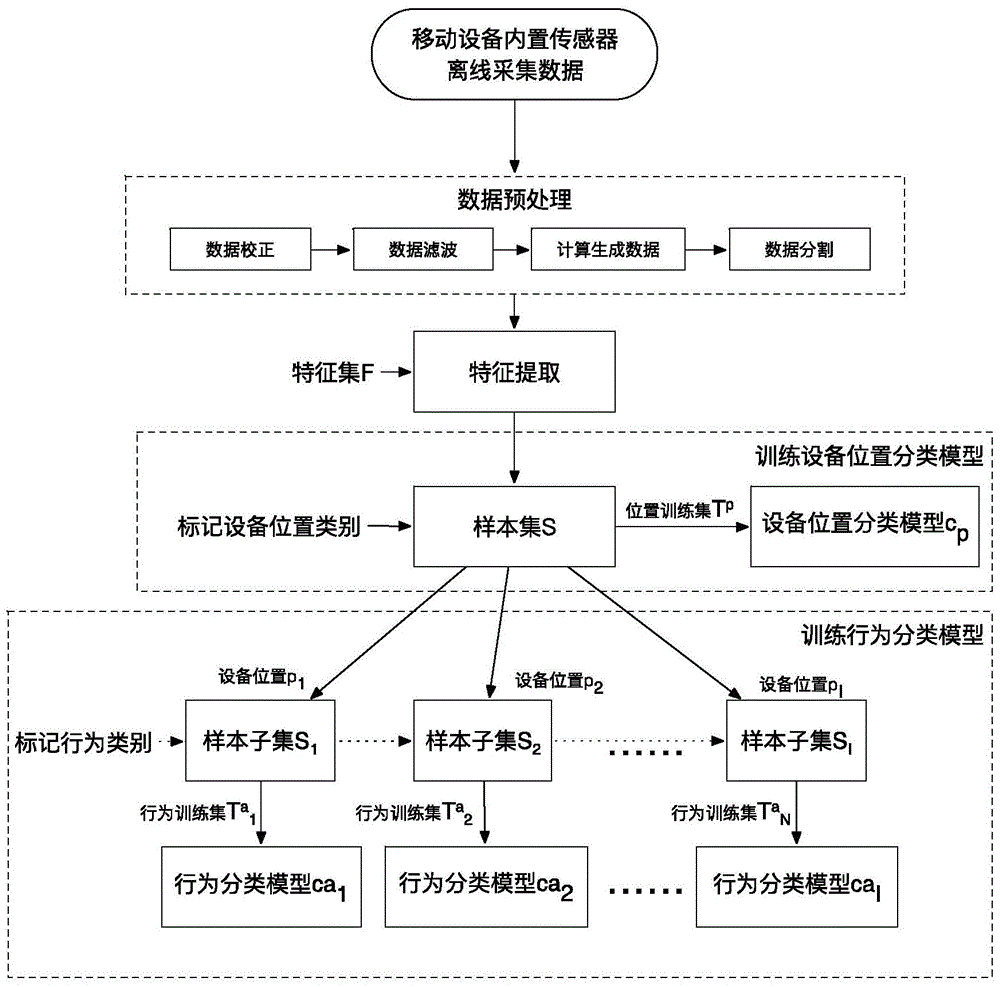

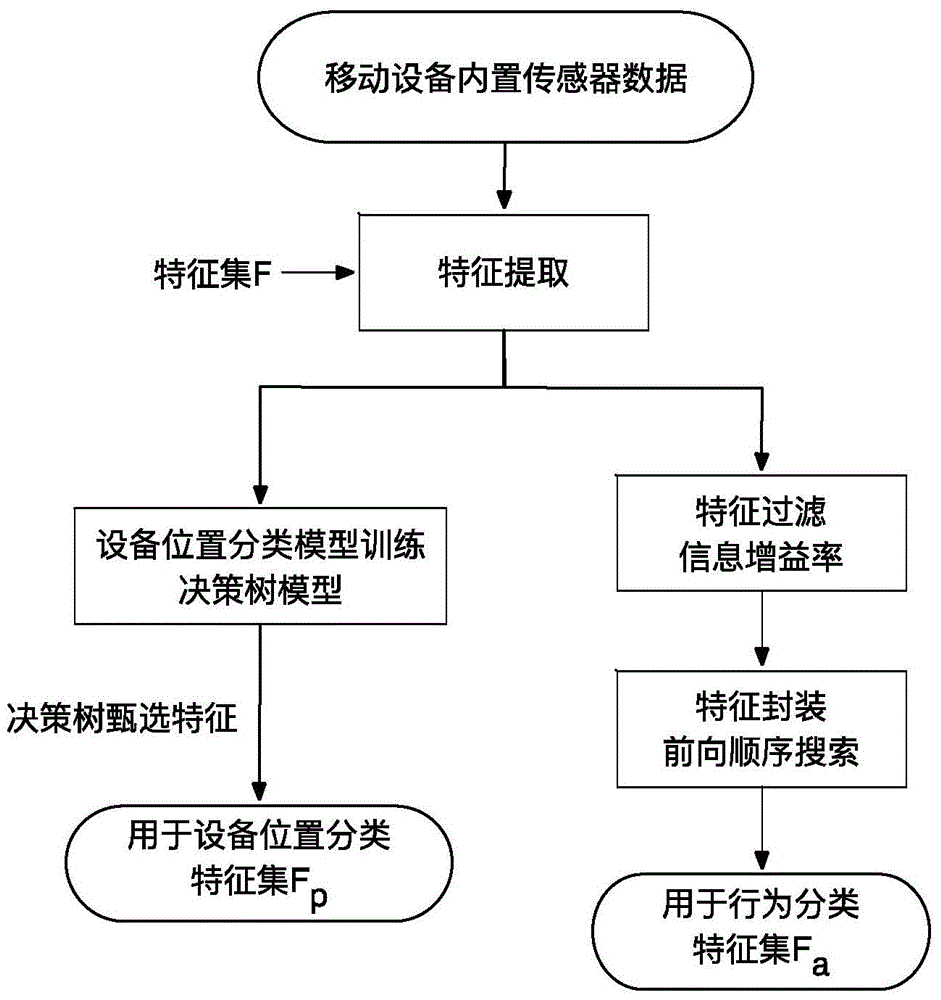

[0045] Referring to Figures 1 to 3, a mobile-device-based human behavior recognition method comprises the following steps:

[0046] Step (1), training a device-position classification model $c_p$ and behavior classification models $ca_i$ for the different device positions, $ca_i \in C$, where $C$ is the set of behavior classification models, $C = \{ca_1, ca_2, \ldots, ca_I\}$. Each behavior classification model $ca_i$ corresponds one-to-one with a device position $p_i$, $p_i \in P$, where $P$ is the set of predefined device positions, $P = \{p_1, p_2, \ldots, p_I\}$, and $I$ is the number of predefined device-position categories;
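Read together, the one-to-one correspondence between $ca_i$ and $p_i$ suggests a two-stage scheme at recognition time: $c_p$ first predicts the device position, and that position selects which behavior model to apply. The Python sketch below illustrates that dispatch under this assumption. `TwoStageRecognizer` and the toy threshold models are hypothetical stand-ins, since the source does not specify the model types or features.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical model interface: any callable mapping a feature vector to a label.
Classifier = Callable[[Sequence[float]], str]


@dataclass
class TwoStageRecognizer:
    position_model: Classifier              # plays the role of c_p
    behavior_models: Dict[str, Classifier]  # maps each position p_i to its model ca_i

    def recognize(self, features: Sequence[float]) -> Tuple[str, str]:
        """Predict the device position, then the behavior with that position's model."""
        position = self.position_model(features)
        if position not in self.behavior_models:
            raise KeyError(f"no behavior model for position {position!r}")
        behavior = self.behavior_models[position](features)
        return position, behavior


def toy_position_model(x: Sequence[float]) -> str:
    # Hypothetical rule standing in for a trained c_p.
    return "pocket" if x[0] > 5.0 else "hand"


recognizer = TwoStageRecognizer(
    position_model=toy_position_model,
    behavior_models={
        # Hypothetical rules standing in for trained ca_i models.
        "pocket": lambda x: "walking" if x[1] > 1.0 else "still",
        "hand": lambda x: "walking" if x[1] > 0.5 else "still",
    },
)
print(recognizer.recognize([6.0, 1.5]))  # ('pocket', 'walking')
```

Keeping the behavior models in a dictionary keyed by position makes the mapping between $p_i$ and $ca_i$ explicit; supporting another position would only require training and registering one more model.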

[0047] Step (2), collecting raw sensor data in real time through the built-in sensor of the mobile device;
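Real-time sensor collection is commonly paired with segmentation of the stream into fixed-length, overlapping windows before classification. The source does not state a window length or overlap, so the sketch below assumes a hypothetical `SlidingWindowCollector` that buffers tri-axial readings and emits a window every `step` samples once `window_size` samples are available (for example, 50% overlap when `step` is half of `window_size`).

```python
from collections import deque
from typing import Deque, List, Optional, Tuple

Sample = Tuple[float, float, float]  # one tri-axial reading (x, y, z)


class SlidingWindowCollector:
    """Buffers streaming samples and emits fixed-length, overlapping windows."""

    def __init__(self, window_size: int = 128, step: int = 64) -> None:
        if not 0 < step <= window_size:
            raise ValueError("step must satisfy 0 < step <= window_size")
        self.window_size = window_size
        self.step = step
        # Oldest samples fall off automatically once the buffer is full.
        self._buffer: Deque[Sample] = deque(maxlen=window_size)
        self._since_last_emit = 0

    def add(self, sample: Sample) -> Optional[List[Sample]]:
        """Append one sample; return a full window when one is ready, else None."""
        self._buffer.append(sample)
        self._since_last_emit += 1
        if len(self._buffer) == self.window_size and self._since_last_emit >= self.step:
            self._since_last_emit = 0
            return list(self._buffer)
        return None


collector = SlidingWindowCollector(window_size=4, step=2)
windows = []
for i in range(8):
    window = collector.add((float(i), 0.0, 9.8))
    if window is not None:
        windows.append(window)
# Three windows are emitted, starting at samples 0, 2 and 4.
```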

[0048] Step (3), preprocessing the data collected in real time by the built-in sensor of the mobile device to obtain the data s...
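Because the preprocessing details in [0048] are cut off, the following is only an illustration of one commonly used choice, not the method described here: each axis of a window is smoothed with a causal moving average, and the acceleration magnitude is appended as an extra channel. `moving_average`, `preprocess_window`, and the default `k = 3` are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # one tri-axial reading (x, y, z)


def moving_average(values: Sequence[float], k: int = 3) -> List[float]:
    """Causal moving average: each output averages up to the last k inputs."""
    if k < 1:
        raise ValueError("k must be >= 1")
    out: List[float] = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= k:
            running -= values[i - k]  # drop the sample that left the window
        out.append(running / min(i + 1, k))
    return out


def preprocess_window(window: Sequence[Sample], k: int = 3) -> List[List[float]]:
    """Smooth each axis, then append the acceleration magnitude as a 4th channel."""
    if not window:
        raise ValueError("window must not be empty")
    xs, ys, zs = (list(axis) for axis in zip(*window))
    xs, ys, zs = moving_average(xs, k), moving_average(ys, k), moving_average(zs, k)
    mag = [math.sqrt(x * x + y * y + z * z) for x, y, z in zip(xs, ys, zs)]
    return [xs, ys, zs, mag]


channels = preprocess_window([(3.0, 4.0, 0.0)] * 3)
print(channels[3])  # [5.0, 5.0, 5.0]
```

The magnitude channel does not depend on how the device is oriented, which is one reason it is often used when a device may be carried in different positions.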
