Word vector training method and system

The invention relates to word vector technology and provides a word vector training method and system. It addresses the low accuracy of existing word vector training and thereby improves training accuracy.


Embodiment 1

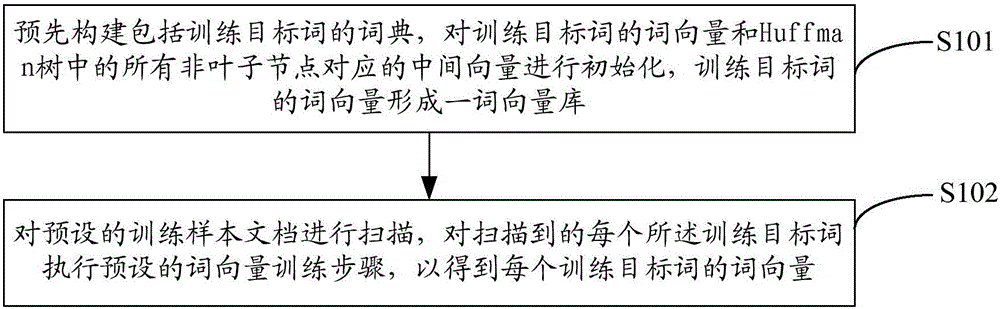

[0029] Figure 1 shows the implementation process of the word vector training method provided by the first embodiment of the present invention. For convenience of explanation, only the parts related to the embodiment of the present invention are shown. The details are as follows:

[0030] In step S101, a dictionary containing the training target words is constructed in advance, and the word vectors of the training target words and the intermediate vectors corresponding to all non-leaf nodes of the Huffman tree are initialized. The word vectors of the training target words together form a word vector library.
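The initialization in step S101 can be sketched as follows. This is a minimal illustration, not the patent's implementation: the function names `build_huffman_codes` and `init_vectors`, the vector dimension, and the choice of small random values for word vectors and zeros for intermediate vectors are all assumptions (the excerpt does not state the initial values).

```python
import heapq
import random

def build_huffman_codes(freqs):
    """Build a Huffman tree over word frequencies.

    Returns (codes, paths, n_internal): codes[w] lists the 0/1 branches from
    the root to the leaf of w, paths[w] lists the non-leaf node ids visited.
    """
    words = sorted(freqs)
    # Heap entries are (frequency, tie_breaker, node_id); leaves are 0..V-1.
    heap = [(freqs[w], i, i) for i, w in enumerate(words)]
    heapq.heapify(heap)
    parent, branch = {}, {}
    next_id = len(words)
    while len(heap) > 1:
        f1, _, a = heapq.heappop(heap)
        f2, _, b = heapq.heappop(heap)
        parent[a], branch[a] = next_id, 0
        parent[b], branch[b] = next_id, 1
        heapq.heappush(heap, (f1 + f2, next_id, next_id))
        next_id += 1
    codes, paths = {}, {}
    for i, w in enumerate(words):
        code, path, node = [], [], i
        while node in parent:
            code.append(branch[node])
            path.append(parent[node] - len(words))  # non-leaf ids start at 0
            node = parent[node]
        codes[w], paths[w] = code[::-1], path[::-1]
    return codes, paths, next_id - len(words)

def init_vectors(freqs, dim=4, seed=0):
    """Initialize the word vector library and one vector per non-leaf node."""
    rng = random.Random(seed)
    codes, paths, n_internal = build_huffman_codes(freqs)
    # Assumed initialization: small random word vectors, zero intermediate vectors.
    word_vectors = {w: [(rng.random() - 0.5) / dim for _ in range(dim)] for w in freqs}
    inner_vectors = [[0.0] * dim for _ in range(n_internal)]
    return word_vectors, inner_vectors, codes, paths
```

A dictionary of V words yields V - 1 non-leaf nodes, so the number of intermediate vectors grows linearly with the dictionary, while frequent words sit closer to the root and pass through fewer of them.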

[0031] In this embodiment of the present invention, a dictionary containing the training target words can be constructed in advance. Specifically, text of a given type or subject can be segmented into words, after which stop words and high- and low-frequency words are removed to build the corresponding dictionary. Preferably, the ICTCLAS2015 word segmentation system of the Chine...
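The dictionary construction described above (segmentation, stop word removal, frequency filtering) might look like the sketch below. The function name `build_dictionary`, the thresholds `min_count` and `max_count`, and the whitespace tokenizer are hypothetical stand-ins; in particular, `str.split` only takes the place of a real word segmenter and is not suitable for Chinese text.

```python
from collections import Counter

def build_dictionary(documents, stop_words, min_count=2, max_count=1000,
                     tokenize=str.split):
    """Return {word: count} for words that survive both filters.

    tokenize is a placeholder for a word segmentation system.
    """
    counts = Counter()
    for doc in documents:
        # Segment the text and drop stop words before counting.
        counts.update(t for t in tokenize(doc) if t not in stop_words)
    # Drop low-frequency (< min_count) and high-frequency (> max_count) words.
    return {w: c for w, c in counts.items() if min_count <= c <= max_count}
```

The resulting counts can double as the frequencies from which the Huffman tree is built.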

Embodiment 2

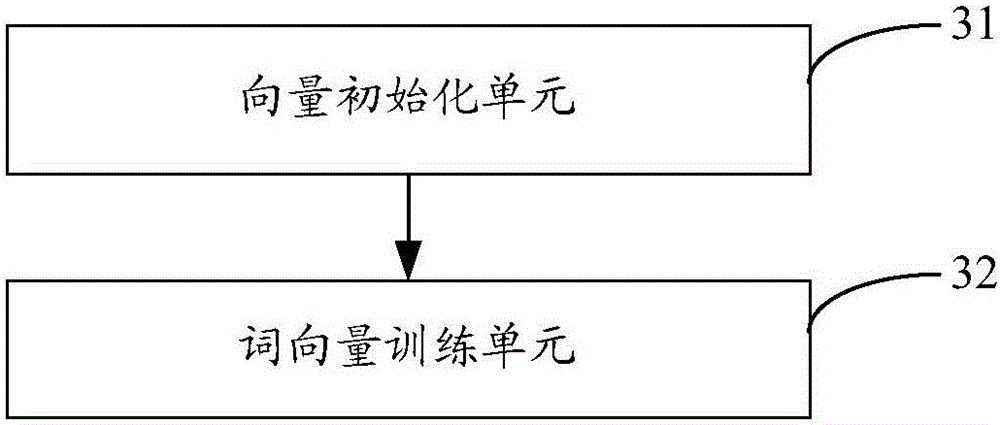

[0048] Figure 3 shows the structure of the word vector training system provided by Embodiment 2 of the present invention. For convenience of description, only the parts related to the embodiment of the present invention are shown, including:

[0049] A vector initialization unit 31, configured to construct in advance a dictionary containing the training target words and to initialize the word vectors of the training target words and the intermediate vectors corresponding to all non-leaf nodes of the Huffman tree, the word vectors of the training target words forming a word vector library; and

[0050] A word vector training unit 32, configured to scan a preset training sample document and perform a preset word vector training step on each scanned training target word to obtain a word vector for each training target word.
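The scanning behavior of the training unit can be outlined as below. This is only a structural sketch: the excerpt does not spell out the preset word vector training step, so it is passed in as a hypothetical callable `training_step`, and the name `scan_and_train` is likewise an assumption.

```python
def scan_and_train(sample_tokens, word_vectors, training_step):
    """Apply training_step to every scanned token that is a training target word.

    training_step(tokens, position, word_vectors) is a placeholder for the
    preset word vector training step and is expected to update word_vectors.
    """
    for position, token in enumerate(sample_tokens):
        if token in word_vectors:  # skip words outside the dictionary
            training_step(sample_tokens, position, word_vectors)
    return word_vectors
```

Keeping the step as a parameter mirrors the split between the initialization unit, which prepares the vectors, and the training unit, which only drives the scan.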

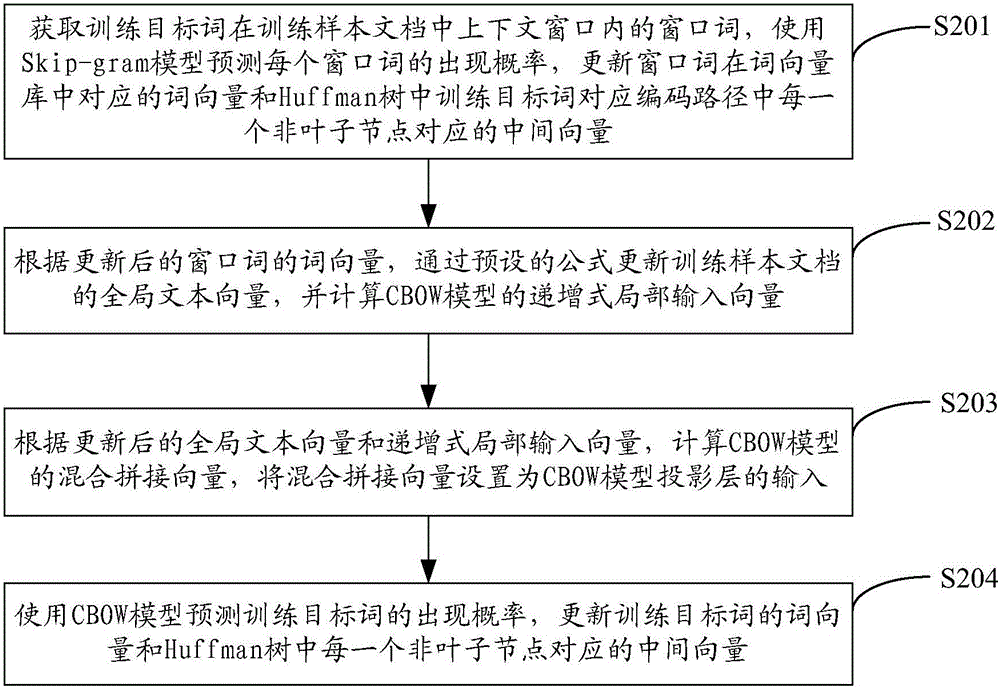

[0051] Preferably, as shown in Figure 4, the word vector training unit 32 of the word vector training system provided by the embodiment of the present invention in...
