Lip-reading recognition method and mobile terminal

This technology concerns a mobile terminal and a recognition method, applied in speech recognition, neural learning methods, character and pattern recognition, etc. It addresses situations in which answering a call aloud is unsuitable, fails to protect the user's privacy, or disturbs the normal activities of surrounding people. Its stated effects are improved training accuracy, shorter training time, and reduced impact on surrounding people.

Examples

Embodiment 1

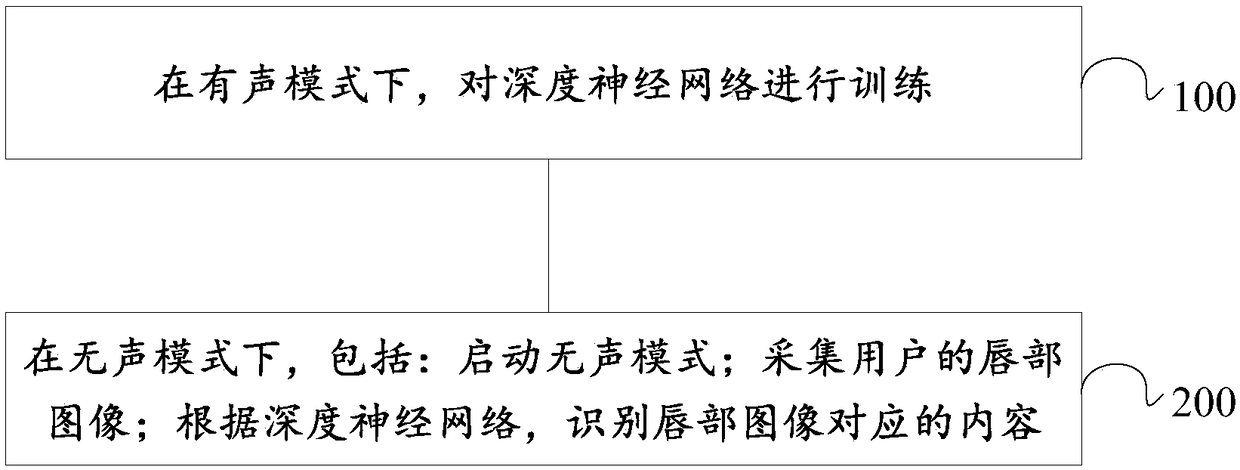

[0055] Figure 1 is a flowchart of the lip-reading recognition method provided by an embodiment of the present invention. As shown in Figure 1, the method is applied in a mobile terminal in which a voiced mode and a silent mode are set, and it specifically includes the following steps:

[0056] Step 100: in the voiced mode, train the deep neural network.

[0057] Specifically, the voiced mode refers to the state in which the user is making a voice call.

[0058] As a first alternative, step 100 includes: collecting lip images for training and the corresponding voice data; obtaining the corresponding image data from the lip images for training; and training the deep neural network based on the image data and the voice data.
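The data flow of this first alternative can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the patented implementation: the names `image_to_data`, `TrainingSet`, and `NearestNeighbourStandIn` are hypothetical, the voice data is represented as a plain text label (the source does not say how voice data is encoded), and a nearest-neighbour lookup stands in for the deep neural network so that the example runs with the standard library alone.

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

# Hypothetical representation: a grayscale lip image as rows of 0-255 pixels.
Frame = Sequence[Sequence[int]]


def image_to_data(frame: Frame) -> List[float]:
    """Turn a lip image into image data (assumed: flatten and scale to 0..1)."""
    return [pixel / 255.0 for row in frame for pixel in row]


@dataclass
class TrainingSet:
    """Paired samples collected while the terminal is in voiced mode."""
    pairs: List[Tuple[List[float], str]] = field(default_factory=list)

    def collect(self, frame: Frame, voice_data: str) -> None:
        # Store the image data together with the voice data captured with it.
        self.pairs.append((image_to_data(frame), voice_data))


class NearestNeighbourStandIn:
    """Placeholder for the deep neural network; this is NOT a neural network."""

    def __init__(self) -> None:
        self._memory: List[Tuple[List[float], str]] = []

    def train(self, training_set: TrainingSet) -> None:
        # "Training" here just memorises the image-data / voice-data pairs.
        self._memory = list(training_set.pairs)

    def predict(self, frame: Frame) -> str:
        if not self._memory:
            raise ValueError("the model has not been trained yet")
        query = image_to_data(frame)

        def squared_distance(sample: Tuple[List[float], str]) -> float:
            return sum((a - b) ** 2 for a, b in zip(query, sample[0]))

        # Return the voice data of the closest stored lip image.
        return min(self._memory, key=squared_distance)[1]


# Usage: collect two labelled frames in voiced mode, then train.
training_set = TrainingSet()
training_set.collect([[0, 0], [255, 255]], "ah")  # open-mouth frame (made up)
training_set.collect([[0, 0], [0, 0]], "mm")      # closed-mouth frame (made up)
model = NearestNeighbourStandIn()
model.train(training_set)
```

In a real system the stand-in would be replaced by an actual deep neural network, and the pixel values and labels above are invented purely for illustration.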

[0059] As a second alternative, step 100 includes: collecting lip images for training and the corresponding voice data; obtaining the corresponding image data according...

Embodiment 2

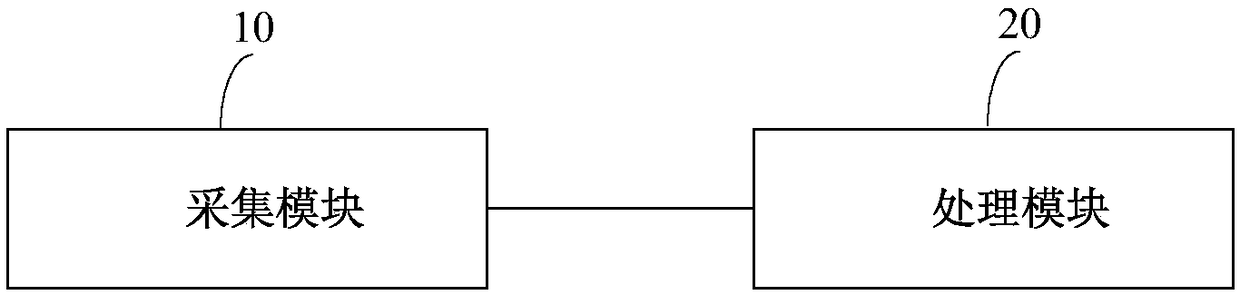

[0078] Based on the inventive concept of the foregoing embodiment, Figure 2 is a schematic structural diagram of a mobile terminal provided by an embodiment of the present invention. As shown in Figure 2, the mobile terminal is provided with a voiced mode and a silent mode, and includes an acquisition module 10 and a processing module 20.

[0079] Specifically, in the silent mode, the acquisition module 10 is configured to collect the user's lip image; the processing module 20 is communicatively connected to the acquisition module 10 and is configured to recognize the content corresponding to the lip image using the deep neural network.

[0080] The deep neural network is established in the voiced mode.
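The relationship between the two modules and the two modes might be modelled roughly as follows. This is a hedged sketch rather than the structure shown in Figure 2: the class and method names (`AcquisitionModule`, `ProcessingModule`, `MobileTerminal`, `establish_network`, `recognize_silently`) are hypothetical, the camera and the network are passed in as plain callables, and the only behaviour taken from the text is that the network is established in voiced mode and used for recognition in silent mode.

```python
from enum import Enum
from typing import Callable, List, Optional

# Hypothetical representation of a lip image as rows of pixel values.
LipImage = List[List[int]]
Network = Callable[[LipImage], str]


class Mode(Enum):
    VOICED = "voiced"
    SILENT = "silent"


class AcquisitionModule:
    """Counterpart of module 10: collects the user's lip image."""

    def __init__(self, camera: Callable[[], LipImage]) -> None:
        self._camera = camera  # assumed: any callable that returns one frame

    def collect_lip_image(self) -> LipImage:
        return self._camera()


class ProcessingModule:
    """Counterpart of module 20: recognizes content from a lip image."""

    def __init__(self) -> None:
        self._network: Optional[Network] = None

    def set_network(self, network: Network) -> None:
        self._network = network

    def recognize(self, lip_image: LipImage) -> str:
        if self._network is None:
            raise RuntimeError("no network has been established yet")
        return self._network(lip_image)


class MobileTerminal:
    def __init__(self, acquisition: AcquisitionModule,
                 processing: ProcessingModule) -> None:
        self.mode = Mode.VOICED
        self.acquisition = acquisition
        self.processing = processing

    def establish_network(self, network: Network) -> None:
        # The network is established only while in voiced mode.
        if self.mode is not Mode.VOICED:
            raise RuntimeError("the network is established in voiced mode")
        self.processing.set_network(network)

    def recognize_silently(self) -> str:
        # Recognition from lip images happens only in silent mode.
        if self.mode is not Mode.SILENT:
            raise RuntimeError("lip recognition runs in silent mode")
        return self.processing.recognize(self.acquisition.collect_lip_image())


# Usage with a fake camera and a fake network (both invented for the example).
terminal = MobileTerminal(AcquisitionModule(lambda: [[1, 2], [3, 4]]),
                          ProcessingModule())
terminal.establish_network(lambda image: "hello")
terminal.mode = Mode.SILENT
recognized = terminal.recognize_silently()
```

Passing the camera and network in as callables keeps the sketch independent of any particular camera API or neural network library.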

[0081] It should be noted that the silent mode is started by a lip-reading recognition start command input by the user, such as clicking a preset virtual button on the ...
