Method and device for human target recognition

This human body target recognition technology, applied in the field of target recognition, addresses the problems of cumbersome maintenance and debugging, failure to meet real-time application requirements, and insufficient accuracy. It achieves good uniqueness and spatial invariance, simplifies the detection and recognition process, and strengthens self-adaptation.

Examples

Example 1

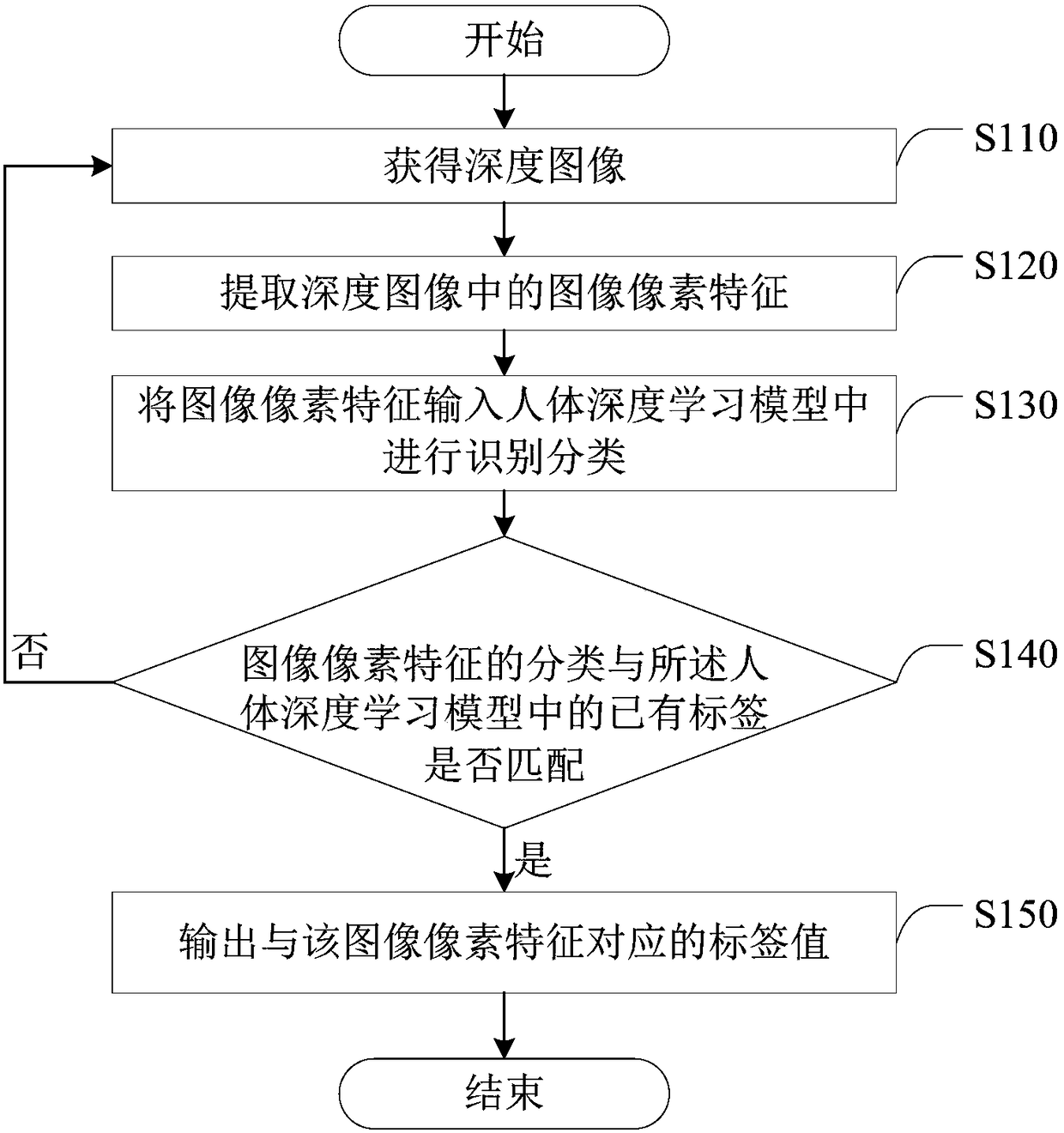

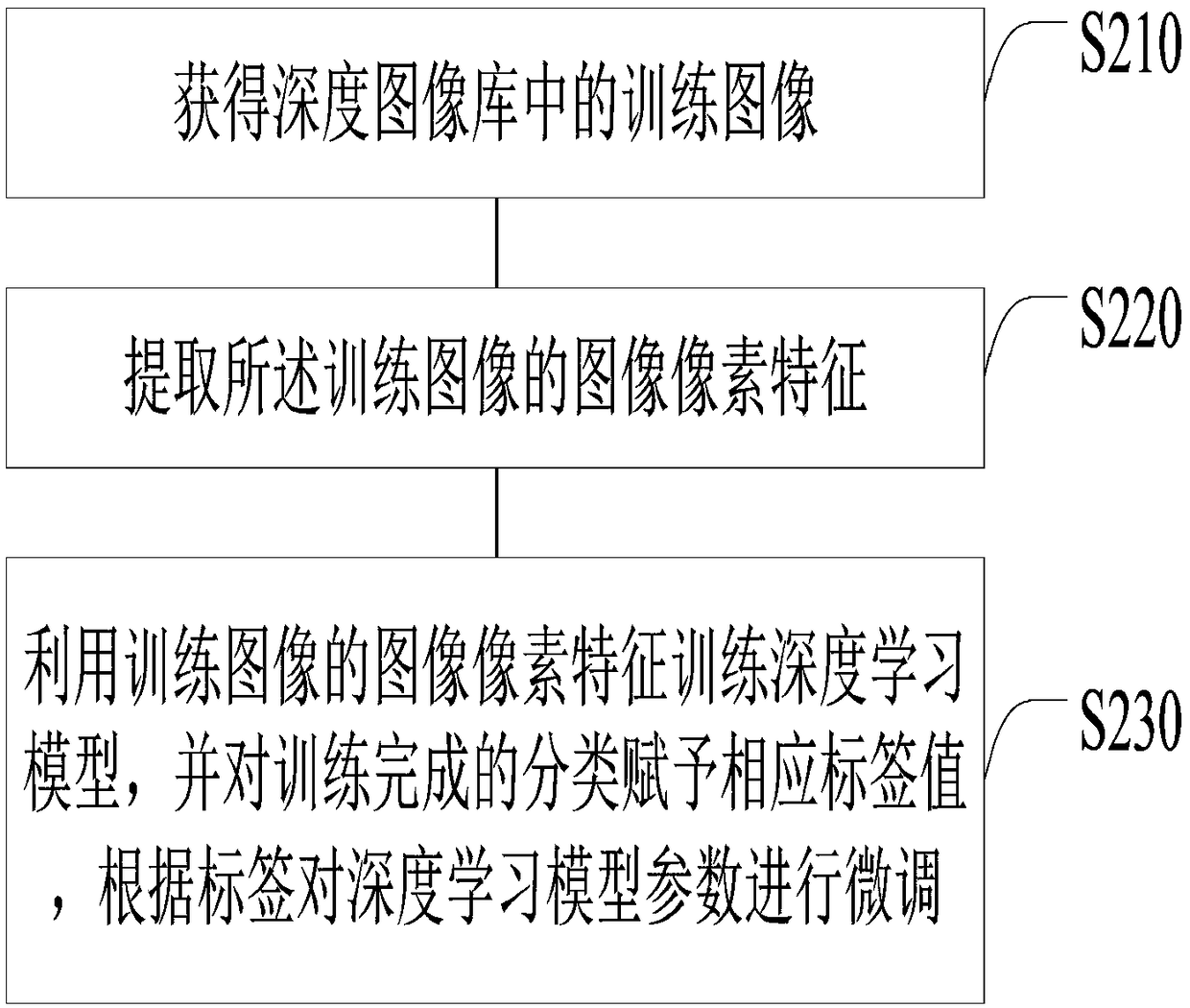

[0058] Referring to Figure 1, which is a flow chart of a method for human body target recognition provided by this embodiment, the method includes:

[0059] Step S110, obtaining a depth image.

[0060] In this embodiment, the depth image is obtained by a depth sensor, wherein the depth image includes a depth value of each pixel obtained by the depth sensor.

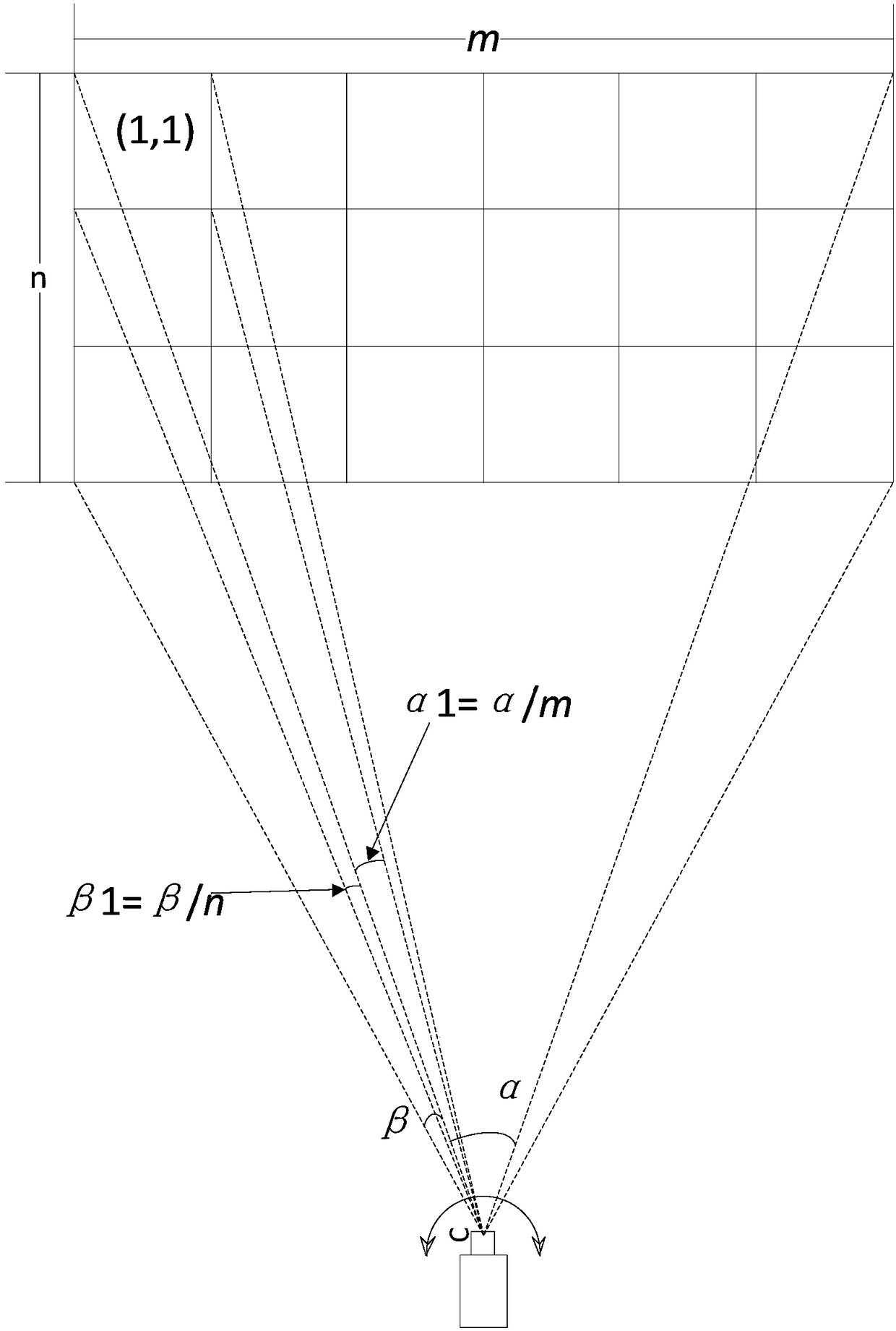

[0061] Referring to Figure 2, assume that the field angle of the depth sensor in this embodiment is (α, β) and that the resolution of the obtained depth image is (m, n). A coordinate system is established on the depth image in units of pixels, and the depth value of the pixel p = (x, y) is denoted D(x, y).
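The notation above can be illustrated with a minimal sketch. The class name `DepthImage`, the row-major layout `values[y][x]`, the reading of m as width and n as height, and the uniform degrees-per-pixel estimate are all assumptions made for illustration; the source only defines the field angle (α, β), the resolution (m, n), and D(x, y).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class DepthImage:
    """Hypothetical container for one depth frame (not from the patent)."""
    values: List[List[float]]  # values[y][x]: n rows of m columns (assumed layout)
    alpha_deg: float           # horizontal field angle alpha, in degrees
    beta_deg: float            # vertical field angle beta, in degrees

    @property
    def resolution(self) -> Tuple[int, int]:
        """Return (m, n), read here as (columns, rows)."""
        n = len(self.values)
        m = len(self.values[0]) if n else 0
        return (m, n)

    def D(self, x: int, y: int) -> float:
        """Depth value of pixel p = (x, y)."""
        return self.values[y][x]

    def degrees_per_pixel(self) -> Tuple[float, float]:
        """Rough angular extent of one pixel, assuming uniform sampling."""
        m, n = self.resolution
        return (self.alpha_deg / m, self.beta_deg / n)


# A tiny 3 x 2 frame with made-up depth values.
frame = DepthImage(
    values=[[1200.0, 1210.0, 1225.0],
            [1190.0, 1205.0, 0.0]],
    alpha_deg=60.0,
    beta_deg=40.0,
)
print(frame.resolution)   # (3, 2)
print(frame.D(2, 0))      # 1225.0
```

Keeping the field angle alongside the pixel grid makes it possible to relate pixel offsets to viewing angles later, if a feature needs that.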

[0062] Step S120, extracting image pixel features in the depth image.

[0063] The image pixel features to be extracted may include: depth gradient direction histogram features, local simplified ternary pattern features, depth value statistical distribution features, and depth difference features be...
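As a rough illustration of the first feature type named above, the following sketch computes a magnitude-weighted histogram of depth gradient directions in a small window around a pixel. The exact definition used in the patent is not available in this excerpt, so the central-difference gradient, the window `radius`, the number of `bins`, and the function name `depth_gradient_histogram` are all assumptions, in the spirit of a generic HOG-style descriptor applied to depth values.

```python
import math
from typing import List


def depth_gradient_histogram(depth: List[List[float]], cx: int, cy: int,
                             radius: int = 1, bins: int = 8) -> List[float]:
    """Normalized, magnitude-weighted histogram of gradient directions
    in a (2*radius+1)-wide window around pixel (cx, cy).

    depth is indexed as depth[y][x]; border pixels are skipped because
    central differences need both neighbours.
    """
    n = len(depth)
    m = len(depth[0])
    hist = [0.0] * bins
    for y in range(max(1, cy - radius), min(n - 1, cy + radius + 1)):
        for x in range(max(1, cx - radius), min(m - 1, cx + radius + 1)):
            gx = (depth[y][x + 1] - depth[y][x - 1]) / 2.0
            gy = (depth[y + 1][x] - depth[y - 1][x]) / 2.0
            mag = math.hypot(gx, gy)
            if mag == 0.0:
                continue  # flat region: no direction to vote for
            angle = math.atan2(gy, gx) % (2 * math.pi)
            b = int(angle / (2 * math.pi) * bins) % bins
            hist[b] += mag
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist


# A 5 x 5 depth ramp that increases along x: every gradient points along +x.
ramp_x = [[10.0 * x for x in range(5)] for _ in range(5)]
hist_x = depth_gradient_histogram(ramp_x, cx=2, cy=2)

# A perfectly flat patch has no gradient, so the histogram stays empty.
flat = [[500.0] * 5 for _ in range(5)]
hist_flat = depth_gradient_histogram(flat, cx=2, cy=2)
```

For the x-ramp, all weight falls in the first bin; for the flat patch, every bin is zero. A real implementation would also need a policy for invalid depth readings, which this sketch does not address.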

Example 2

[0103] Referring to Figure 7, the human target recognition device 10 provided in this embodiment includes:

[0104] A first acquisition module 110, configured to acquire a depth image;

[0105] A first feature extraction module 120, configured to extract image pixel features in the depth image;

[0106] A human body deep learning module 130, configured to identify and classify the input image pixel features;

[0107] A judging module 140, configured to judge whether the classification of the image pixel features matches the existing human body part labels in the human body deep learning model;

[0108] An output module 150, configured to output the label corresponding to the pixel feature when the classification of the image pixel feature matches an existing label in the human body deep learning model.
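The data flow between modules 110 to 150 can be sketched as follows. This is only an illustration of how the modules might be wired together: the class `HumanTargetRecognizer`, its method names, and the toy stand-in functions are hypothetical, and the threshold-based `toy_classify` merely takes the place of the human body deep learning model, whose structure is not reproduced here.

```python
from typing import Callable, List, Optional, Sequence, Set

Depth = List[List[float]]
Features = Sequence[float]


class HumanTargetRecognizer:
    """Hypothetical wiring of modules 110-150 (names are illustrative)."""

    def __init__(self,
                 acquire: Callable[[], Depth],
                 extract: Callable[[Depth], List[Features]],
                 classify: Callable[[Features], str],
                 known_labels: Set[str]) -> None:
        self.acquire = acquire            # first acquisition module 110
        self.extract = extract            # first feature extraction module 120
        self.classify = classify          # human body deep learning module 130
        self.known_labels = known_labels  # existing human body part labels

    def judge(self, label: str) -> bool:
        """Judging module 140: does the class match an existing label?"""
        return label in self.known_labels

    def run(self) -> List[Optional[str]]:
        """Output module 150: emit the label on a match, otherwise None."""
        depth = self.acquire()
        per_pixel_features = self.extract(depth)
        labels = [self.classify(f) for f in per_pixel_features]
        return [lab if self.judge(lab) else None for lab in labels]


# Toy stand-ins so the sketch runs end to end; none of this is the patent's model.
def toy_acquire() -> Depth:
    return [[1000.0, 1500.0], [3000.0, 0.0]]


def toy_extract(depth: Depth) -> List[Features]:
    return [[d] for row in depth for d in row]  # one 1-D feature per pixel


def toy_classify(features: Features) -> str:
    d = features[0]
    if d == 0.0:
        return "invalid"
    if d < 1200.0:
        return "head"
    if d < 2000.0:
        return "torso"
    return "background"


recognizer = HumanTargetRecognizer(toy_acquire, toy_extract, toy_classify,
                                   known_labels={"head", "torso"})
result = recognizer.run()
print(result)  # ['head', 'torso', None, None]
```

Passing each module in as a callable keeps the judging and output logic independent of how the depth image is acquired or how the classifier is trained.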

[0109] In this embodiment, the human body deep learning model takes the image pixel features as the input of its bottom input layer and performs regression clas...
