Image processing method and image processing device

An image processing technology, applied in the field of image processing, that addresses the problems of images being only vaguely visible and conveying little information, and achieves the effect of increasing the amount of information conveyed.

Embodiment Construction

[0038] To make the objectives, technical solutions, and advantages of the present invention clearer, embodiments of the invention are described in further detail below with reference to the accompanying drawings.

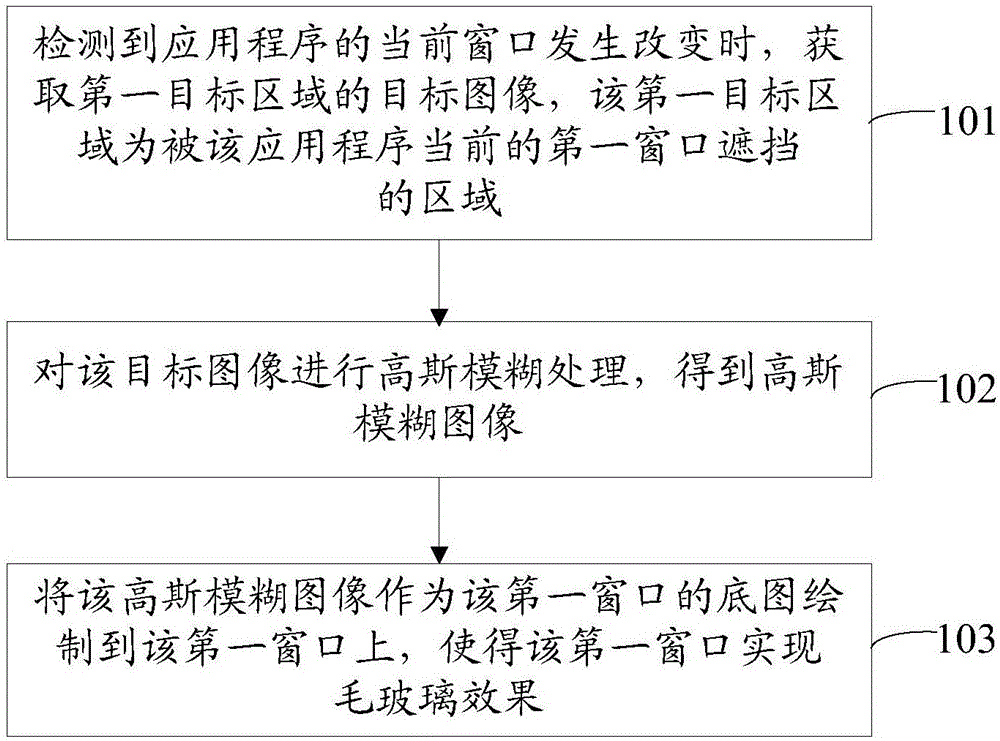

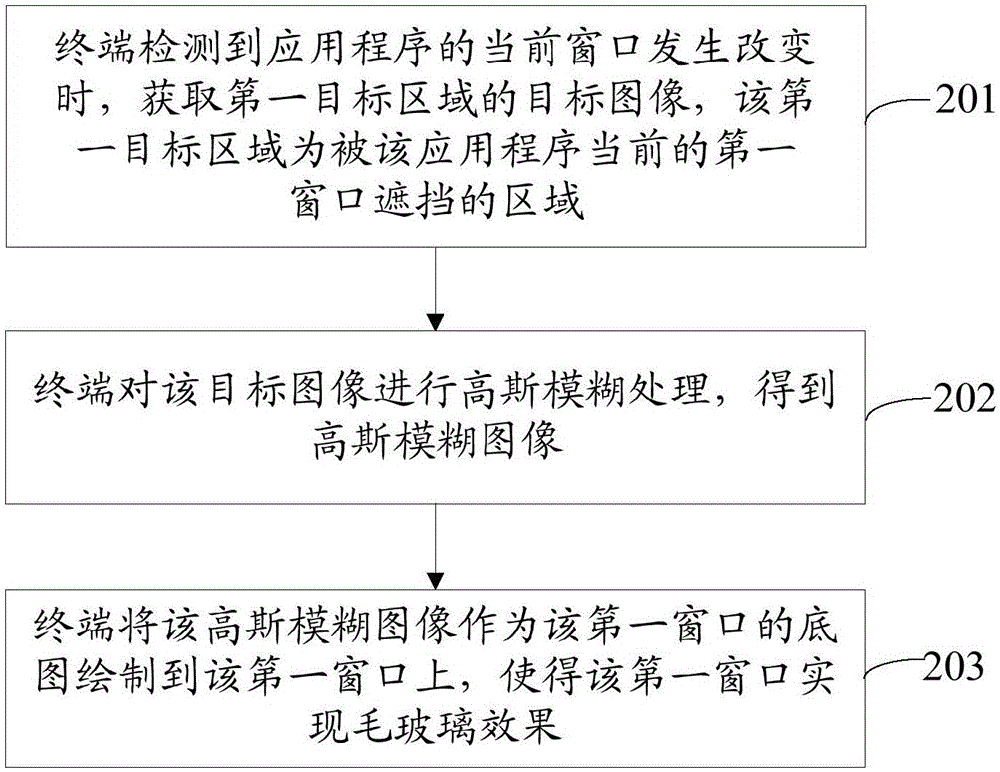

[0039] An embodiment of the present invention provides an image processing method. The method may be executed by a terminal running a designated system, where the designated system may be Windows XP, Windows 2003, or the like. Referring to Figure 1, the method includes:

[0040] Step 101: When it is detected that the current window of the application program has changed, acquire a target image of a first target area, where the first target area is an area blocked by the current first window of the application program.

[0041] Step 102: Perform Gaussian blur processing on the target image to obtain a Gaussian blurred image.

[0042] Step 103: Draw the Gaussian blurred image as the base map of the first window on the firs...
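Steps 101 and 102 can be sketched as follows. This is only an illustrative sketch under stated assumptions, not the implementation described in the patent: the source does not specify how the target image is captured or how the Gaussian blur is computed. Here the screen is modeled as a nested list of grayscale values, and the names `crop`, `on_window_changed`, `window_rect`, `radius`, and `sigma` are hypothetical. Step 103 (drawing the blurred image as the base map) is not sketched because the source text is truncated at that point.

```python
import math


def gaussian_kernel(radius, sigma):
    """Return a normalized 1-D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def _blur_rows(image, kernel):
    """Convolve every row with the kernel, clamping indices at the edges."""
    radius = len(kernel) // 2
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                sx = min(max(x + k - radius, 0), width - 1)
                acc += w * row[sx]
            new_row.append(acc)
        out.append(new_row)
    return out


def _transpose(image):
    """Swap rows and columns so the row pass can be reused for columns."""
    return [list(col) for col in zip(*image)]


def gaussian_blur(image, radius=2, sigma=1.0):
    """Step 102 (assumed form): separable Gaussian blur of a 2-D image."""
    kernel = gaussian_kernel(radius, sigma)
    horizontal = _blur_rows(image, kernel)
    vertical = _blur_rows(_transpose(horizontal), kernel)
    return _transpose(vertical)


def crop(screen, left, top, width, height):
    """Step 101 stand-in (hypothetical): copy the area the window covers."""
    return [row[left:left + width] for row in screen[top:top + height]]


def on_window_changed(screen, window_rect):
    """Hypothetical handler: acquire the target image, then blur it."""
    target = crop(screen, *window_rect)
    return gaussian_blur(target)
```

Calling `on_window_changed(screen, (left, top, width, height))` returns a blurred copy of the covered area with the same dimensions as the window rectangle. The blur is applied as two 1-D passes (rows, then columns), which gives the same result as a 2-D Gaussian kernel at lower cost. On an actual Windows terminal the capture and drawing steps would go through platform graphics APIs, which this sketch deliberately leaves out.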
