AR implementation method and system based on positioning of visual angle of observer

An AR implementation method and system that address the limited depth recognition, limited application range, and limited rendering capability of existing AR approaches. The stated effects are no restriction on the size or complexity of the real scene, a wide application range, and high rendering capability.

Embodiment Construction

[0043] To give a full understanding of the technical content of the present invention, its technical solutions are further described and illustrated below with reference to specific embodiments, but the invention is not limited to them.

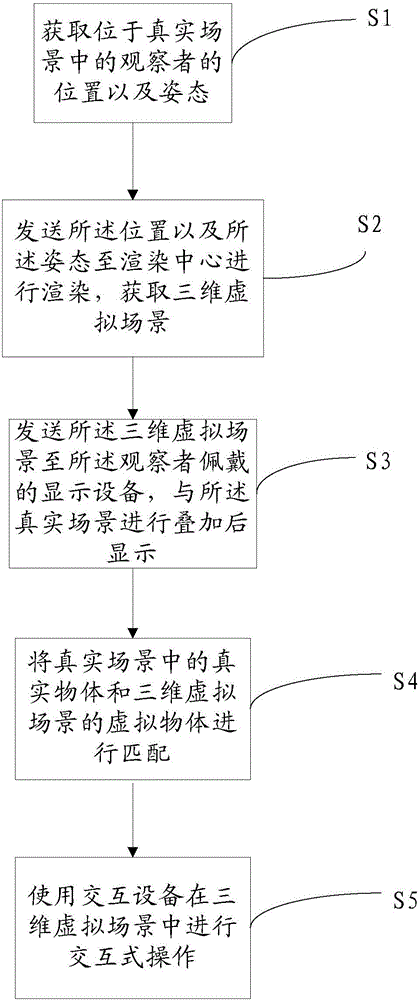

[0044] In the specific embodiment shown in Figures 1-6, the AR implementation method based on observer perspective positioning can be used in any scene. It places no restriction on the size or complexity of the real scene and offers high rendering capability, strong applicability, and a wide range of applications.

[0045] As shown in Figure 1, the AR implementation method based on observer perspective positioning includes:

[0046] S1. Obtain the position and posture of the observer in the real scene, and assign them to the virtual camera;

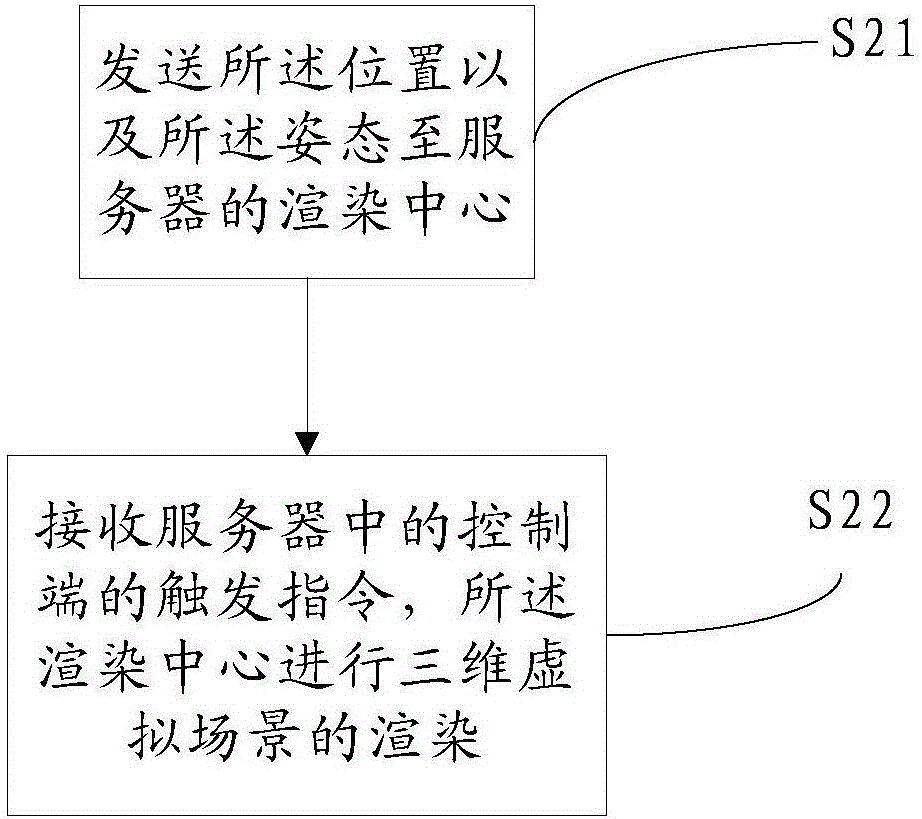

[0047] S2. Send the position and the posture to the rendering center for rendering, and obtain a three-dimensional virtual scene;

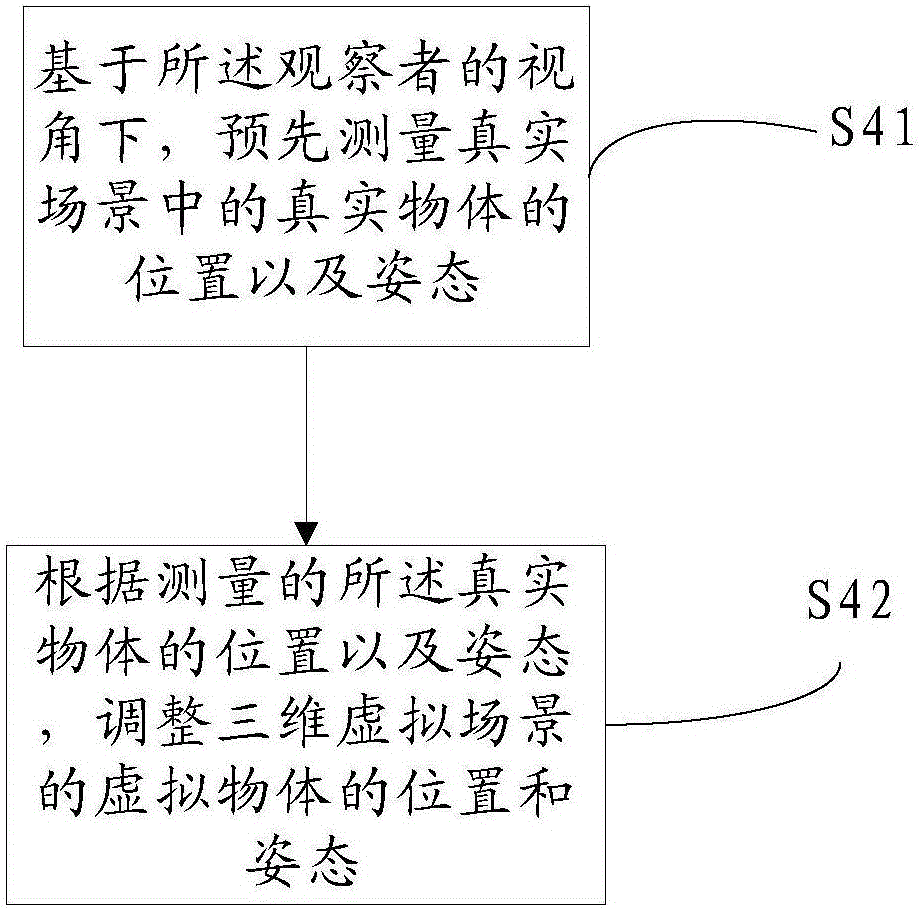

[0048] S3. Send the three-d...
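
Steps S1 and S2 can be illustrated with a minimal sketch. It assumes the observer's posture is given as a unit quaternion and the position as a 3D point in scene coordinates; the names `Pose`, `VirtualCamera`, `quat_to_matrix`, and `render_request` are hypothetical and do not come from the patent. The sketch only shows how a tracked pose might be copied onto a virtual camera, turned into a world-to-camera view matrix, and packaged as a message for a rendering center. The transport to the rendering center and the rendering itself are not specified here.

```python
import json
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Observer pose in the real scene (hypothetical representation)."""
    position: tuple      # (x, y, z) in scene coordinates
    orientation: tuple   # quaternion (w, x, y, z)


def quat_to_matrix(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n  # normalize defensively
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


class VirtualCamera:
    """Virtual camera that mirrors the observer's pose (assumed design)."""

    def __init__(self):
        self.pose = Pose((0.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0))

    def set_pose(self, pose):
        # S1: assign the observer's position and posture to the camera.
        self.pose = pose

    def view_matrix(self):
        # World-to-camera transform: rotation R^T, translation -R^T * t.
        r = quat_to_matrix(self.pose.orientation)
        t = self.pose.position
        rt = [[r[j][i] for j in range(3)] for i in range(3)]
        trans = [-sum(rt[i][k] * t[k] for k in range(3)) for i in range(3)]
        rows = [rt[i] + [trans[i]] for i in range(3)]
        return rows + [[0.0, 0.0, 0.0, 1.0]]


def render_request(camera):
    # S2: serialize position and posture for the rendering center.
    # The message layout is an assumption made for illustration.
    return json.dumps({
        "position": list(camera.pose.position),
        "orientation": list(camera.pose.orientation),
    })


if __name__ == "__main__":
    cam = VirtualCamera()
    cam.set_pose(Pose((1.0, 2.0, 3.0), (1.0, 0.0, 0.0, 0.0)))
    print(cam.view_matrix())
    print(render_request(cam))
```

Keeping the pose as a small value object separate from the camera means the same pose can be both applied locally and serialized for a remote renderer, which matches the split between S1 and S2.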
