Image captioning with weak supervision

Weakly supervised image captioning, applicable to image communication, still-image data retrieval, and still-image data indexing, addresses problems such as poor captioning performance, difficulty for users in searching for specific images, and neglect of image details.


Embodiment Construction

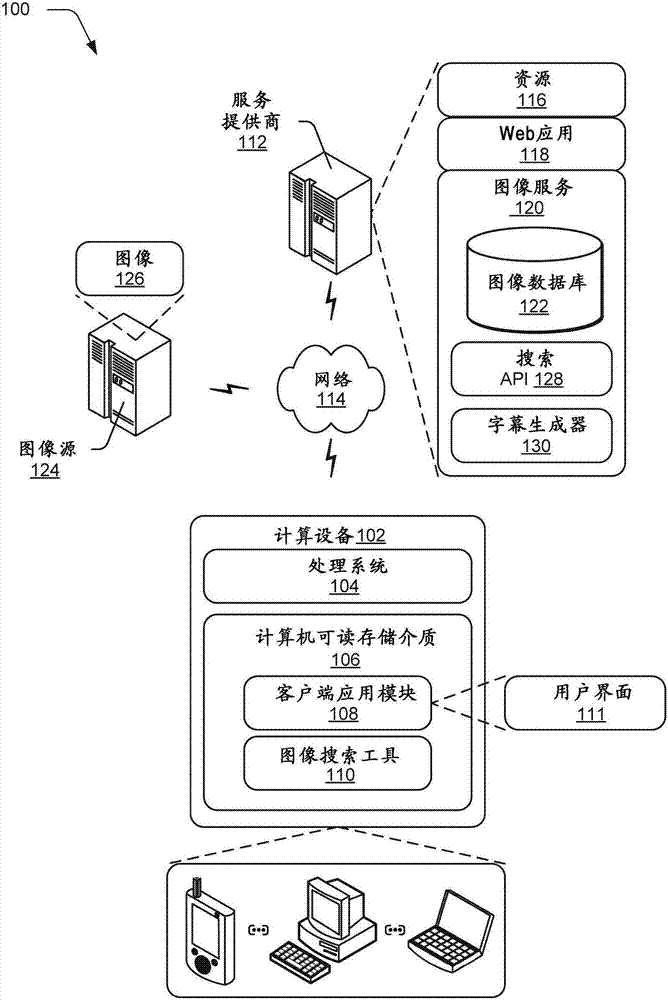

[0018] Overview

[0019] Traditional image processing techniques do not support high-precision natural language captioning and image search because of the limitations of traditional image tagging and search algorithms. These techniques only associate labels with images; they do not define the relationships among labels or between labels and the images themselves. Alternatively, traditional techniques may use a top-down approach in which the overall "gist" of an image is captured first and then refined into appropriate descriptive words and captions through language modeling and sentence generation. However, this top-down approach does not capture fine details of an image well, such as the local objects, attributes, and regions that contribute to an accurate description of the image.
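The limitation of label-only association can be illustrated with a minimal sketch. Everything in it is hypothetical and chosen for illustration only (the `TAG_INDEX` contents, the file names, and the helpers `tokenize` and `search_by_tags`); it is not taken from the patent. It shows that when images carry only flat label sets, two queries that describe opposite relationships between the same objects cannot be told apart.

```python
# Hypothetical tag index: each image is associated with a flat set of labels.
# No relationship between labels (who is chasing whom) is recorded.
TAG_INDEX = {
    "img_a.png": {"dog", "cat", "chasing"},  # depicts a dog chasing a cat
    "img_b.png": {"dog", "cat", "chasing"},  # depicts a cat chasing a dog
    "img_c.png": {"dog", "ball"},
}

STOP_WORDS = {"a", "the"}


def tokenize(query):
    """Lowercase the query and drop a few stop words (illustrative only)."""
    return [w for w in query.lower().split() if w not in STOP_WORDS]


def search_by_tags(index, query_words):
    """Return the images whose label set contains every query word."""
    wanted = set(query_words)
    return sorted(name for name, tags in index.items() if wanted <= tags)


hits_1 = search_by_tags(TAG_INDEX, tokenize("a dog chasing a cat"))
hits_2 = search_by_tags(TAG_INDEX, tokenize("a cat chasing a dog"))

# Both queries reduce to the same word set, so the results are identical.
print(hits_1)
print(hits_2)
```

Because word order and the relationships between labels are discarded, both queries match the same images. Under the assumptions of this sketch, that is the kind of imprecision the paragraph above attributes to tag-based search.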

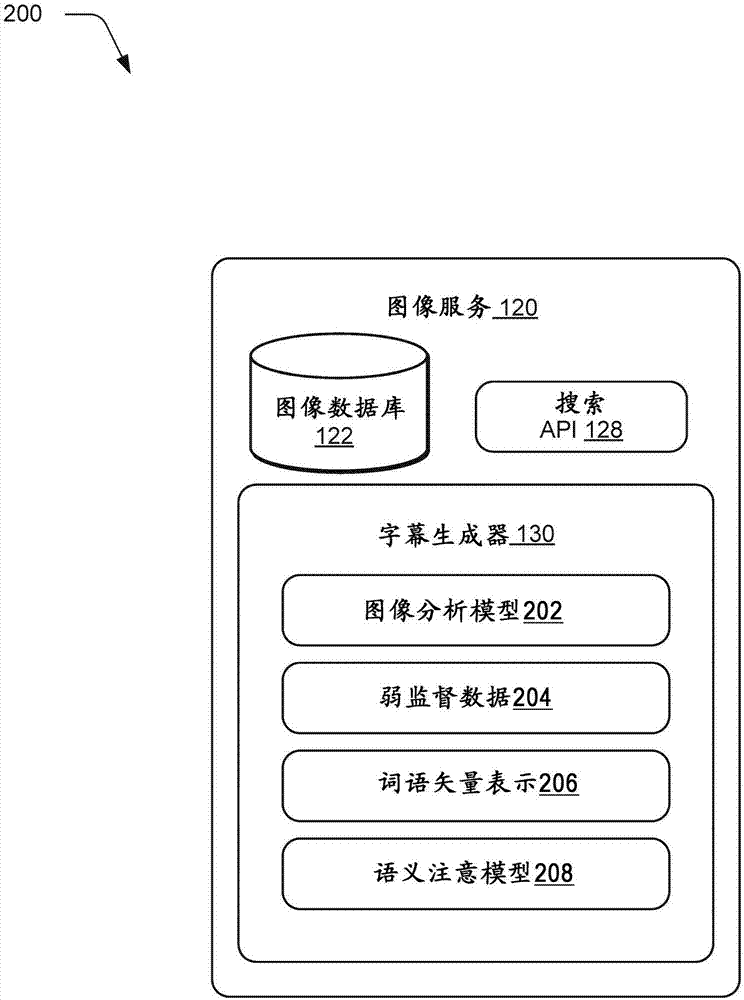

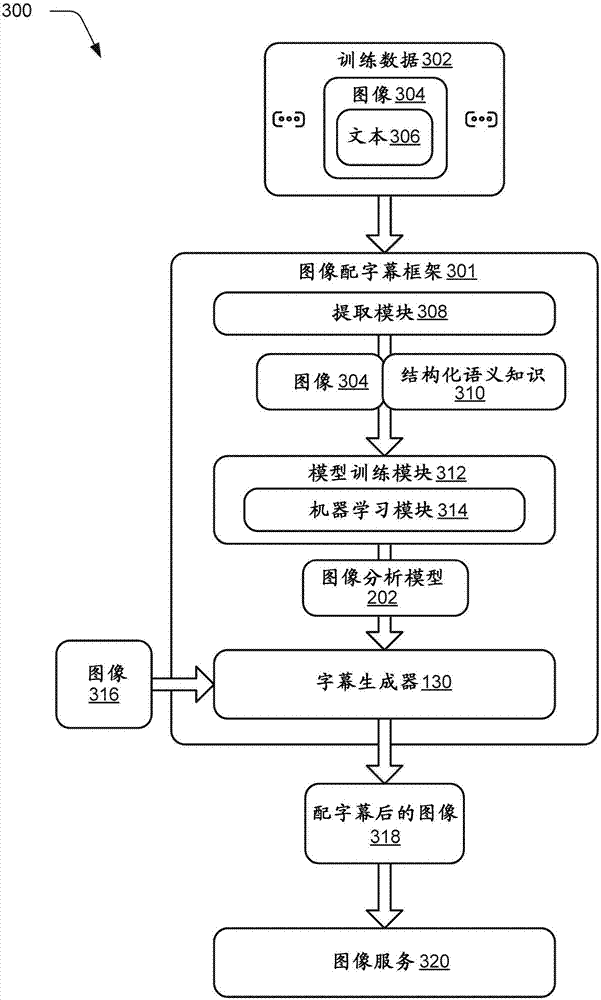

[0020] This document describes techniques for captioning images with weak supervision. In one or more implementations, weakly supervised data about the target image is ac...
