Scene segmentation correction method and system fusing global information

A scene segmentation correction technique that fuses global information, applied in the fields of machine learning and computer vision, addresses problems such as discontinuous, incoherent, and inconsistent segmentation results.

Embodiment Construction

[0036] To make the purpose, technical solution, and advantages of the present invention clearer, the global residual correction network proposed by the present invention is described in further detail below with reference to the accompanying drawings. It should be understood that the specific embodiments described here serve only to explain the present invention and are not intended to limit it.

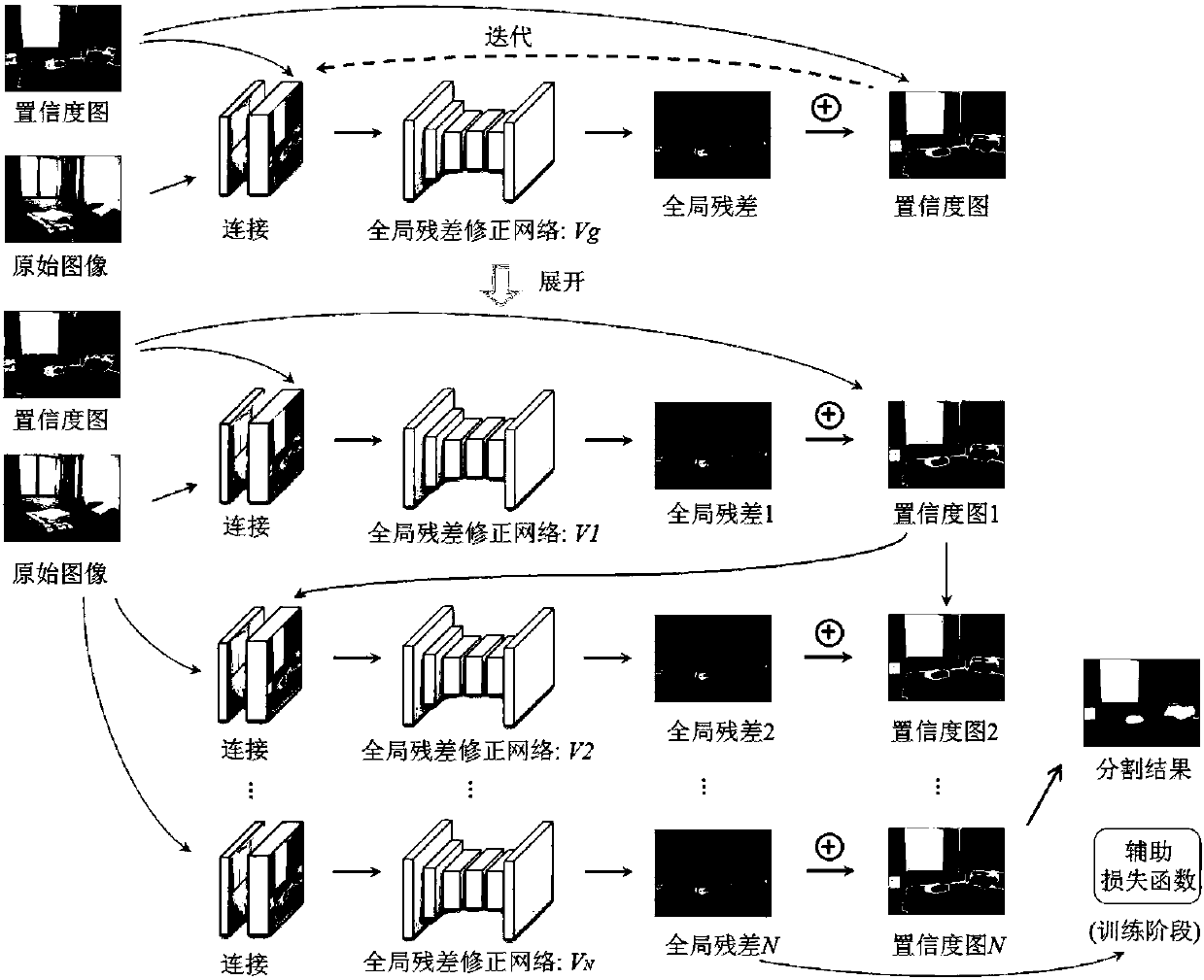

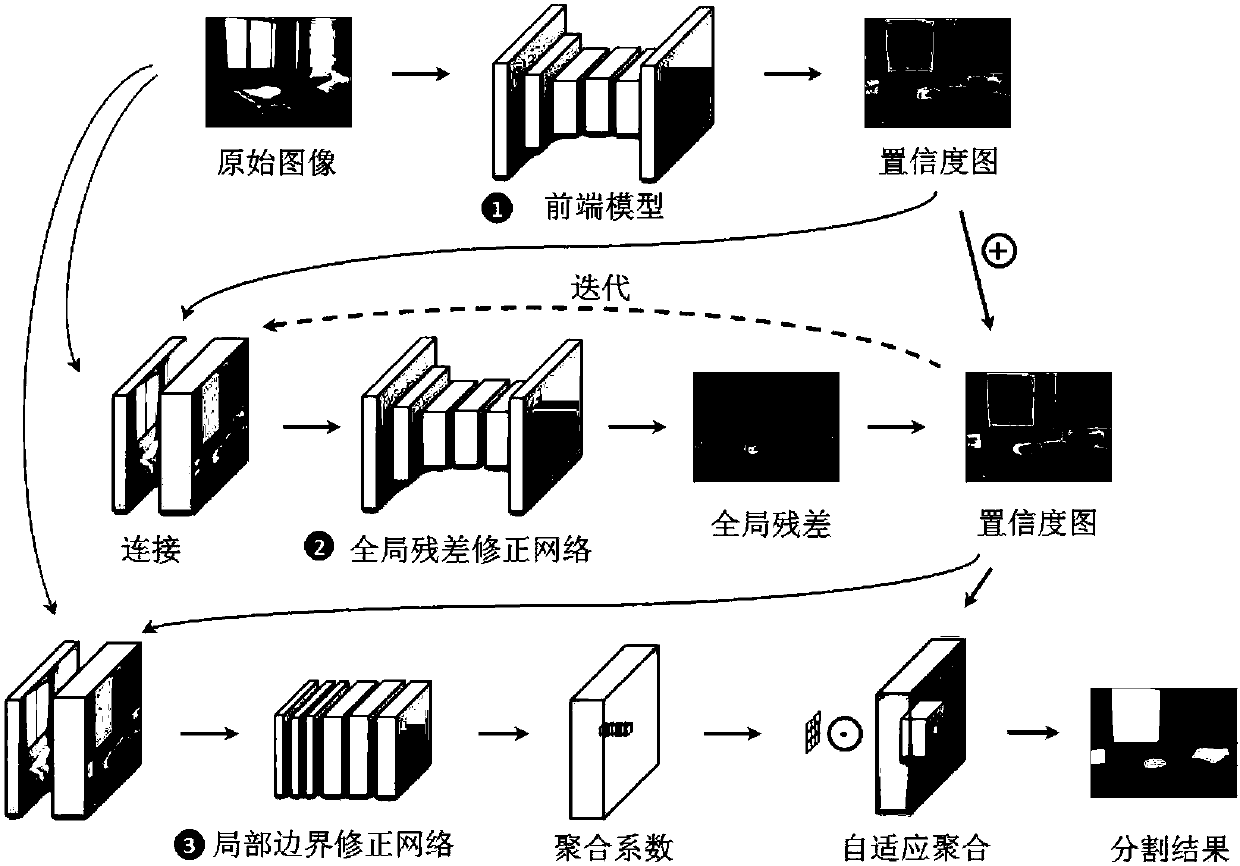

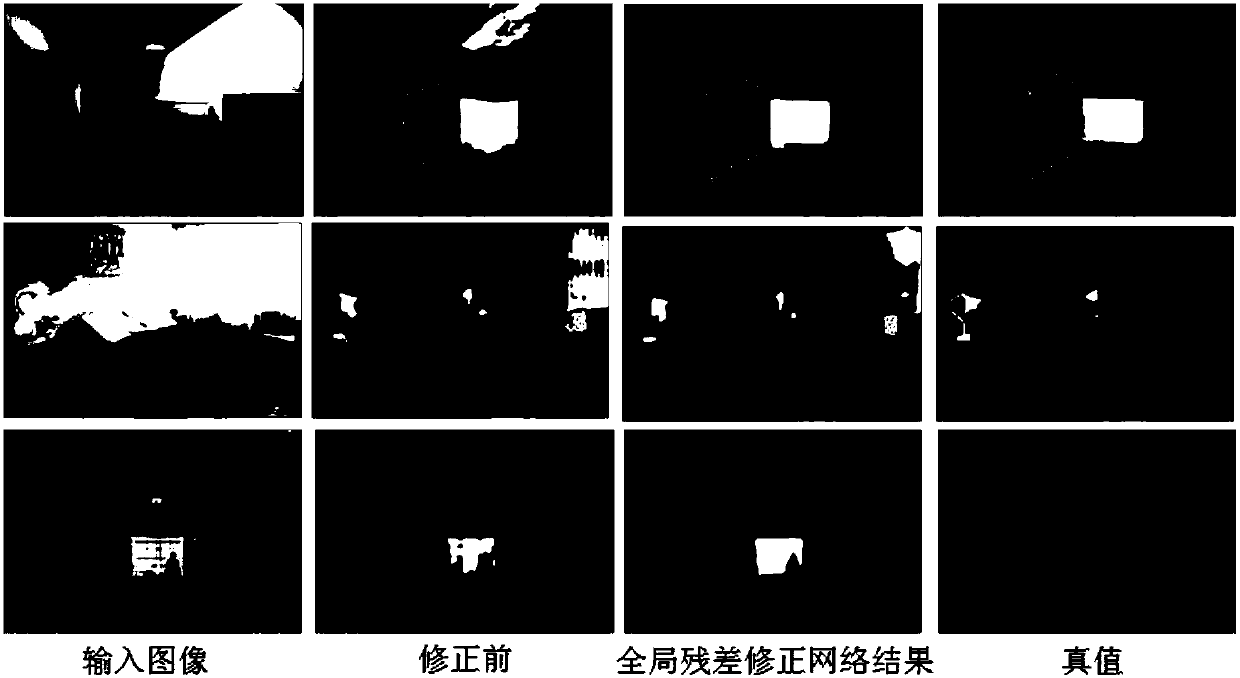

[0037] To make better use of the global residual correction network proposed by the present invention, the present invention adopts a cascade framework to correct the segmentation results of the front-end network. The framework consists of three parts: (1) a currently popular full residual convolutional network, used as the front-end model; (2) the global residual correction network, which uses global content information for correction; (3) the local boundary correction network, which makes local corrections to the segment...
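The three-part cascade described above can be illustrated with a minimal sketch. It is not the patented networks: both correction stages are hypothetical hand-written stand-ins, assumed here only to show how a front-end score map might pass through a global stage and then a local stage. The function names, the `weight` parameters, and the toy score values are all assumptions introduced for illustration.

```python
from typing import Callable, List

# scores[c][y][x]: per-class score maps, as a front-end model might output them.
Scores = List[List[List[float]]]


def global_residual_correction(scores: Scores, weight: float = 0.5) -> Scores:
    """Hypothetical stage 2: add a residual derived from image-wide context.

    Assumption: the global context of a class is its mean score over the image.
    """
    out = []
    for cls_map in scores:
        pixels = [v for row in cls_map for v in row]
        context = sum(pixels) / len(pixels)
        out.append([[v + weight * context for v in row] for row in cls_map])
    return out


def local_boundary_correction(scores: Scores, weight: float = 0.5) -> Scores:
    """Hypothetical stage 3: pull each score toward its 4-neighbour mean."""
    out = []
    for cls_map in scores:
        h, w = len(cls_map), len(cls_map[0])
        new_map = []
        for y in range(h):
            new_row = []
            for x in range(w):
                nbrs = [cls_map[j][i]
                        for j, i in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                        if 0 <= j < h and 0 <= i < w]
                local_mean = sum(nbrs) / len(nbrs)
                new_row.append(cls_map[y][x] + weight * (local_mean - cls_map[y][x]))
            new_map.append(new_row)
        out.append(new_map)
    return out


def cascade(front_end_scores: Scores,
            stages: List[Callable[[Scores], Scores]]) -> Scores:
    """Apply the correction stages in order to the front-end output."""
    scores = front_end_scores
    for stage in stages:
        scores = stage(scores)
    return scores


def argmax_labels(scores: Scores) -> List[List[int]]:
    """Pick the highest-scoring class at every pixel."""
    h, w = len(scores[0]), len(scores[0][0])
    return [[max(range(len(scores)), key=lambda c: scores[c][y][x])
             for x in range(w)] for y in range(h)]


# Toy front-end output: two classes on a 3x3 image, with one inconsistent
# pixel in the centre that the front end assigns to class 1.
front_end_scores = [
    [[0.8, 0.8, 0.8], [0.8, 0.4, 0.8], [0.8, 0.8, 0.8]],  # class 0
    [[0.2, 0.2, 0.2], [0.2, 0.6, 0.2], [0.2, 0.2, 0.2]],  # class 1
]
corrected = cascade(front_end_scores,
                    [global_residual_correction, local_boundary_correction])
```

In this toy input the centre pixel is the only one the front end labels as class 1; after both stand-in stages, `argmax_labels(corrected)` assigns every pixel to class 0. Keeping each stage as a function from score maps to score maps is one way to let the stages be swapped or reordered independently of the front end.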
