Video saliency detection method based on Bayesian fusion

A video saliency detection method, applied in the field of image processing, that addresses problems of existing methods such as weak modeling of sequential (inter-frame) relationships, low accuracy and efficiency, and an overly simple temporal-domain structure. Its stated effects are enhanced representation ability, reduced influence, and good experimental results.

Embodiment Construction

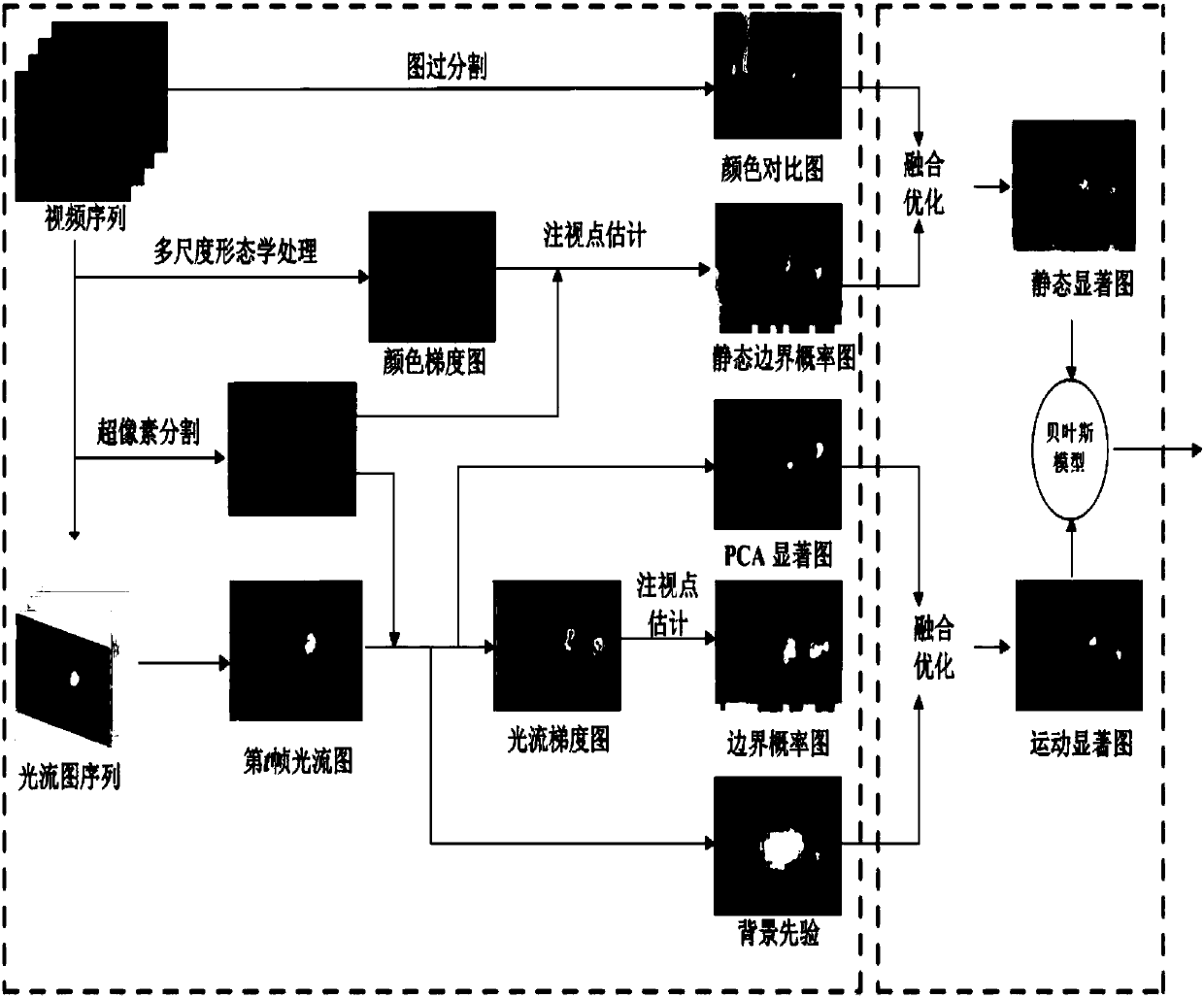

[0032] Embodiments and effects of the present invention are described in further detail below with reference to the accompanying drawings.

[0033] Referring to Figure 1, the implementation steps of the present invention are as follows:

[0034] Step 1: Obtain the static saliency map of the video sequence.

[0035] 1.1) Obtain the static boundary probability saliency map

[0036] For each frame image $F_k$ of the original video sequence $F=\{F_1, F_2, \dots, F_k, \dots, F_l\}$, where $k = 1, 2, \dots, l$ is the frame index of the image in the video sequence, a multi-scale morphological estimation method is used to obtain a static boundary map, which gives the boundaries of the targets in the image;
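The source names a multi-scale morphological estimation method without giving its details. As a rough illustration only, the sketch below assumes one common variant: the morphological gradient (grey dilation minus grey erosion) computed with square structuring elements of several sizes, with each scale normalized to [0, 1] and the scales then averaged into a boundary map. The function names `morphological_gradient` and `multiscale_boundary_map` and the default radii are hypothetical, not taken from the patent.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def morphological_gradient(img: np.ndarray, radius: int) -> np.ndarray:
    """Grey dilation minus grey erosion with a (2r+1)x(2r+1) square element.

    Hypothetical helper; borders are handled by edge replication.
    """
    size = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    windows = sliding_window_view(padded, (size, size))  # shape (H, W, size, size)
    dilated = windows.max(axis=(-2, -1))
    eroded = windows.min(axis=(-2, -1))
    return dilated - eroded


def multiscale_boundary_map(gray: np.ndarray, radii=(1, 2, 3)) -> np.ndarray:
    """Assumed multi-scale boundary map: average of per-scale normalized gradients."""
    gray = gray.astype(np.float64)
    scales = []
    for r in radii:
        g = morphological_gradient(gray, r)
        peak = g.max()
        # Normalize each scale to [0, 1] so no single scale dominates.
        scales.append(g / peak if peak > 0 else g)
    # The mean of values in [0, 1] stays in [0, 1].
    return np.mean(np.stack(scales), axis=0)
```

Averaging normalized scales is one simple way to combine them; a weighted sum or a per-pixel maximum would be equally plausible readings of "multi-scale" here.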

[0037] The boundary probability value corresponding to pixel … in $F_k$ is denoted …, where $i$ indexes the pixels in image $F_k$. The SLIC superpixel segmentation algorithm is applied to $F_k$ to obtain its corresponding superpixel block set …, where … is the set of superpixel blocks, $N_k$ … the $j$th...
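The truncated paragraph pairs a per-pixel boundary probability with SLIC superpixel blocks. A minimal sketch, assuming the value assigned to each superpixel is the mean boundary probability of the pixels inside it (the source's actual aggregation is cut off), is given below. The label map is taken as an input so the example does not depend on a particular SLIC implementation; the helper names are hypothetical.

```python
import numpy as np


def superpixel_boundary_probability(labels: np.ndarray, pixel_prob: np.ndarray) -> np.ndarray:
    """Assumed aggregation: mean pixel boundary probability per superpixel.

    labels: non-negative integer superpixel label per pixel (e.g. from SLIC).
    Returns an array whose entry j is the mean probability of superpixel j.
    """
    flat_labels = labels.ravel()
    n = int(flat_labels.max()) + 1
    sums = np.bincount(flat_labels, weights=pixel_prob.ravel(), minlength=n)
    counts = np.bincount(flat_labels, minlength=n)
    means = np.zeros(n)
    # Labels with no pixels keep a value of 0 instead of dividing by zero.
    np.divide(sums, counts, out=means, where=counts > 0)
    return means


def superpixel_map_to_pixels(labels: np.ndarray, sp_values: np.ndarray) -> np.ndarray:
    """Hypothetical helper: paint each pixel with its superpixel's value."""
    return sp_values[labels]
```

In practice the label map could come from `skimage.segmentation.slic(image, n_segments=..., compactness=...)`; a label that never occurs (for example 0, when SLIC starts numbering at 1) simply receives a value of 0.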
