Method for converting lip image feature into speech coding parameter

A technology in the fields of speech coding and image features, applied in speech analysis, speech synthesis, neural learning methods, etc. It addresses the problem of a complex conversion process and makes the converter convenient to construct and train.

AI Technical Summary

Problems solved by technology

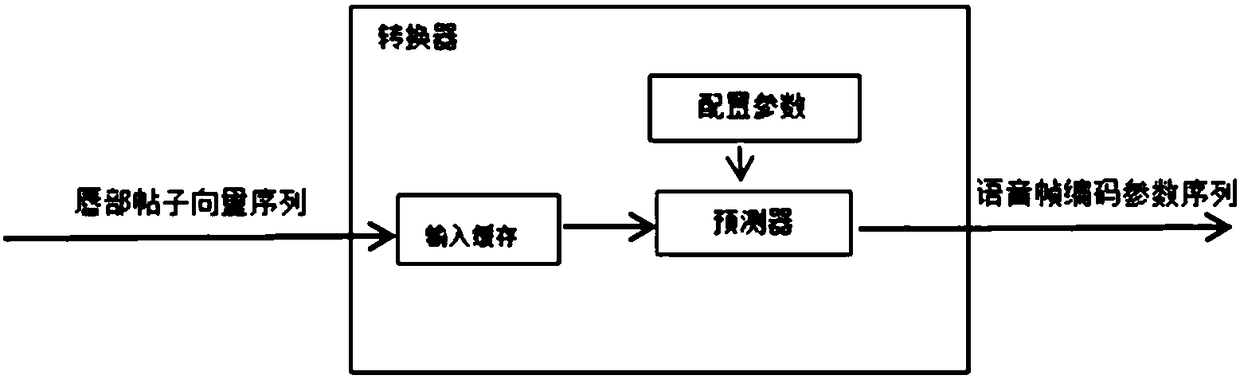

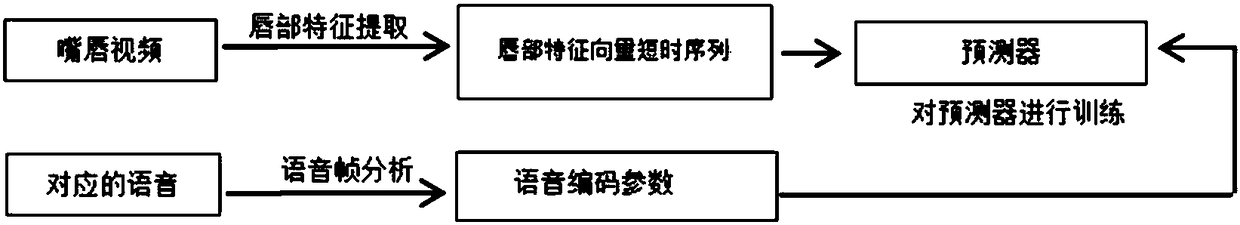


Examples

Embodiment 1

[0048] The following is a specific implementation. The method and principle of the present invention are not limited to the specific numbers given in it.

[0049] (1) The predictor can be implemented as an artificial neural network, although it can also be built with other machine learning techniques. In the following, the predictor is an artificial neural network; that is, "predictor" and "neural network" refer to the same thing.

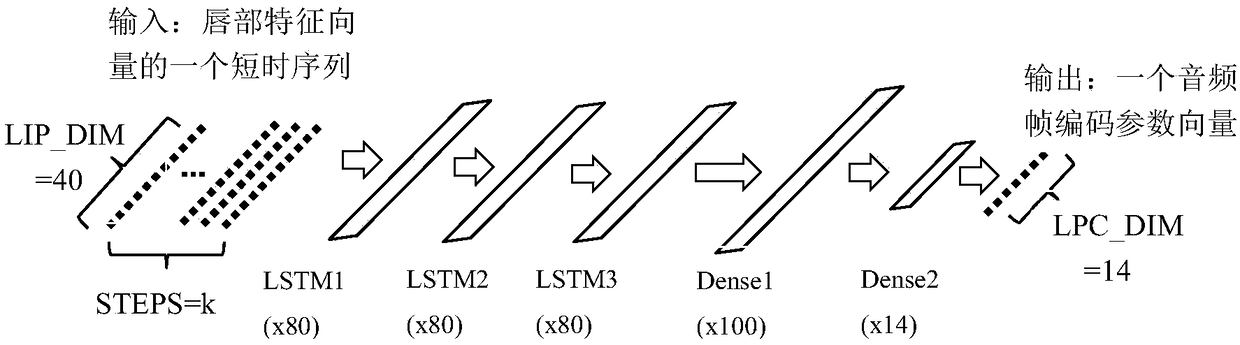

[0050] In this embodiment, the neural network consists of three LSTM layers followed by two fully connected (Dense) layers, connected in sequence, as shown in image 3. A Dropout layer is inserted between every two adjacent layers, and dropout is also applied to the internal recurrent (feedback) connections of each LSTM layer. For clarity, the Dropout layers are not drawn in the figure.

[0051] Each of the three LSTM layers has 80 neurons, and the first two LSTM layers use "return_sequences" mode, so they pass their full output sequence on to the next LSTM layer. The two Dense layers have 100 and 14 neurons, respectively.
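The layer arrangement in paragraphs [0050] and [0051] can be made concrete with a small shape and parameter-count calculation. The sketch below is plain Python, not the patent's code. It assumes Keras-style LSTM and Dense layers (one bias vector per LSTM gate), a hypothetical dropout rate of 0.2 for both the Dropout layers and the recurrent dropout, and a hypothetical input of 25 frames of 20-dimensional lip image features, because the source does not state the input size in this passage. The helper names `Layer`, `build_stack` and `summarize` are illustrative only. The calculation shows how "return_sequences" keeps the time axis through the first two LSTM layers, while the third LSTM emits a single 80-dimensional vector that the Dense layers map to 14 outputs.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Layer:
    kind: str                       # "lstm", "dense" or "dropout"
    units: int = 0                  # neurons; unused for dropout
    return_sequences: bool = False  # LSTM only: emit the whole sequence?
    rate: float = 0.0               # dropout rate (hypothetical value)
    recurrent_dropout: float = 0.0  # LSTM only: dropout on recurrent links (hypothetical value)


def build_stack(rate: float = 0.2) -> List[Layer]:
    """3 x LSTM(80) + Dense(100) + Dense(14), Dropout between adjacent layers."""
    core = [
        Layer("lstm", 80, return_sequences=True, recurrent_dropout=rate),
        Layer("lstm", 80, return_sequences=True, recurrent_dropout=rate),
        Layer("lstm", 80, return_sequences=False, recurrent_dropout=rate),
        Layer("dense", 100),
        Layer("dense", 14),
    ]
    stack: List[Layer] = []
    for i, layer in enumerate(core):
        if i > 0:
            # one Dropout layer between every two adjacent layers
            stack.append(Layer("dropout", rate=rate))
        stack.append(layer)
    return stack


def summarize(stack: List[Layer], timesteps: int,
              input_dim: int) -> List[Tuple[str, Tuple[int, ...], int]]:
    """Return (kind, output_shape, n_params) per layer, batch axis omitted."""
    shape: Tuple[int, ...] = (timesteps, input_dim)
    rows = []
    for layer in stack:
        feat = shape[-1]
        if layer.kind == "lstm":
            # 4 gates, each with input weights, recurrent weights and a bias
            params = 4 * (layer.units * (feat + layer.units) + layer.units)
            # return_sequences keeps the time axis; otherwise only the last step
            shape = (timesteps, layer.units) if layer.return_sequences else (layer.units,)
        elif layer.kind == "dense":
            params = feat * layer.units + layer.units  # weights + bias
            shape = shape[:-1] + (layer.units,)
        else:
            params = 0  # dropout has no weights and keeps the shape
        rows.append((layer.kind, shape, params))
    return rows


if __name__ == "__main__":
    # Hypothetical input: 25 frames of 20-dimensional lip image features
    rows = summarize(build_stack(), timesteps=25, input_dim=20)
    for kind, shape, params in rows:
        print(f"{kind:8s} {str(shape):10s} {params}")
    print("total", sum(p for _, _, p in rows))
```

With these assumed inputs the stack has 144,874 trainable parameters, most of them in the three LSTM layers. Changing the input feature dimension affects only the first LSTM layer's count, and the number of frames affects no count at all.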

[0052] The first...
