Data classification method

A data classification method and classifier technology in the field of data processing. It addresses problems such as growing sample weights, difficult training, and incomplete training, with the effect of ensuring that training is complete and improving classification accuracy and performance.

Embodiment Construction

[0041] The present invention will be further described below in conjunction with the accompanying drawings and specific preferred embodiments, but the protection scope of the present invention is not limited thereby.

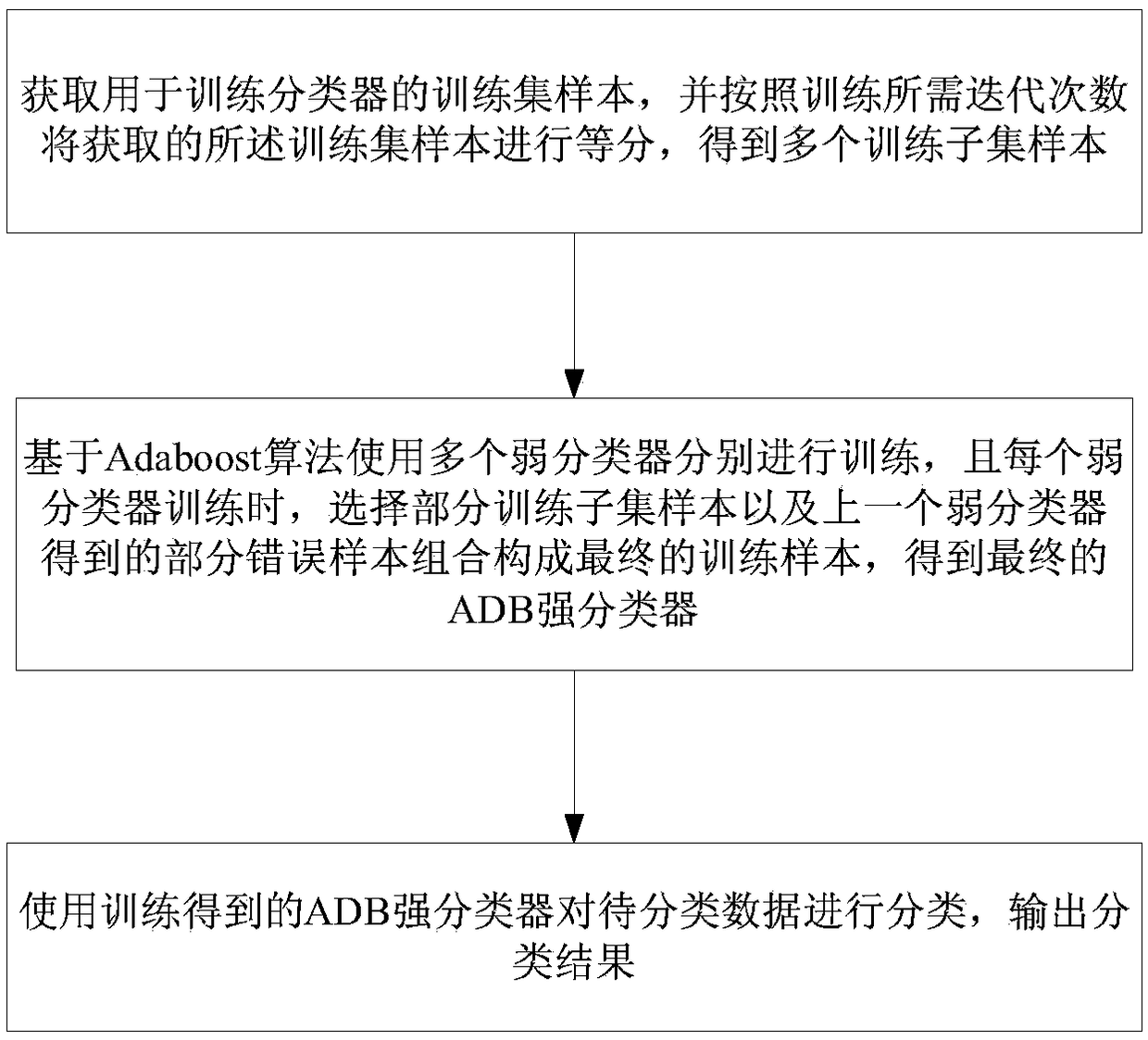

[0042] As shown in Figure 1, the data classification method of this embodiment includes the following steps:

[0043] S1. Obtain the training set samples used to train the classifier, and divide them equally according to the number of iterations required for training, yielding multiple training subset samples;
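The equal division in step S1 can be sketched as follows. This is a minimal illustration, not the patented implementation: the function name `split_into_subsets`, the use of NumPy arrays, and the random shuffle before splitting are all assumptions; the text only states that the training set is divided equally by the number of iterations.

```python
import numpy as np

def split_into_subsets(X, y, n_iterations, seed=0):
    """Split (X, y) into n_iterations near-equal training subsets.

    Assumption: samples are shuffled first so each subset is a random
    sample of the full set; the source does not say how samples are ordered.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    # array_split tolerates sizes that are not an exact multiple of n_iterations
    index_chunks = np.array_split(order, n_iterations)
    return [(X[idx], y[idx]) for idx in index_chunks]
```

With 10 samples and 5 iterations, for example, each subset would hold 2 samples, and every sample would appear in exactly one subset.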

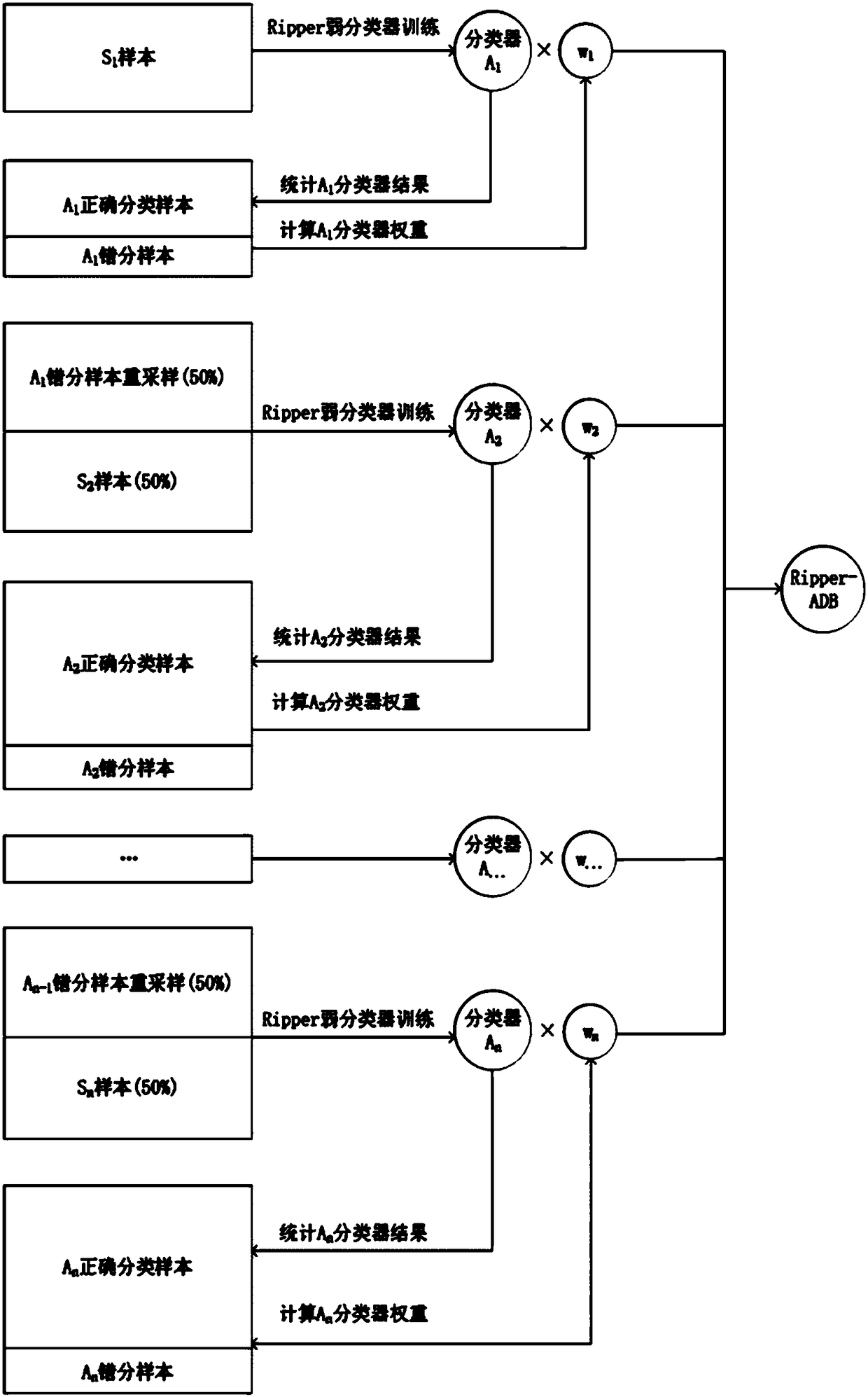

[0044] S2. Based on the Adaboost algorithm, multiple weak classifiers are trained, one on each training subset sample. When each weak classifier is trained, part of its training subset samples and some of the error samples obtained by the previous weak classifier are selected to form that weak classifier's final training samples. The final ADB strong classifier is obtained from the weak classifiers after training;
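One possible reading of step S2 is sketched below, assuming `subsets` is a list of `(X, y)` NumPy array pairs with labels in {-1, +1}. Several details are assumptions rather than part of the source: the weak classifier is a decision stump, each round uses its whole subset (the text says only "part" of it), sample weights are uniform within a round, the `error_fraction` parameter controls how many misclassified samples are carried forward, and the weak classifiers are combined with the standard AdaBoost vote weight `alpha`. All function names are hypothetical.

```python
import numpy as np

def fit_stump(X, y, w):
    """Return the (feature, threshold, polarity, weighted_error) stump with lowest error."""
    best = (0, 0.0, 1, np.inf)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for pol in (1, -1):
                pred = np.where(pol * (X[:, j] - thr) >= 0, 1, -1)
                err = w[pred != y].sum()
                if err < best[3]:
                    best = (j, thr, pol, err)
    return best

def stump_predict(stump, X):
    j, thr, pol, _ = stump
    return np.where(pol * (X[:, j] - thr) >= 0, 1, -1)

def train_subset_adaboost(subsets, error_fraction=0.5, seed=0):
    """Train one stump per subset, adding some errors of the previous stump."""
    rng = np.random.default_rng(seed)
    ensemble = []
    carry_X = carry_y = None
    for X_sub, y_sub in subsets:
        if carry_X is not None and len(carry_X) > 0:
            # Final training samples: this subset plus carried-over error samples
            X_tr = np.vstack([X_sub, carry_X])
            y_tr = np.concatenate([y_sub, carry_y])
        else:
            X_tr, y_tr = X_sub, y_sub
        w = np.full(len(y_tr), 1.0 / len(y_tr))  # assumption: uniform weights
        stump = fit_stump(X_tr, y_tr, w)
        err = min(max(stump[3], 1e-10), 1 - 1e-10)  # keep the log finite
        alpha = 0.5 * np.log((1 - err) / err)       # standard AdaBoost vote weight
        ensemble.append((alpha, stump))
        # Select a fraction of this round's misclassified samples for the next round
        wrong = np.flatnonzero(stump_predict(stump, X_tr) != y_tr)
        keep = rng.permutation(wrong)[: int(np.ceil(error_fraction * len(wrong)))]
        carry_X, carry_y = X_tr[keep], y_tr[keep]
    return ensemble

def predict(ensemble, X):
    """Strong classifier: sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(stump, X) for alpha, stump in ensemble)
    return np.where(score >= 0, 1, -1)
```

Because each round sees a fresh subset, hard samples get extra attention by being carried into the next round's training data rather than by having their weights grow, which is one way to interpret how the method avoids the growing-weight problem named in the summary.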

[0045] S3. Use the t...
