Parameter adjustment method, device, and storage medium

A technology for adjusting parameters and hyperparameters, applied in the field of neural networks, which addresses the rising time complexity of hyperparameter search and the risk of missing the optimal hyperparameter combination, both of which leave room for improvement.



Examples

Embodiment 1

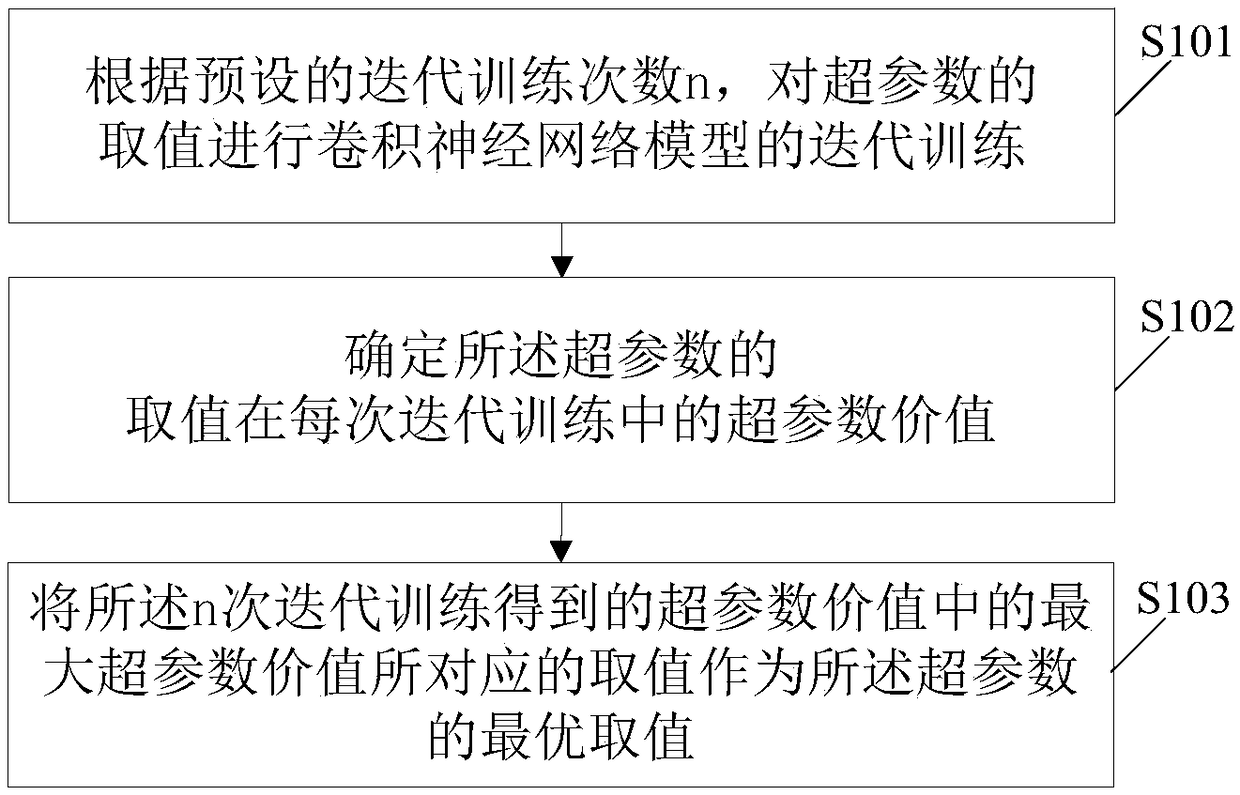

[0022] This embodiment of the present invention provides a parameter adjustment method. As shown in Figure 1, the method includes:

[0023] S101. According to a preset number of training iterations n, iteratively train the convolutional neural network model, using a value of the hyperparameter in each training iteration;

[0024] S102. For each training iteration, determine the resulting hyperparameter value for the value of the hyperparameter used in that iteration;

[0025] S103. Among the hyperparameter values obtained from the n training iterations, take the value of the hyperparameter corresponding to the largest one as the optimal value of the hyperparameter.
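Steps S101 to S103 can be illustrated with a minimal Python sketch. It treats the "hyperparameter value" of step S102 as a score where higher is better, and it assumes a hypothetical `train_and_score(value)` callable that trains the model with one value of the hyperparameter and returns that score. How the value for each iteration is chosen is not stated in the text above, so the sketch simply takes iteration i's value from a supplied list; both the callable and the function name `select_optimal_value` are illustrative, not the patent's.

```python
from typing import Callable, Sequence, Tuple


def select_optimal_value(
    candidates: Sequence[float],
    n: int,
    train_and_score: Callable[[float], float],
) -> Tuple[float, float]:
    """Return (optimal_value, best_score) after n training iterations.

    Assumption: iteration i uses candidates[i]; the source does not say how
    the value for each iteration is chosen.
    """
    if not 1 <= n <= len(candidates):
        raise ValueError("n must be between 1 and len(candidates)")

    scores = []
    for i in range(n):
        # S101: train the model with this iteration's value (hypothetical callable).
        # S102: record the resulting "hyperparameter value", treated as a score.
        scores.append(train_and_score(candidates[i]))

    # S103: the value with the largest score is taken as the optimal value.
    best = max(range(n), key=lambda i: scores[i])
    return candidates[best], scores[best]


if __name__ == "__main__":
    # Toy stand-in for real training: the score peaks at a learning rate of 0.01.
    def toy_score(lr: float) -> float:
        return -abs(lr - 0.01)

    print(select_optimal_value([0.1, 0.03, 0.01, 0.003], 4, toy_score))
```

Because `max` returns the first maximal index, ties are resolved in favour of the earliest iteration; any other tie-breaking rule would be an equally valid choice.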

[0026] In detail, a convolutional neural network has many hyperparameters, but the network is insensitive to some of them: whether these take their optimal values has little effect on model performance. Therefore, the hyperparameters in this embodiment of the present invention are generally...

Embodiment 2

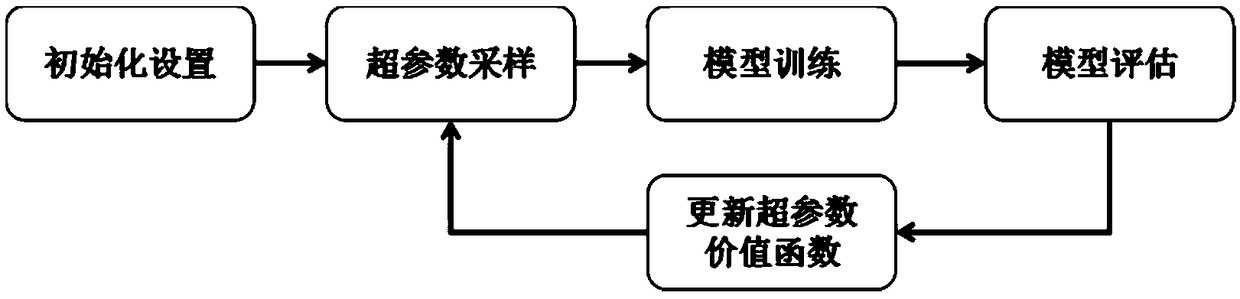

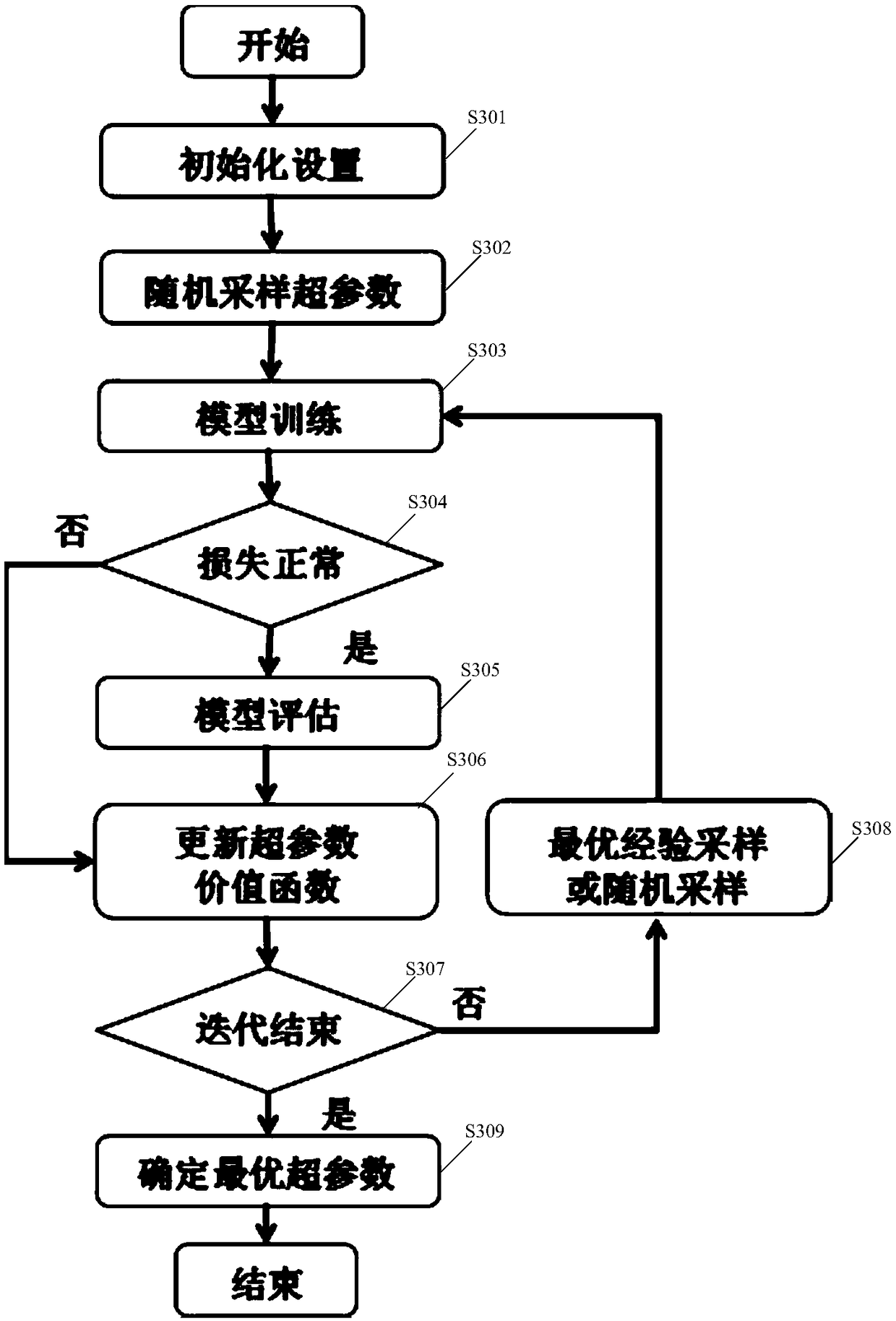

[0064] This embodiment of the present invention provides an optional parameter adjustment method. As shown in Figure 2, the method of this embodiment incorporates the method of Embodiment 1 and enables a fast search for the optimal hyperparameters of the convolutional neural network model. The main process includes initialization, updating hyperparameter values, hyperparameter sampling, model training and evaluation, and determination of the optimal hyperparameters. In detail, as shown in Figure 3, the method of this embodiment includes:

[0065] S301. Initialization. Configure the hyperparameters to be tuned automatically and the value range of each one, which together form the hyperparameter value space, and set the number of samples for each hyperparameter.

[0066] For example, set the hyperparameters to be tuned automatically, their value ranges, and the number of samples for each hyperparameter. There ...
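The initialization of S301 and the main process listed in [0064] can be sketched together in Python. This is one plausible reading under stated assumptions, not the patented procedure: each hyperparameter is described by a numeric range and a sample count (the hypothetical `HyperparameterSpec`), samples are evenly spaced within the range, and hyperparameters are tuned one at a time while the others are held at their current values. The `evaluate(config)` callable is a hypothetical stand-in for model training and evaluation.

```python
from dataclasses import dataclass
from math import prod
from typing import Callable, Dict, List


@dataclass(frozen=True)
class HyperparameterSpec:
    """One hyperparameter to tune automatically (S301); names are illustrative."""
    name: str
    low: float
    high: float
    num_samples: int

    def __post_init__(self) -> None:
        if self.low > self.high:
            raise ValueError(f"{self.name}: low must not exceed high")
        if self.num_samples < 1:
            raise ValueError(f"{self.name}: num_samples must be at least 1")

    def samples(self) -> List[float]:
        # Assumption: evenly spaced samples over [low, high]; midpoint if only one.
        if self.num_samples == 1:
            return [(self.low + self.high) / 2]
        step = (self.high - self.low) / (self.num_samples - 1)
        return [self.low + i * step for i in range(self.num_samples)]


def full_grid_size(specs: List[HyperparameterSpec]) -> int:
    """Configurations an exhaustive grid over the value space would need to train."""
    return prod(s.num_samples for s in specs)


def tune(
    specs: List[HyperparameterSpec],
    evaluate: Callable[[Dict[str, float]], float],
) -> Dict[str, float]:
    """Tune one hyperparameter at a time (assumed strategy, not the patented one)."""
    # Initialization: start each hyperparameter at the midpoint of its range.
    current = {s.name: (s.low + s.high) / 2 for s in specs}
    for s in specs:
        # Hyperparameter sampling, then model training and evaluation: score each
        # sample with the other hyperparameters held at their current values.
        scored = [(evaluate({**current, s.name: v}), v) for v in s.samples()]
        # Updating hyperparameter values: keep the best-scoring sample.
        current[s.name] = max(scored, key=lambda pair: pair[0])[1]
    # Determination of optimal hyperparameters: the final configuration.
    return current
```

In this sketch, tuning one hyperparameter at a time evaluates the sum of the sample counts rather than their product (`full_grid_size`), which keeps the cost low but can miss combinations where hyperparameters interact.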

Embodiment 3

[0093] An embodiment of the present invention provides a parameter adjustment device for a convolutional neural network model. As shown in Figure 4, the device includes a memory 10 and a processor 12. The memory 10 stores a computer program, and the processor 12 executes the computer program to implement the steps of the method described in either Embodiment 1 or Embodiment 2.

[0094] For example, the processor 12 executes the computer program to implement the following steps:

[0095] According to a preset number of training iterations n, iteratively train the convolutional neural network model, using a value of the hyperparameter in each training iteration;

[0096] For each training iteration, determine the resulting hyperparameter value for the value of the hyperparameter used in that iteration;

[0097] Among the hyperparameter values obtained from the n training iterations, take the value of the hyperparameter corresponding to the largest one as the optimal value of the hyperparameter.

[0098] In the em...
