A base station caching method under time-varying content popularity

A base station caching technique for time-varying content popularity, applied in network traffic/resource management, wireless communication, electrical components, and related fields. It addresses problems such as caching performance that needs improvement, and has the effects of increasing the cache hit rate, improving user satisfaction, and easing the load on backhaul links.


Embodiment Construction

[0022] The technical solution of the invention is described in further detail below with reference to the accompanying drawings and specific embodiments.

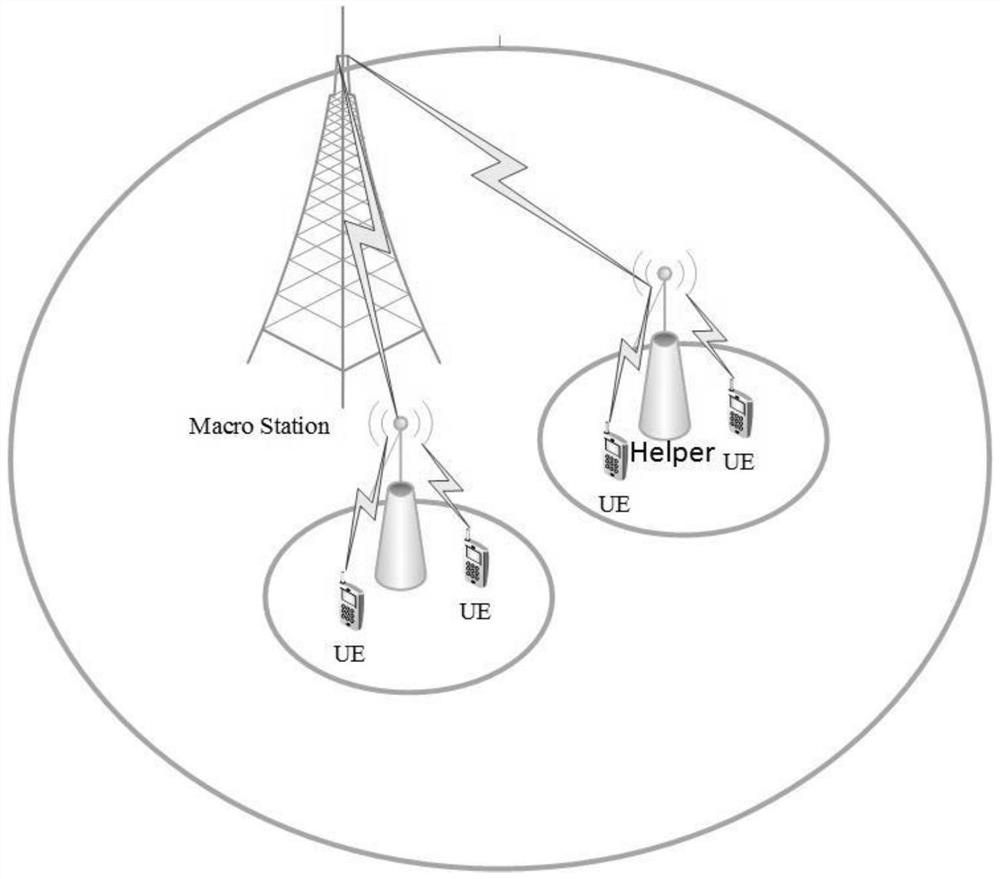

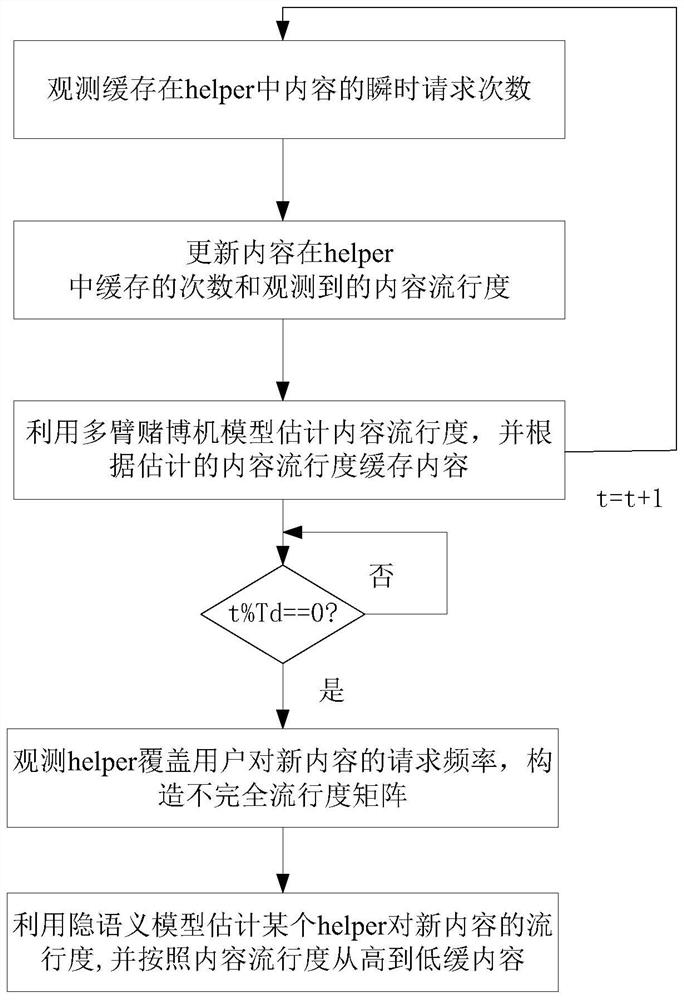

[0023] Consider a macro cellular network that deploys N helpers, and let the helpers be represented by a set. As shown in Figure 1, each helper is connected to the macro base station through a reliable backhaul link and provides high-speed data services to the users it serves. Each helper is assumed to have a fixed cache capacity M, and the content controller in the macro base station determines the cached content of each helper according to the caching policy. Time is divided into slots, and each slot contains a user request phase and a cache placement phase. In the user request phase, the users served by a helper request content. If the requested content is stored in the helper, the helper processes the request and quickly transmits the content to the user without placing any load on the macro cellular network. ...
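The slot structure described above (N helpers with cache capacity M, and each slot split into a user request phase and a cache placement phase) can be illustrated with a small simulation. This is a minimal Python sketch under stated assumptions: the names `Helper`, `request_phase`, `placement_phase`, and `run_slot` are hypothetical, contents are plain string IDs, a request that misses the helper's cache is assumed to be fetched over the macro cellular network, and the placement rule (each helper keeps the M contents most requested by its own users in the previous slot) is a simple stand-in chosen for illustration. It is not the caching policy claimed in the patent, whose details are not shown in this excerpt.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Helper:
    """A helper (small-cell cache) holding at most `capacity` (= M) content items."""
    capacity: int
    cache: set = field(default_factory=set)


def request_phase(helper, requests):
    """User request phase for one helper.

    Returns (hits, misses, counts). Hits are served from the helper's cache
    with no load on the macro network; misses are assumed to be fetched
    through the macro cellular network / backhaul.
    """
    hits = sum(1 for content in requests if content in helper.cache)
    misses = len(requests) - hits
    return hits, misses, Counter(requests)


def placement_phase(helper, counts):
    """Cache placement phase (HYPOTHETICAL policy, not the patented one):
    cache the `capacity` contents most requested at this helper last slot."""
    helper.cache = {content for content, _ in counts.most_common(helper.capacity)}


def run_slot(helpers, requests_per_helper):
    """Run one time slot: each helper serves its users' requests, then the
    controller refreshes its cache. Returns the slot's cache hit rate."""
    total_hits = total_requests = 0
    for helper, requests in zip(helpers, requests_per_helper):
        hits, misses, counts = request_phase(helper, requests)
        total_hits += hits
        total_requests += hits + misses
        placement_phase(helper, counts)
    return total_hits / total_requests if total_requests else 0.0


if __name__ == "__main__":
    helpers = [Helper(capacity=2), Helper(capacity=2)]
    # Slot 1: caches start empty, so every request misses.
    print(run_slot(helpers, [["a", "a", "b", "b", "b", "c"], ["c", "c", "d"]]))  # 0.0
    # Slot 2: helper 0 now caches {"a", "b"}, helper 1 caches {"c", "d"}.
    print(run_slot(helpers, [["a", "b", "c"], ["d", "e"]]))  # 0.6
```

Because this stand-in policy only reacts to the previous slot's requests, it tracks popularity changes quickly but forgets older demand entirely; a policy designed for time-varying popularity would typically weigh history and prediction more carefully.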
