Video super-resolution reconstruction method based on multi-memory and mixed loss

A super-resolution reconstruction technology using a mixed loss, applied in the field of super-resolution reconstruction constrained by a mixed loss function. It addresses problems such as limited performance, limited effect, and the memory consumption of the sub-pixel motion compensation layer, and achieves fast convergence and enhanced feature expressiveness.


Embodiment Construction

[0017] To help those of ordinary skill in the art understand and implement the present invention, the present invention is described in further detail below with reference to the accompanying drawings and embodiments. It should be understood that the embodiments described here serve only to illustrate and explain the present invention and are not intended to limit it.

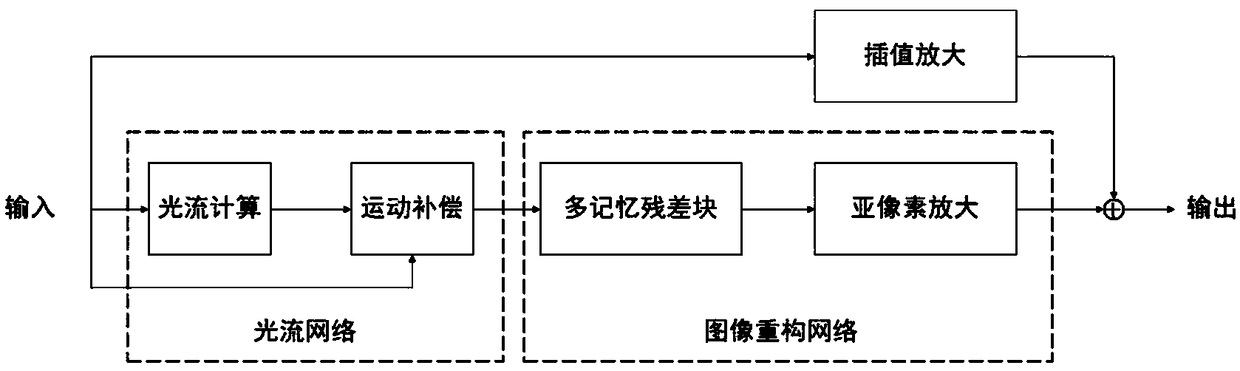

[0018] Referring to Figure 1, the satellite image super-resolution reconstruction method provided by the present invention comprises the following steps:

[0019] A video super-resolution reconstruction method based on multi-memory and mixed loss, characterized in that it comprises the following steps:

[0020] Step 1: Select a number of videos as training samples, crop an image of N×N pixels from the same position in each video frame as the high-resolution learning target, and downsample it by a factor of r to ob...
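The data preparation in Step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the function name `make_training_pair` and the crop offsets `top`/`left` are hypothetical, and r×r block averaging stands in for the downsampling operator because the source text is truncated before it names one (bicubic interpolation is another common choice). Frames are assumed to be NumPy arrays of shape (H, W) or (H, W, C), and N is assumed to be divisible by r.

```python
import numpy as np


def make_training_pair(frames, top, left, n, r):
    """Hypothetical helper: crop an n x n high-resolution patch at the same
    (top, left) position in every frame, then downsample it by a factor r.

    Downsampling here is r x r block averaging, an ASSUMPTION; the source
    does not state which operator it uses.
    """
    if n % r != 0:
        raise ValueError("n must be divisible by r")
    # Same crop window for every frame, stacked into shape (T, n, n[, C]).
    hr = np.stack([f[top:top + n, left:left + n] for f in frames]).astype(np.float64)
    if hr.shape[1:3] != (n, n):
        raise ValueError("crop window exceeds frame bounds")
    t, rest = hr.shape[0], hr.shape[3:]
    # Split each spatial axis into (n // r, r) blocks and average each r x r block.
    lr = hr.reshape(t, n // r, r, n // r, r, *rest).mean(axis=(2, 4))
    return hr, lr
```

For example, three 8×8 grayscale frames cropped with N = 4 at offset (2, 2) and downsampled with r = 2 yield a high-resolution stack of shape (3, 4, 4) and a low-resolution stack of shape (3, 2, 2). Using one fixed crop position across all frames keeps the patches temporally aligned, which is what the step's "same position in each video frame" requirement implies.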
